-

Notifications

You must be signed in to change notification settings - Fork 86

Logbook 2022 H2

- What is this about?

- Newer entries

- December 2022

- November 2022

- October 2022

- September 2022

- August 2022

- July 2022

- Older entries

- Today I'll go through the tx traces and related code to alter the graph so we can publish it on the website.

- While looking at the code for the token minting I am noticing a FIXME which sounds like fun:

-- FIXME: This doesn't verify that: -- -- (a) A ST is minted with the right 'HydraHeadV1' name -- (b) PTs's name have the right shape (i.e. 28 bytes long) - Let's do this real quick so we get rid of this FIXME. I am using

lengthOfBytestringto check the length of PT tokens - It is a bit awkward that we are not separating PT's and ST and do a separate check but it also works if we check while folding that the token is EITHER of length 28 (for PT's) OR that it has the name "HydraHeadV1" for ST token.

- Ok submitted a PR so now we can move on with the tx traces.

- Continuing with the ST token checks in the head validators

- After removing traces tx sizes went down for around 1kb. Decided to leave the traces out.

- Let's see how much the tx size is reduced by removing traces from initial and commit validators and if they drop significantly I'll remove them.

- Results for the fanout tx, top ones are new and the bottom ones are from the previous commit:

UTxO Tx size % max Mem % max CPU Min fee ₳

1 13459 10.90 4.72 0.86

2 13430 11.79 5.34 0.88

3 13531 13.69 6.37 0.90

5 13603 16.98 8.23 0.95

10 13718 23.96 12.38 1.04

50 15157 85.19 47.72 1.85

59 15477 99.16 55.76 2.03

UTxO Tx size % max Mem % max CPU Min fee ₳

1 14272 10.91 4.72 0.90

2 14309 12.18 5.50 0.92

3 14213 12.95 6.08 0.92

5 14350 16.66 8.11 0.97

10 14595 24.36 12.53 1.08

50 15972 84.90 47.61 1.88

59 16226 98.49 55.49 2.06

So we can see the drop in the tx size around 750/800 bytes. I'll leave the traces out for now and consult with the team if they think we should still keep them around while working on smart contracts.

- Refactoring the code and preparing it for a review.

- Already worked with Arnaud on this and we had a great pairing session before Christmas, go Hydra!

- Each head script datum now contains the head policy id

- Need to make sure every validator checks for presence and correctness of the ST token

- With the new changes the tx size for the fanout tx exceeds 16kb which is a new thing

- Fanout tx doesn't use reference inputs so this looks lika a way forward

- Adding ref inputs didn't work out the way I thought it would. Decided to concentrate on CollectCom tx being too big instead.

- Fixed the collectCom mutation test by adding the logic to check if head id was not manipulated

- Fixed fanout tx size by reducing the number of outputs from 70 to 60. This needs investigating. Tx size is expected to grow bigger

since I added some hew values in the head

Statebut it would be good to start reducing script sizes since we are at point where we are dealing with the on-chain part of the code more so ideally we will get some time to optimize scripts. - Let's check how much we can reduce script size just by removing traces. We got 16399 for fanout tx in the previous test run and now after removing traces the tx size is below 16kb. Nice! But unfortunatelly I'd like to keep traces around so let's see what other tricks we can utilize.

- When we wanted to open a head from the

hydra-node:unstableimage, we realised the last released--hydra-script-tx-idis not holding the right hydra scripts (as they changed onmaster)- We also thought that a cross-check could be helpful here. i.e. the

hydra-nodeerroring if the found scripts are not what was compiled into it. Is it not doing this already?

- We also thought that a cross-check could be helpful here. i.e. the

- SN published the scripts onto

previewtestnet usinghydra-nodefrom revision03f64a7dc8f5e6d3a6144f086f70563461d63036with the command

docker run --rm -it -v /data:/data ghcr.io/input-output-hk/hydra-node:unstable publish-scripts --network-id 2 --node-socket /data/cardano-node/node.socket --cardano-signing-key /data/credentials/sebastian.cardano.sk

(adapt as necessary to paths of volumes, sockets and credentials)

- A

--hydra-scripts-tx-idfor revision03f64a7dc8f5e6d3a6144f086f70563461d63036is:9a078cf71042241734a2d37024e302c2badcf319f47db9641d8cb8cc506522e1

-

Implementing PR review comments I am noticing a failing test on hydra-node related to MBT. The test in question is implementation matches model and the problem is that test never finishes in time. After adding

traceDebuginrunIOSimPropI noticed there is aCommandFailedlog message printed but no errors. -

Sprinkled some

traceto appropriate places whereCommandFailed(in theHeadLogic) and also printed the head state when this happens. Turns out head state is inIdleStatewhich is weird since we are trying to do some actions in the test so head must be opened. Also we are trying toCommitright before so might be related. And thecontentsfield contains this kind of utc time1970-01-01T00:00:16.215388918983Zso might be that changes in theTimeHandleare related... -

Back to

Modelto try to find the culprit. Looks to me like the Init tx didn't do what it is supposed to do so we are stuck at this state... -

Intuition tells me this has to do with changes in the contestation period (now it is part of the

Seedphase in MBT) so I inspected where did we set the values and found in themockChainAndNetworkthat we are using the default value for it! Hardcoding it to 1 made the tests pass! -

Turns out it is quite easy to fix this just by introducing the new parameter to

mockChainAndNetworksince we already have the CP coming in from theSeedat hand. All great so far, I am really great to be able to find out the cause of this since I am not that familiar with MBT. Anyway, all tests are green onhydra-nodeso I can proceed doing the PR review changes.

- After getting everything to build in Github actions and (likely still) via Cicero, the docker build is still failing:

#14 767.2 > Error: Setup: filepath wildcard

#14 767.2 > 'config/cardano-configurations/network/preprod/cardano-node/config.json' does

#14 767.2 > not match any files.

-

Checked the working copy and there is a

hydra-cluster/config/cardano-configurations/network/preprod/cardano-node/config.jsonfile checked out -

This workflow feels annoying and lengthy (nix build in a docker image build). Maybe using

nixto build the image will be better: https://nixos.org/guides/building-and-running-docker-images.html -

After switching to nix build for images I run into the same problem again:

> installing > Error: Setup: filepath wildcard > 'config/cardano-configurations/network/preprod/cardano-node/config.json' does > not match any files.-> Is it cabal not knowing these files? When looking at the nix store path with the source it’s missing the submodule files.

-

The store path in question is

/nix/store/fcd86pgvs6mddwyvyp5mhxfwsi63g873-hydra-root-hydra-cluster-lib-hydra-cluster-root/hydra-cluster/config -

It might be because haskell.nix filters too much files out (see https://input-output-hk.github.io/haskell.nix/tutorials/clean-git.html?highlight=cleangit#cleangit)

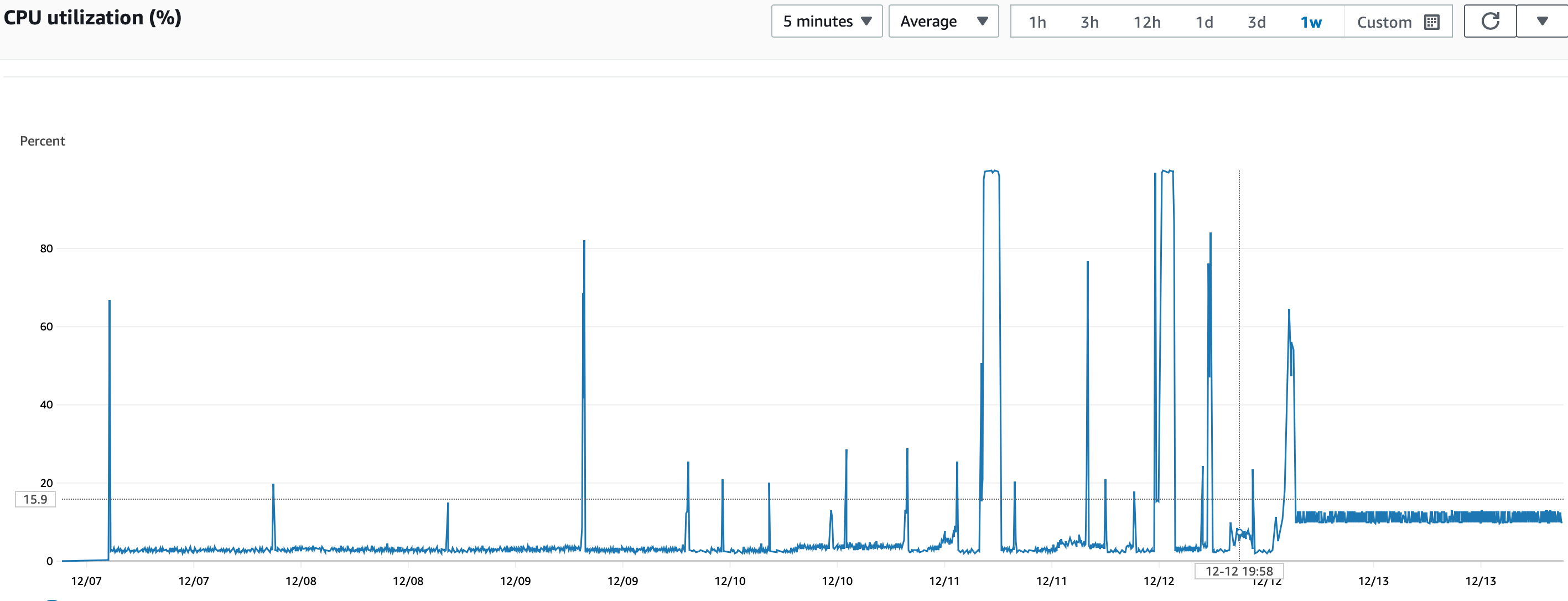

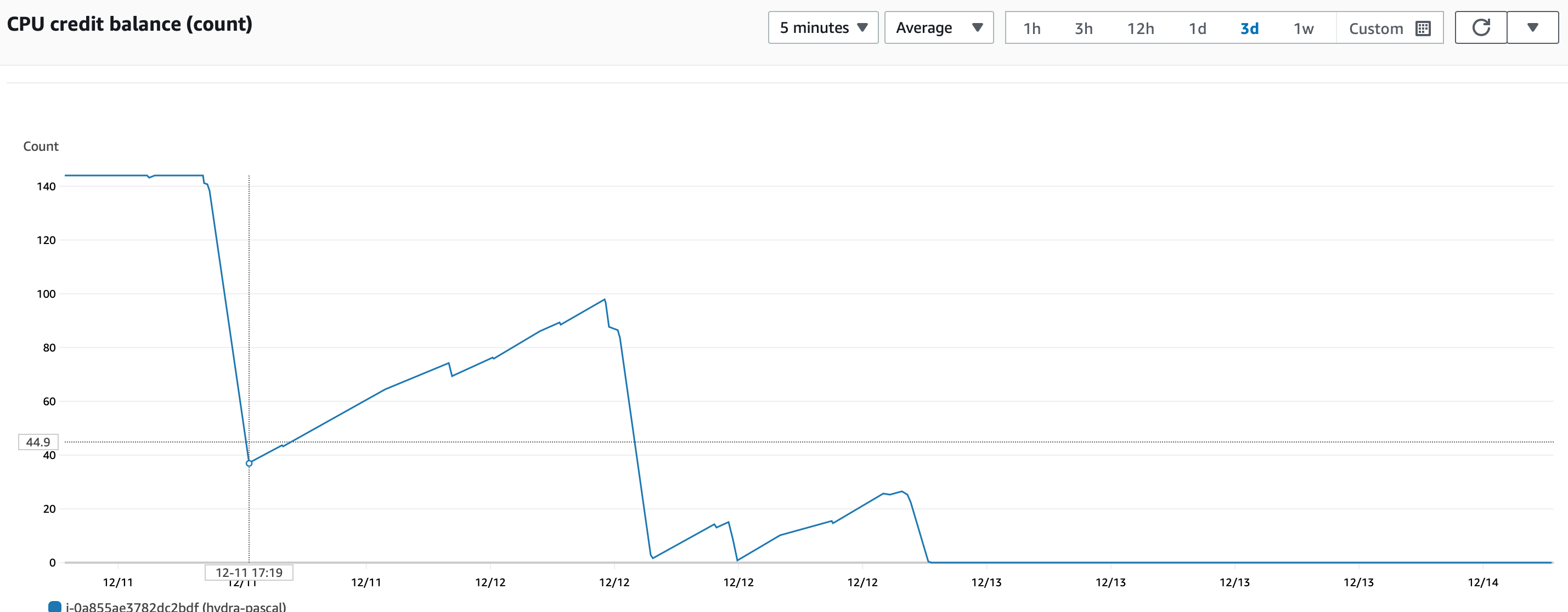

My hydra-node is not respoinding since, approximately, december the 13th at 2am.

When trying to ssh into the EC2 hosting it, I get timeout errors.

When looking at the AWS consoleI see that:

- all the checks for the EC2 are ok (2/2 checks passed);

- the CPU utilization, is stuck at, approximately 11% since december the 13th at 2am UTC;

- the CPU Credit Balance is down to 0 since the same time, more or less.

I understand that:

- december the 11th at 15:06 the EC2 starts consuming the CPU burst credit

- december the 11th at 17:19 the EC2 stops consuming the CPU burst credit and start regaining credits

- december the 12th at 10:31 the EC2 starts consuming the CPU burst credit again

- december the 12th at 12:48 CPU burst credit is down to 0

Then we see some CPU burst credit regained but that's marginal, the machine is mainly at 0 CPU burst.

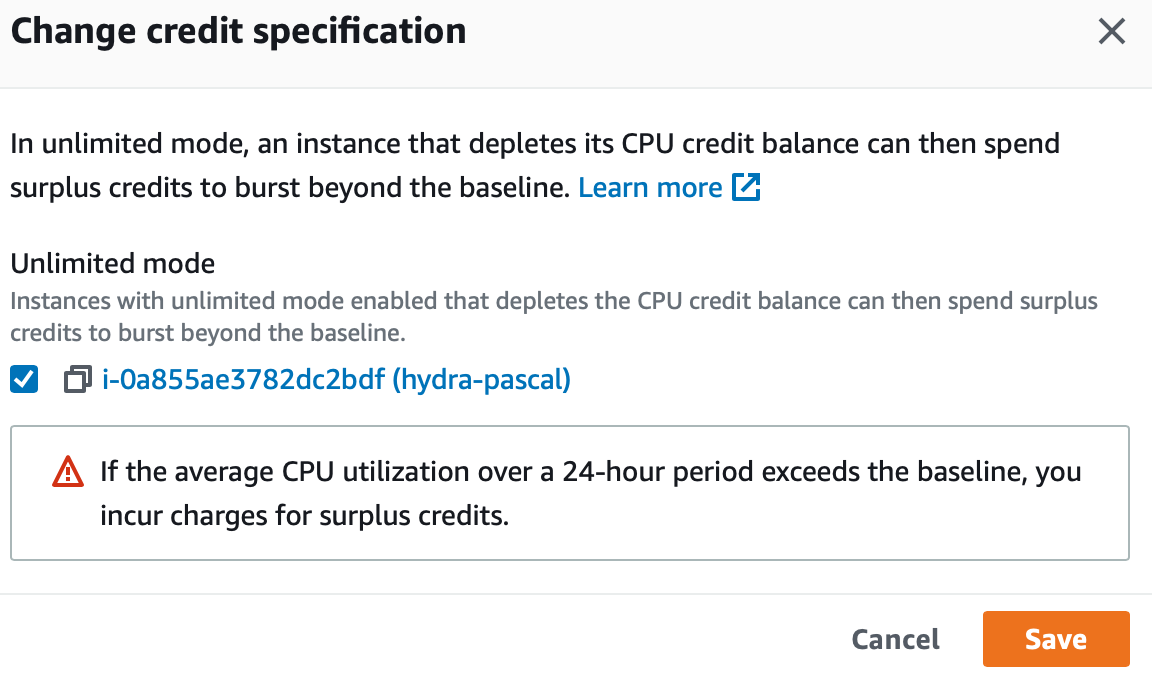

So this EC2 is eating more CPU than it can. I don't know what is consuming the CPU. The burst mode of this instance is set to standard so it can't use more than the daily burst credit. I switch to unlimited to see what happens.

I can see that the machine is now eating as much CPU as it wants. However I'm still not able to connect with ssh. I reboot the machine and now it's ok. I'll let it run and see what happens.

SB had the same problem so we have also change his machine settings but differently so we can compare:

- PG: t2.micro with unlimited credit

- SB: t2.xlarge with standard credit

- Quickly looking into the

PPViewHashesDontMatcherror we receive on the “commitTx using reference scripts” work - The error comes from the

cardano-ledgerand is raised if the script integrity hash of a given tx body does not match the one the ledger (in thecardano-node) computes. - We checked the protocol parameters used yesterday, they are the same.

- What is left are datums, redeemers and the scripts which are converted to “language views”. Are reference scripts also part of the hash? Shouldn’t they be?

- The

cardano-ledgerpasses (and computes) the integrity hash withsNeededscripts.

(Ensemble continued)

-

languagesis used to lookup languages forgetLanguageViewand is coming fromAlonzo.TxInfo! This is a strong indicator, that this function does not take into account reference scripts! Furthermore the return value isSet Language, which would explain why it works for other transactions (abort), but now starts failing forcommit. -

txscriptsis actually fine as it is defined forBabbageEraand would consider reference scripts. But onlyMap.restrictKeysthe passedsNeeded-> where is this coming from. - It ultimately uses function

scriptsNeededFromBodyfor this and it seems it would pick up thespendscripts frominputsTxBodyL. While this not includes reference inputs, it would still resolve tov_initialin our case. i.e. this is fine. - We double checked the

PlutusV2cost model in the protocol parameters again. - Our wallet code, however, is using the

txscriptsof the witness set .. is this different? - We realized that the

langsin the Wallet'sscriptIntegrityHashcomputation is empty, hard-coding it to onlyPlutusV2makes the test pass. Case closed.

How to build a profiled version of hydra packages:

Enter a nix-shell without haskell.nix (It seems haskell.nix requires some more configuration which I was not able to figure out):

nix-shell -A cabalOnly

Configure the following flag in the cabal.project.local

package plutus-core

ghc-options: -fexternal-interpreter

then just run cabal build all --enable-profiling 🎉 Of course it will take ages but then one should be able to run tests and hydra-node with profiling enabled.

I was able to produce a profile report for a single test (the conflict-free liveness property):

Fri Dec 9 13:26 2022 Time and Allocation Profiling Report (Final)

tests +RTS -N -p -RTS -m liveness

total time = 1.44 secs (5767 ticks @ 1000 us, 4 processors)

total alloc = 5,175,508,264 bytes (excludes profiling overheads)

COST CENTRE MODULE SRC %time %alloc

psbUseAsSizedPtr Cardano.Crypto.PinnedSizedBytes src/Cardano/Crypto/PinnedSizedBytes.hs:(211,1)-(213,17) 17.5 0.0

execAtomically.go.WriteTVar Control.Monad.IOSim.Internal src/Control/Monad/IOSim/Internal.hs:(982,55)-(986,79) 9.7 10.0

execAtomically.go.ReadTVar Control.Monad.IOSim.Internal src/Control/Monad/IOSim/Internal.hs:(971,54)-(974,68) 3.3 2.5

foldF Control.Monad.Free.Church src/Control/Monad/Free/Church.hs:194:1-41 2.4 2.2

reschedule.Nothing Control.Monad.IOSim.Internal src/Control/Monad/IOSim/Internal.hs:(690,5)-(715,25) 2.4 1.4

psbToBytes Cardano.Crypto.PinnedSizedBytes src/Cardano/Crypto/PinnedSizedBytes.hs:140:1-46 2.3 11.5

applyRuleInternal Control.State.Transition.Extended src/Control/State/Transition/Extended.hs:(586,1)-(633,34) 2.1 2.5

encodeTerm UntypedPlutusCore.Core.Instance.Flat untyped-plutus-core/src/UntypedPlutusCore/Core/Instance/Flat.hs:(108,1)-(116,67) 2.0 3.3

psbCreate Cardano.Crypto.PinnedSizedBytes src/Cardano/Crypto/PinnedSizedBytes.hs:(216,1)-(222,20) 1.6 0.0

schedule.NewTimeout.2 Control.Monad.IOSim.Internal src/Control/Monad/IOSim/Internal.hs:(340,43)-(354,87) 1.5 0.9

blake2b_libsodium Cardano.Crypto.Hash.Blake2b src/Cardano/Crypto/Hash/Blake2b.hs:(37,1)-(43,104) 1.4 1.4

toBuilder Codec.CBOR.Write src/Codec/CBOR/Write.hs:(102,1)-(103,57) 1.4 1.7

serialise Codec.Serialise src/Codec/Serialise.hs:109:1-48 1.4 2.6

liftF Control.Monad.Free.Class src/Control/Monad/Free/Class.hs:163:1-26 1.2 1.0

stepHydraNode Hydra.Node src/Hydra/Node.hs:(131,1)-(159,51) 1.2 0.7

reschedule.Just Control.Monad.IOSim.Internal src/Control/Monad/IOSim/Internal.hs:(680,5)-(684,66) 1.2 1.5

psbToByteString Cardano.Crypto.PinnedSizedBytes src/Cardano/Crypto/PinnedSizedBytes.hs:143:1-38 1.1 0.4

getSize Flat.Class src/Flat/Class.hs:51:1-20 1.1 0.6

hashWith Cardano.Crypto.Hash.Class src/Cardano/Crypto/Hash/Class.hs:(125,1)-(129,13) 0.9 1.3

. PlutusTx.Base src/PlutusTx/Base.hs:48:1-23 0.9 1.1

toLazyByteString Codec.CBOR.Write src/Codec/CBOR/Write.hs:86:1-49 0.7 8.5

schedule.Output Control.Monad.IOSim.Internal src/Control/Monad/IOSim/Internal.hs:(282,37)-(285,56) 0.7 1.3

getDefTypeCheckConfig PlutusCore.TypeCheck plutus-core/src/PlutusCore/TypeCheck.hs:56:1-74 0.7 1.0

schedule.Atomically Control.Monad.IOSim.Internal src/Control/Monad/IOSim/Internal.hs:(430,41)-(478,24) 0.7 1.6

serialiseAddr Cardano.Ledger.Address src/Cardano/Ledger/Address.hs:146:1-49 0.5 7.2

typeOfBuiltinFunction PlutusCore.Builtin.Meaning plutus-core/src/PlutusCore/Builtin/Meaning.hs:(78,1)-(79,50) 0.4 2.0

Running integration tsets with profiling on gives the following hotspots:

fromList Data.Aeson.KeyMap src/Data/Aeson/KeyMap.hs:236:1-30 16.2 20.9

unsafeInsert Data.HashMap.Internal Data/HashMap/Internal.hs:(888,1)-(918,76) 8.4 10.0

toHashMapText Data.Aeson.KeyMap src/Data/Aeson/KeyMap.hs:540:1-62 6.2 11.9

camelTo2 Data.Aeson.Types.Internal src/Data/Aeson/Types/Internal.hs:(836,1)-(842,33) 4.6 6.4

fromHashMapText Data.Aeson.KeyMap src/Data/Aeson/KeyMap.hs:544:1-66 4.2 8.4

hashByteArrayWithSalt Data.Hashable.LowLevel src/Data/Hashable/LowLevel.hs:(109,1)-(111,20) 2.7 0.0

toList Data.Aeson.KeyMap src/Data/Aeson/KeyMap.hs:242:1-28 2.4 5.0

fromList Data.HashMap.Internal.Strict Data/HashMap/Internal/Strict.hs:638:1-70 2.3 0.0

genericToJSON Data.Aeson.Types.ToJSON src/Data/Aeson/Types/ToJSON.hs:185:1-49 2.3 1.1

flattenObject Data.Aeson.Flatten plutus-core/src/Data/Aeson/Flatten.hs:(37,1)-(46,54) 2.0 2.8

applyCostModelParams PlutusCore.Evaluation.Machine.CostModelInterface plutus-core/src/PlutusCore/Evaluation/Machine/CostModelInterface.hs:191:1-70 1.7 1.6

It seems generating JSON (probably for writing logs?) is the most costly activity that's happening inside the hydra-node.

-

Started with running the hydra cluster tests - I observe

OutsideValidityIntervalUTxOerror when running the single party hydra head test. -

I am suspecting the contestation period value is 1 is causing this based on the log output. Seems like tx validity lower/upper bounds are 18/19 and the network is at slot 19 already so the tx is expired. When changing the contestation period to just 2 the test is passing. I suspected that wait command in the test was causing this so reducing the wait from 600 to 10 and then adding back the contestation period to its original value 1 is also working.

-

Let's run the hydra-cluster and hydra-node tests again to see if there are still issues. All green! We are good to commit and continue.

e4a30f2 -

It is time to tackle the next issue. We want to ignore Init transactions that specify invalid contestation period. And by invalid we consider any contestation period that is different to what we have set when starting up our hydra-node. Ideally we would like to have the log output and/or error out in this case but it seems our architecture is not so flexible to implement this easily. This is one point we would like to address in the future (not the scope of this work here).

-

When observing txs we are already checking if parties/peers match to our node config and all needed PTs exist. I am noticing the readme that hints that this function

observeInitTxshould perhaps return anEitherso we can have some logging in place with the appropriate error message instead of just getting backNothingvalue.Seems this affect a bit bigger piece of code so need to decide if this is a job for a separate PR.

I passed the contestation period coming in from

ChainContexttoobserveInitTxand usedguardto prevent the rest of the code from running in case CP is not matched. Also added a quick test to make sure this is checked (Ignore InitTx with wrong contestation period).f5ef94b -

Now it looks like we got green in all of the tests. Time to see how we can make this code better/remove redundant things we perhaps don't need anymore as the outcome of Init not containing the contestation period. My main suspect is the TUI.hs module and I think we may be able to improve things in there. Or maybe I am just crazy since I didn't find anywhere. Ok, the code I was reffering to is in the MBT (Model Based Testing) framework. I made the changes so that we generate contestation period in the seeding phase instead as part of the

Initconstructor.I made sure all of the places in test code do not use any hardcoded value for the contestation period but re-use the value we have in the underlying context (

ChainContext,HydraContextorEnvironmenttypes)Added the autors option to docusaurus and the actual ADR21 with appropriate avatars.

Now ADR21 is ready to be reviewed!

hydra-node test take 5 minutes to run (excluding compilation time). This makes it painful to run them everytime we change something and it has subtle producitvity impact on the team.

As a first objective, I'd like this tests to take less than a minute. We have 26 test files, that leaves 2.3 seconds per test file.

Time per test file:

-

Hydra.Options: 0.1050 seconds

-

Hydra.Crypto: 0.0062 seconds

-

Hydra.SnapshotStrategy: 0.0655 seconds

-

Hydra.Model: 33.8062 seconds

-

Hydra.Network: 10.2711 seconds

-> Trying to improve Hydra.Network before exploring more

We manage to make existing tests pass by fixing how the start validity time of a close transaction in computed.

Then we use the contestation period as a close grace time replacement for close transactions.

Now that the contestation period has been added as a CLI parameter, we remove it from the API to have something coherent. That means updating all the end to end tests to set the contestation perdiod when starting the node under test instead of on API calls.

Next step is to use the contestation period to filter out head init transaction that we want to be part of. It can be discussed at which level we should do that. However, we already do that kind of stuff for filtering out head init transaction for which we do not agree with the list of members. So we decide to add that in the same part of the code.

Starting from a failing test in hydra-cluster (two heads on the same network do not conflict) - we don't want to just fix it

- we want to really understand what is happening.

We see the error message related to PastHorizon:

user error (PastHorizon {pastHorizonCallStack =

...

,("interpretQuery",SrcLoc {srcLocPackage = "hydra-node-0.9.0-inplace", srcLocModule = "Hydra.Chain.Direct.TimeHandle"

, srcLocFile = "src/Hydra/Chain/Direct/TimeHandle.hs", srcLocStartLine = 91

...

and line related to TimeHandle looks interesting since it points to a place in our code. Namely a function to convert time to slot number slotFromUTCTime.

Ok, so it must be we are handling time incorrectly since we know that we introduced lower tx bound when working on this ADR.

Let's introduce some traces in code to see what do the slots look like when we submit a close tx.

Function name fromPostChainTx doesn't sound

like a good name for it since what we do here is create a tx that will be posted a bit later on after finalizeTx in the submitTx. I am renaming

it to prepareTxToPost.

Traces don't get printed from running the tests in the ghci so let's juse use cabal.

Ok, that was not the issue - prints are still not shown. Let's use error to stop and determine if we are in the right code path.

At this point it is pretty obvoius that constructing a close tx is not erroring out but we know that PastHorizon is happening

when we try to convert time to slots. In the (renamed) function prepareTxToPost we are actually invoking currentPointInTime function so

let's see if that is what is failing here. Bingo! We are correct. This means that the time conversion is failing.

Lets reduce the defaultContestationPeriod

to 10 slots instead of 100 and see what happens. Oh nice, the tests do pass now! But how is this actually related to our tests? My take is we already

plugged in our node's contestationPeriod in the init transaction instead of relying on the client so each time we change this value it needs to

be in sync with the network we are currently in. 100 slots seems to be too much for devnet.

Let's run the hydra-node tests and see if they are green still. It is expected to see json schema missmatches since we introduced a new --contestation-period

param to hydra-node executable. Vast majority of tests are still green but let's wait until the end. Three failing tests - not so bad. Let's inspect.

These are the failing ones:

- Hydra.Node notifies client when postTx throws PostTxError

- Hydra.Options, Hydra Node RunOptions, JSON encoding of RunOptions, produces the same JSON as is found in golden/RunOptions.json

- Hydra.Options, Hydra Node RunOptions, roundtrip parsing & printing

Nice to not see any time failure. We can quickly fix these, that should not be hard.

The fix involves introducing --contestation-period flag into the test cases and fixing the expected json for running options.

Now we are able to proceed further down the rabbit hole and we can actually see our validator failing which is great! We will be able to fix it now.

- When looking into the

stdPR, it seems that the normalnix-shell/nix-buildis not picking up the overlays correctly anymore. Trying to get the project built viahaskell.nixfrom theflake.nixto resolve it.

- We add a contestation-period CLI flag

- We use the contestation-period to open a head (instead of the API param)

- Use the contestation period from the environment instead of the API param

- populate the environment with the CLI flag value

Then we're stuck with some obscure ambiguous arbitrary definition for Natural :(

- Exporting confluence pages to markdown. The node module + pandoc worked, probably pandoc alone would be good enough: https://community.atlassian.com/t5/Confluence-questions/Does-anyone-know-of-a-way-to-export-from-confluence-to-MarkDown/qaq-p/921126

When updating my hydra node to use the unstable version, The CI building my beloved hydra_setup image failed.

Static binary for cardano-node is not available anymore. I opened the following issues to make dev portal and cardano-node maintainers to know about it:

- https://github.com/cardano-foundation/developer-portal/issues/871

- https://github.com/input-output-hk/cardano-node/issues/4688

In the meantime, I happened to still have a static binary for cardano-node 1.35.4 available locally on my machine 😅 so I decided to move forward with a locally built docker image straight on my hydra node.

The EC2 I use to run my cardano-node and hydra-node is deterministically crashing. I see a huge raise in CPU usage and then the machine becomes unreachable.

Looking at the logs:

- I don't see any log after 12:00 UTC eventhough the machine was still up until 12:54

- I see a shitload of cardano-node logs about PeerSelection around 11:48

- I also see super huge cardano-node log messages like this one:

Nov 29 11:48:47 ip-172-31-45-238 391b6ab80c0b[762]: [391b6ab8:cardano.node.InboundGovernor:Info:110] [2022-11-29 11:47:58.77 UTC] TrMuxErrored (ConnectionId {localAddress = 172.17.0.2:3001, remoteAddress = 89.160.112.162:2102}) (InvalidBlock (At (Block {blockPointSlot = SlotNo 2683396, blockPointHash = 1519d70f2ab7eaa26dc19473f3439e194cfc85d588f5186c0fa1f2671a6c26dc})) e005ad37cc9f148412f53d194c3dc63cf9cc5161f2b6de7eb5c00f9f85bd331e (ValidationError (ExtValidationErrorLedger (HardForkLedgerErrorFromEra S (S (S (S (S (Z (WrapLedgerErr {unwrapLedgerErr = BBodyError (BlockTransitionError [ShelleyInAlonzoPredFail (LedgersFailure (LedgerFailure (UtxowFailure (UtxoFailure (FromAlonzoUtxoFail (UtxosFailure (ValidationTagMismatch (IsValid True) (FailedUnexpectedly (PlutusFailure "\nThe 3 arg plutus script (PlutusScript PlutusV2 ScriptHash \"e4f24686d294de43ad630cdb95975aac9616f180bb919402286765f9\") fails.\nCekError An error has occurred: User error:\nThe machine terminated because of an error, either from a built-in function or from an explicit use of 'error'.\nCaused by: (verifyEcdsaSecp256k1Signature #0215630cfe3f332151459cf1960a498238d4de798214b426d4085be50ac454621f #dd043434f92e27ffe7c291ca4c03365efc100a14701872f417d818a06d99916f #b9c12204b299b3be0143552b2bd0f413f2e7b1bd54b7e9281be66a806853e72a387906b3fabeb897f82b04ef37417452556681e057a2bfaafd29eefa15e4c48e)\nThe protocol version is: ProtVer {pvMajor = 8, pvMinor = 0}\nThe data is: Constr 0 [B \"#`ZN\\182\\189\\134\\220\\247g,e%+\\239\\241\\DLE3\\156H\\249M\\255\\254\\254\\t\\146\\157A\\188j\\138\",I 0]\nThe redeemer is: Constr 0 [List [B \"\\185\\193\\\"\\EOT\\178\\153\\179\\190\\SOHCU++\\208\\244\\DC3\\242\\231\\177\\189T\\183\\233(\\ESC\\230j\\128hS\\231*8y\\ACK\\179\\250\\190\\184\\151\\248+\\EOT\\239\\&7AtRUf\\129\\224W\\162\\191\\170\\253)\\238\\250\\NAK\\228\\196\\142\",B \"j\\SOV\\184g\\255\\196x\\156\\201y\\131r?r2\\157p5f\\164\\139\\136t-\\SI\\145(\\228u2YE\\213\\&3o\\145\\232\\223\\&4\\209\\213\\151\\207q.\\SO \\192\\&9\\176\\195\\\\\\251\\&4GG\\238\\238\\249M\\213\\DC4\\CAN\",B \"m\\151Fz;\\151\\206\\&0\\155\\150\\STX\\185#\\177M\\234\\206X\\208\\231\\172\\222,}\\n\\131\\189qR\\229\\170\\157r\\255rl\\NAKe\\240J\\207\\SUB\\135\\225\\170\\188\\168O\\149\\173\\165\\132\\150a+Xpik\\181\\138{z>\",B \"\\DC4\\154\\181P\\DC2\\CANXd\\197_\\254|t\\240q\\230\\193t\\195h\\130\\181\\DC3|j&\\193\\239\\210)\\247d!rRVW\\219mY\\252\\SYNA\\130af+m\\169U\\US[%F^\\241\\DC2n\\132\\137_\\RS\\r\\185\",B \"|\\ESC\\239!\\162\\172\\\\\\180\\193'\\185\\220R\\242\\157ir\\246\\161\\&2\\177\\&6\\160\\166\\136\\220\\154<l}M\\222=\\212\\241\\245\\231\\FS(!\\251\\197\\194.~\\239\\146\\253n`$`\\229\\182\\208M\\164\\181\\US\\134z9\\172\\r\"],List [B \"\\STX\\NAKc\\f\\254?3!QE\\156\\241\\150\\nI\\130\\&8\\212\\222y\\130\\DC4\\180&\\212\\b[\\229\\n\\196Tb\\US\",B \"\\STXQ&\\EM\\255\\237\\163\\226\\213T8\\195\\240\\250KW\\159\\DLE\\147\\165\\162b\\169M\\237\\ACK\\131gB\\135!\\140\\ACK\",B \"\\STX\\130\\SUB\\240\\244\\171P\\181\\DC2\\STX\\213\\153\\224u\\STX\\r\\160/\\192\\UST\\224\\174\\NAK\\158z\\153\\RSl\\ESCwS\\243\",B \"\\STX\\156\\240\\128\\199\\158\\247k\\200R\\185\\136\\203a\\160\\DC1SA@J\\192\\146\\172\\224\\211\\233\\193g\\230R\\128\\183\\&3\",B \"\\ETX\\245{\\226\\\\-\\181\\208\\SYNsS\\154A\\ACK\\EOTG%\\171&\\217\\US\\202\\182\\&2\\139Y>\\DC2q\\156+\\189\\203\"],List [B \"\\STX\\233\\198Z\\134\\148\\223\\252%\\184\\DEL%\\152\\147\\156\\FS\\250\\203*\\187\\154\\185\\&8\\242\\250\\236\\136\\185Ik\\250j\\ACK\",B \"\\ETX!\\202\\ETX=U_\\234\\&0\\153\\229\\SIU8\\144\\255\\229\\196V=\\176`L<7/\\199\\142\\186\\NAK\\185\\238\\184\",B \"\\ETXN\\172S\\136\\255\\145%\\NAK@*X\\136)\\179\\252\\203\\184\\145\\ETX-\\DC3\\ENQ\\169\\190\\140\\227\\192\\\\\\189pR\\153\",B \"\\ETXW|%\\221U\\212\\ENQO\\DC4x\\232\\SUB+\\252\\162\\&4>\\166\\223tl/\\228\\138\\246n\\243\\158S\\145l\\255\",B \"\\ETX\\138\\250\\STXKv\\v\\USmQ[[\\ENQ\\169\\149\\150\\221\\255\\246\\205U\\SYN\\239\\145>\\245,\\SYN\\211\\132\\248\\174\\r\"],Constr 1 []]\nThe third data argument, does not decode to a context\nConstr 0 [Constr 0 [List [Constr 0 [Constr 0 [Constr 0 [B \"6\\204\\149\\151#cV\\247\\243\\CAN\\167\\195?1\\153\\ACK\\195Q\\234\\198\\171 \\169]\\ENQ\\197\\151\\233\\ACK\\150\\159\\234\"],I 0],Constr 0 [Constr 0 [Constr 0 [B \"\\v\\183\\239\\181\\209\\133\\NUL\\244\\n\\147\\155\\148\\243\\131Z\\133\\181ae$\\170Z-J\\167\\142\\GS\\ESC\"],Constr 1 []],Map [(B \"\",Map [(B \"\",I 9957062417)])],Constr 0 [],Constr 1 []]],Constr 0 [Constr 0 [Constr 0 [B \"6\\204\\149\\151#cV\\247\\243\\CAN\\167\\195?1\\153\\ACK\\195Q\\234\\198\\171 \\169]\\ENQ\\197\\151\\233\\ACK\\150\\159\\234\"],I 1],Constr 0 [Constr 0 [Constr 1 [B \"\\228\\242F\\134\\210\\148\\222C\\173c\\f\\219\\149\\151Z\\172\\150\\SYN\\241\\128\\187\\145\\148\\STX(ge\\249\"],Constr 1 []],Map [(B \"\",Map [(B \"\",I 1310240)]),(B \"\\SUB\\236\\166\\157\\235\\152\\253\\157y\\140YS)\\226\\DC4\\161\\&7E85\\161[0\\171\\193\\238\\147C\",Map [(B \"\",I 1)])],Constr 2 [Constr 0 [B \"#`ZN\\182\\189\\134\\220\\247g,e%+\\239\\241\\DLE3\\156H\\249M\\255\\254\\254\\t\\146\\157A\\188j\\138\",I 0]],Constr 1 []]]],List [],List [Constr 0 [Constr 0 [Constr 0 [B \"\\v\\183\\239\\181\\209\\133\\NUL\\244\\n\\147\\155\\148\\243\\131Z\\133\\181ae$\\170Z-J\\167\\142\\GS\\ESC\"],Constr 1 []],Map [(B \"\",Map [(B \"\",I 9956501143)])],Constr 0 [],Constr 1 []],Constr 0 [Constr 0 [Constr 1 [B \"\\228\\242F\\134\\210\\148\\222C\\173c\\f\\219\\149\\151Z\\172\\150\\SYN\\241\\128\\187\\145\\148\\STX(ge\\249\"],Constr 1 []],Map [(B \"\",Map [(B \"\",I 1310240)]),(B \"\\SUB\\236\\166\\157\\235\\152\\253\\157y\\140YS)\\226\\DC4\\161\\&7E85\\161[0\\171\\193\\238\\147C\",Map [(B \"\",I 1)])],Constr 2 [Constr 0 [B \"\\133J\\141\\196.|\\153M\\160\\SOH\\137\\183=k\\238\\130\\SYN4\\DEL\\NUL\\209\\US\\139\\SI\\211|\\132b\\NUL\\ETB\\fM\",I 1]],Constr 1 []]],Map [(B \"\",Map [(B \"\",I 561274)])],Map [(B \"\",Map [(B \"\",I 0)])],List [],Map [],Constr 0 [Constr 0 [Constr 0 [],Constr 1 []],Constr 0 [Constr 2 [],Constr 1 []]],List [],Map [(Constr 1 [Constr 0 [Constr 0 [B \"6\\204\\149\\151#cV\\247\\243\\CAN\\167\\195?1\\153\\ACK\\195Q\\234\\198\\171 \\169]\\ENQ\\197\\151\\233\\ACK\\150\\159\\234\"],I 1]],Constr 0 [List [B \"\\185\\193\\\"\\EOT\\178\\153\\179\\190\\SOHCU++\\208\\244\\DC3\\242\\231\\177\\189T\\183\\233(\\ESC\\230j\\128hS\\231*8y\\ACK\\179\\250\\190\\184\\151\\248+\\EOT\\239\\&7AtRUf\\129\\224W\\162\\191\\170\\253)\\238\\250\\NAK\\228\\196\\142\",B \"j\\SOV\\184g\\255\\196x\\156\\201y\\131r?r2\\157p5f\\164\\139\\136t-\\SI\\145(\\228u2YE\\213\\&3o\\145\\232\\223\\&4\\209\\213\\151\\207q.\\SO \\192\\&9\\176\\195\\\\\\251\\&4GG\\238\\238\\249M\\213\\DC4\\CAN\",B \"m\\151Fz;\\151\\206\\&0\\155\\150\\STX\\185#\\177M\\234\\206X\\208\\231\\172\\222,}\\n\\131\\189qR\\229\\170\\157r\\255rl\\NAKe\\240J\\207\\SUB\\135\\225\\170\\188\\168O\\149\\173\\165\\132\\150a+Xpik\\181\\138{z>\",B \"\\DC4\\154\\181P\\DC2\\CANXd\\197_\\254|t\\240q\\230\\193t\\195h\\130\\181\\DC3|j&\\193\\239\\210)\\247d!rRVW\\219mY\\252\\SYNA\\130af+m\\169U\\US[%F^\\241\\DC2n\\132\\137_\\RS\\r\\185\",B \"|\\ESC\\239!\\162\\172\\\\\\180\\193'\\185\\220R\\242\\157ir\\246\\161\\&2\\177\\&6\\160\\166\\136\\220\\154<l}M\\222=\\212\\241\\245\\231\\FS(!\\251\\197\\194.~\\239\\146\\253n`$`\\229\\182\\208M\\164\\181\\US\\134z9\\172\\r\"],List [B \"\\STX\\NAKc\\f\\254?3!QE\\156\\241\\150\\nI\\130\\&8\\212\\222y\\130\\DC4\\180&\\212\\b[\\229\\n\\196Tb\\US\",B \"\\STXQ&\\EM\\255\\237\\163\\226\\213T8\\195\\240\\250KW\\159\\DLE\\147\\165\\162b\\169M\\237\\ACK\\131gB\\135!\\140\\ACK\",B \"\\STX\\130\\SUB\\240\\244\\171P\\181\\DC2\\STX\\213\\153\\224u\\STX\\r\\160/\\192\\UST\\224\\174\\NAK\\158z\\153\\RSl\\ESCwS\\243\",B \"\\STX\\156\\240\\128\\199\\158\\247k\\200R\\185\\136\\203a\\160\\DC1SA@J\\192\\146\\172\\224\\211\\233\\193g\\230R\\128\\183\\&3\",B \"\\ETX\\245{\\226\\\\-\\181\\208\\SYNsS\\154A\\ACK\\EOTG%\\171&\\217\\US\\202\\182\\&2\\139Y>\\DC2q\\156+\\189\\203\"],List [B \"\\STX\\233\\198Z\\134\\148\\223\\252%\\184\\DEL%\\152\\147\\156\\FS\\250\\203*\\187\\154\\185\\&8\\242\\250\\236\\136\\185Ik\\250j\\ACK\",B \"\\ETX!\\202\\ETX=U_\\234\\&0\\153\\229\\SIU8\\144\\255\\229\\196V=\\176`L<7/\\199\\142\\186\\NAK\\185\\238\\184\",B \"\\ETXN\\172S\\136\\255\\145%\\NAK@*X\\136)\\179\\252\\203\\184\\145\\ETX-\\DC3\\ENQ\\169\\190\\140\\227\\192\\\\\\189pR\\153\",B \"\\ETXW|%\\221U\\212\\ENQO\\DC4x\\232\\SUB+\\252\\162\\&4>\\166\\223tl/\\228\\138\\246n\\243\\158S\\145l\\255\",B \"\\ETX\\138\\250\\STXKv\\v\\USmQ[[\\ENQ\\169\\149\\150\\221\\255\\246\\205U\\SYN\\239\\145>\\245,\\SYN\\211\\132\\248\\174\\r\"],Constr 1 []])],Map [],Constr 0 [B \"\\206\\221\\171\\244\\195c\\225t\\SUB]\\215tv\\a\\DC2\\169#\\158\\&8\\211\\132\\233\\181\\242e\\233\\n\\GS\\DC4\\140\\n\\190\"]],Constr 1 [Constr 0 [Constr 0 [B \"6\\204\\149\\151#cV\\247\\243\\CAN\\167\\195?1\\153\\ACK\\195Q\\234\\198\\171 \\169]\\ENQ\\197\\151\\233\\ACK\\150\\159\\234\"],I 1]]]\n" "hgCYrxoAAyNhGQMsAQEZA+gZAjsAARkD6BlecQQBGQPoGCAaAAHKdhko6wQZWdgYZBlZ2BhkGVnYGGQZWdgYZBlZ2BhkGVnYGGQYZBhkGVnYGGQZTFEYIBoAAqz6GCAZtVEEGgADYxUZAf8AARoAAVw1GCAaAAeXdRk29AQCGgAC/5QaAAbqeBjcAAEBGQPoGW/2BAIaAAO9CBoAA07FGD4BGgAQLg8ZMSoBGgADLoAZAaUBGgAC2ngZA+gZzwYBGgABOjQYIBmo8RggGQPoGCAaAAE6rAEZ4UMEGQPoChoAAwIZGJwBGgADAhkYnAEaAAMgfBkB2QEaAAMwABkB/wEZzPMYIBn9QBggGf/VGCAZWB4YIBlAsxggGgABKt8YIBoAAv+UGgAG6ngY3AABARoAAQ+SGS2nAAEZ6rsYIBoAAv+UGgAG6ngY3AABARoAAv+UGgAG6ngY3AABARoAEbIsGgAF/d4AAhoADFBOGXcSBBoAHWr2GgABQlsEGgAEDGYABAAaAAFPqxggGgADI2EZAywBARmg3hggGgADPXYYIBl59BggGX+4GCAZqV0YIBl99xggGZWqGCAaAiOszAoaAJBjuRkD/QoaAlFehBmAswqCGgAZ/L0aJ3JCMlkSa1kSaAEAADMjIzIjIzIjIzIjIyMjIyMjIyMjIyMjIyMjIyMjIyMjIyMjIyMjIyMjIyM1VQHSIjIyMjIjIyUzUzMAowFgCTMzVzRm4c1VzqgDpAAEZmZERCRmZgAgCgCABgBGagMuuNXQqAOZqAy641dCoAxmoDLrjV0KgCmagPOuNXQmrolAFIyYyA1M1c4BOBUBmZmauaM3DmqudUAJIAAjMiEjMAEAMAIyMjIyMjIyMjIyMjIyMzNXNGbhzVXOqAYkAARmZmZmZmZEREREREQkZmZmZmZmACAaAYAWAUASAQAOAMAKAIAGAEZqBOBQauhUAwzUCcCg1dCoBZmoE4FJq6FQCjM1UCt1ygVGroVAJMzVQK3XKBUauhUAgzUCcDA1dCoA5maqBWBi601dCoAxkZGRmZq5ozcOaq51QAkgACMyISMwAQAwAjIyMjMzVzRm4c1VzqgBJAAEZkQkZgAgBgBGagdutNXQqAEYHhq6E1dEoARGTGQJZmrnAPQQASRNVc8oAIm6oAE1dCoARkZGRmZq5ozcOaq51QAkgACMyISMwAQAwAjNQO3WmroVACMDw1dCauiUAIjJjIEszVzgHoIAJImqueUAETdUACauhNXRKAERkxkCOZq5wDkDwEUTVXPKACJuqABNXQqAKZqBO641dCoAhmaqBWBaQAJq6FQAzM1UCt1xAAmroVACMC81dCauiUAIjJjIEMzVzgGoHAIImrolABE1dEoAImrolABE1dEoAImrolABE1dEoAImrolABE1dEoAImrolABE1dEoAImqueUAETdUACauhUAIwHzV0Jq6JQAiMmMgNTNXOATgVAZiBSJqAYkkDUFQ1ABNVc8oAIm6oAETV0SgAiauiUAETVXPKACJuqABM1VQHSMiIjIyUzVTNTM1c0ZuHNTUAciIAIiMzARNVAEIiIAMAIAFIAICwCsQLBM1c4kk6ZXJyb3IgJ21rVXBkYXRlQ29tbWl0dGVlSGFzaFZhbGlkYXRvcic6IG91dHB1dCBtaXNzaW5nIE5GVAACsVM1UzUyMjIyUzUzNVMA8SABMjNQEyIzNQAyIAIAIAE1ABIgARIzABIlM1ACEAEQMgMSABMwLgBFM1AEE1ANSQEDUFQ5ACIQARMzNVMAwSABIjM1c0Zm7QAIAQAEDIDEAMAQAEQLzUAciIgBDcobszI1ABIiIzdKkAAZq6A1AEIiIjN0qQABmroDdQAKZq6A3UgCGaugNQAyIzdKkAAZq6AzdKkAAZq6A3UgBG7ED4zV0BuoABN2IHxmroDdQAEZq6A3UAAm7EDwzV0BunMDQAMzV0CmagBEJm6VIAAzV0BupABN2IHAmbpUgAjdiBuZq6A3UAAm7EDczMyIiEjMzABAFAEADACNVAIIiIiEjMzMAEAcAYAQAMAI1AGIiIAI1AGIiIAE1UAMiABM3AGbgzNwRkZmaqYBokACZGagIkRmagJABgAgBGoB4AJmoCBERgBmAEACQAJEZuAABSACABSAANQBSIiADNVAHIiIiACNVAHIiIiABSACM1UwFBIAEgATUAQiIgAxAsEzVziSAUNlcnJvciAnbWtVcGRhdGVDb21taXR0ZWVIYXNoVmFsaWRhdG9yJzogY29tbWl0dGVlIHNpZ25hdHVyZSBpbnZhbGlkAAKxUzVTNTIyMzVzRm483KGZqpgJiQALigFDNVMBISABIAEAIAEC4C01AGIgAjUAQiIgAxAsEzVziSFCZXJyb3IgJ21rVXBkYXRlQ29tbWl0dGVlSGFzaFZhbGlkYXRvcic6IGN1cnJlbnQgY29tbWl0dGVlIG1pc21hdGNoAAKxUzVTNVM1NQBCIiABIyEwATU1AFIgAiIiIiIiIgCzIAE1UDIiUzUAEQLiIVM1MzVzRm4kzMBQ1NQAiIAEiIgAzUAwiIAEAVIAADADEQMRMAQAEQLBAsEzVziSAVNlcnJvciAnbWtVcGRhdGVDb21taXR0ZWVIYXNoVmFsaWRhdG9yJzogbWlzc2luZyByZWZlcmVuY2UgaW5wdXQgdG8gbGFzdCBtZXJrbGUgcm9vdAACsVM1UzUzNXNGbjzVQASIAI3KGZqpgIiQALigEjNVMBASABIAE1AEIiIAICwCsQLBM1c4kgFIZXJyb3IgJ21rVXBkYXRlQ29tbWl0dGVlSGFzaFZhbGlkYXRvcic6IGV4cGVjdGVkIGRpZmZlcmVudCBuZXcgY29tbWl0dGVlAAKxUzUzNXNGbiDUAUiABNVABIgAQLAKxAsEzVziSVJlcnJvciAnbWtVcGRhdGVDb21taXR0ZWVIYXNoVmFsaWRhdG9yJzogc2lkZWNoYWluIGVwb2NoIGlzIG5vdCBzdHJpY3RseSBpbmNyZWFzaW5nAAKxArECsQKxArECsVMzU1UAEiIgAhMmMgMDNXOJIUZlcnJvciAnbWtVcGRhdGVDb21taXR0ZWVIYXNoVmFsaWRhdG9yJzogbm8gb3V0cHV0IGlubGluZSBkYXR1bSBtaXNzaW5nAAJSEwEwASEyYyAxM1c4kgFGZXJyb3IgJ21rVXBkYXRlQ29tbWl0dGVlSGFzaFZhbGlkYXRvcic6IG5vIG91dHB1dCBpbmxpbmUgZGF0dW0gbWlzc2luZwACYVM1UzU1ABIjUAIiIiIiIiIzM1ANJQNSUDUlA1IzNVMBgSABM1AbIlM1ACIQAxABUDUjUAEiUzVTNTM1c0ZuPNQAiIAI1AEIgAgPAOxMzVzRm4c1ACIgATUAQiABA8A7EDsTUDkAMVA4ANITUAEiNQASIiNQCCI1ACIiIiIiIiMzVTAkEgASI1ACIiJTNTUBgiNQBiIyM1AFIzUAQlM1MzVzRm48AIAEEwEsVADEEsgSyM1AEIEslM1MzVzRm48AIAEEwEsVADEEsVM1ADIVM1ACITNQAiM1ACIzUAIjNQAiMwLQAgASBOIzUAIgTiMwLQAgASIgTiIjNQBCBOIiUzUzNXNGbhwBgAwUQUBUzUzNXNGbhwBQAgUQUBMzVzRm4cAQAEFEFAQUBBQEEkVM1ABIQSRBJEzUEcAYAUQBVBCAKEyYyAvM1c4kgQJMZgACQTAjSYiFTNQARACIhMCdJhNQASIgAyMjIyMzNXNGbhzVXOqAGkAARmZEQkZmACAIAGAEZGRkZGRkZGZmrmjNw5qrnVAGSAAIzMzMiIiISMzMzABAHAGAFAEADACN1pq6FQBjdcauhUAUyMjIzM1c0ZuHUAFIAAjUDMwJjV0JqrnlADIzM1c0ZuHUAJIAIlAzIyYyA8M1c4BcBiB0ByJqrnVABE3VAAmroVAEMCI1dCoAZutNXQqAEbrTV0Jq6JQAiMmMgNzNXOAUgWAaiauiUAETV0SgAiauiUAETV0SgAiaq55QARN1QAJq6FQAzIyMjMzVzRm4c1VzqgBJAAEZqoFJuuNXQqAEbrjV0Jq6JQAiMmMgMzNXOASgUAYiaq55QARN1QAJq6FQAjdcauhNXRKAERkxkBeZq5wCECQC0TV0SgAiaq55QARN1QAJkACaqBSRCJGRESmamZq5ozcSAGkAABWBUIFYqZqAEIFREKmamYAwAQAZCZmaqYBQkACAQZuBAGSACACABEzM1UwCRIAEAcAUAEAMyABNVAsIiUzUAEVAmIhUzUzAGAEACE1ApABFTNTMAUAQAEhNQKjNQLwAwARUCgSMmMgKTNXOAAgPERmauaM3HgBAAgSARmQAJqoExEIkRKZqACJqAMAGRCZmoBIApgCABGZqpgDiQAIAoAgAIkagAkQAIkagAkQAQkQkZgAgBgBERGRkYAIApkACaqBMRGagApAAERqAERKZqZmrmjNx4AQBIE4EwmAOACJgDABmQAJqoEpEZqACkAARGoAREpmpmauaM3HgBADgTASiACJgDABmQAJqoEJEIkSmagAioEZEJmoEhgCABGaqYAwkACAIACZAAmqgQEQiREpmoAIgBEQmYAoARmaqYA4kACAKAIACkQEAIyMjMzVzRm4c1VzqgBJAAEZkQkZgAgBgBG641dCoARutNXQmrolACIyYyAiM1c4AoAuBAJqrnlABE3VAAmQAJqoDpEIkSmagAioD5EJmoEBgCABGaqYAwkACAIACJGRGAEbrAATIAE1UB0iMzNVc+ACSgPEZqA6YAhq6EAIwAzV0QAQChGRkZmauaM3DmqudUAJIAAjMiEjMAEAMAIwCjV0KgBGAKauhNXRKAERkxkA+Zq5wBEBQB0TVXPKACJuqABIyMjIyMzNXNGbhzVXOqAIkAARmZkREJGZmACAKAIAGAEZGRkZmauaM3DmqudUAJIAAjMiEjMAEAMAIwEzV0KgBGagGgJGroTV0SgBEZMZASGaucAWAZAiE1VzygAibqgATV0KgCGZqoBDrlAHNXQqAGZGRkZmauaM3DqACkAIRkJERgBACGroTVXPKAGRmZq5ozcOoASQARGQkRGACAIbrjV0JqrnlAEIzM1c0ZuHUANIAAhIiADIyYyAmM1c4AwA2BIBGBEJqrnVABE3VAAmroVACM1AJdcauhNXRKAERkxkBAZq5wBIBUB4TV0SgAiauiUAETVXPKACJuqABEzVQAXXOtESIyIwAjdWACZAAmqgNERkZmaq58AIlAcIzUBszVQFDAGNVc6oARgCmqueUAIwBDV0QAYCQmroQARIjIyMzNXNGbh1ABSAAI1AUMAU1dCaq55QAyMzNXNGbh1ACSACJQFCMmMgHTNXOAHgJANgNCaq51QARN1QAJGRkZmauaM3DqACkAMRkJERGAIAKYA5q6E1VzygBkZmauaM3DqAEkAIRkJERGAEAKYBJq6E1VzygCEZmauaM3DqAGkAERkJERGACAKYA5q6E1VzygCkZmauaM3DqAIkAARkJERGAGAKbrjV0JqrnlAGIyYyAdM1c4AeAkA2A0AyAwJqrnVABE3VAAkZGRmZq5ozcOaq51QAkgACMyISMwAQAwAjAFNXQqAEbrTV0Jq6JQAiMmMgGTNXOAFgHALiaq55QARN1QAJGRmZq5ozcOaq51QAUgACN1xq6E1VzygBEZMZALmaucAJAMAVE3VAAkZGRkZGRmZq5ozcOoAKQBhCREREQAZGZmrmjNw6gBJAFEJERERACEZmauaM3DqAGkAQRmRCRERERmACASAQbrjV0KgCm601dCauiUAUjMzVzRm4dQBEgBiMyISIiIiMwAgCQCDdcauhUAc3XGroTV0SgDkZmauaM3DqAKkAIRmRCRERERmAMASAQYBhq6FQCTdcauhNXRKASRmZq5ozcOoAyQARGQkREREYA4BBgGmroTVXPKAWRmZq5ozcOoA6QABGQkREREYAoBBgHGroTVXPKAYRkxkBAZq5wBIBUB4B0BwBsBoBkBgTVXOqAIJqrnlADE1VzygBCaq55QARN1QAJGRkZGRmZq5ozcOoAKQARGZkRCRGZgAgCgCABm601dCoAhutNXQqAGbrTV0Jq6JQAyMzNXNGbh1ACSAAIyEiMAIAMwCDV0JqrnlAGIyYyAZM1c4AWAcAuAsJqrnVADE1dEoAImqueUAETdUACRkZGZmrmjNw6gApABEZCRGACAGbrjV0JqrnlADIzM1c0ZuHUAJIAAjISIwAgAzdcauhNVc8oAhGTGQCxmrnACACwFAExNVc6oAIm6oAESIyMjMzVzRm4dQAUgBCEiIAEjMzVzRm4dQAkgAiMhIiMAMAQwBjV0JqrnlAEIzM1c0ZuHUANIAAhIiACIyYyAXM1c4ASAYAqAoAmJqrnVABE3VAAkZGZmrmjNw6gApABEAaRmZq5ozcOoASQABAGkZMZAJmaucAFAIARAQE1VzpuqABSQQNQVDEAERIiMAMwAgASMmMgDTNXOJIBJUV4cGVjdGVkIGV4YWN0bHkgb25lIGNvbW1pdHRlZSBvdXRwdXQAACEgAREiEjMAEAMAISEiMAIAMRIgATIAE1UAciJTNQAhUAgiFTNQAxUAoiEzUAszNXNGbkABAAgCQCDMAcAMAESIAISIAEyABNVAEIlM1ABE3YgCkQmaugN1IARgCAAiJEAEJEJEZgAgCABpMIkZGACACRGYAZgBABAApgF/2Hmf2HmfGEVDESIz2HqA2Hmf2HmfWCBrdZT7nC/4EkUs+7EOrsuWChE8N2qb3C9sZ+o5qXjiR/8A/wID/9h5n1gcGuymneuY/Z15jFlTKeIUoTdFODWhWzCrwe6TQ0D/WBy/ssK/S7RpbYefOsnY1xmkkrJQVRp3OZIPhwzR/wABn9h5n1ggI2BaTra9htz3ZyxlJSvv8RAznEj5Tf/+/gmSnUG8aooA/9h5n59YQLnBIgSymbO+AUNVKyvQ9BPy57G9VLfpKBvmaoBoU+cqOHkGs/q+uJf4KwTvN0F0UlVmgeBXor+q/Snu+hXkxI5YQGoOVrhn/8R4nMl5g3I/cjKdcDVmpIuIdC0PkSjkdTJZRdUzb5Ho3zTR1ZfPcS4OIMA5sMNc+zRHR+7u+U3VFBhYQG2XRno7l84wm5YCuSOxTerOWNDnrN4sfQqDvXFS5aqdcv9ybBVl8ErPGofhqryoT5WtpYSWYStYcGlrtYp7ej5YQBSatVASGFhkxV/+fHTwcebBdMNogrUTfGomwe/SKfdkIXJSVlfbbVn8FkGCYWYrbalVH1slRl7xEm6EiV8eDblYQHwb7yGirFy0wSe53FLynWly9qEysTagpojcmjxsfU3ePdTx9eccKCH7xcIufu+S/W5gJGDlttBNpLUfhno5rA3/n1ghAhVjDP4/MyFRRZzxlgpJgjjU3nmCFLQm1Ahb5

I know they're had been a preview upgrade, maybe I'm not using the last version of cardano-node. Let's update that first.

Upgrading cardano-node from 1.35.4-rc2 to 1.35.4 fixed the problem but I also deleted the cardano db and started a full sync for the preview network.

My hydra-node wanted to start while the cardano node was still synchronizing and I got errors about the hydra scripts not being published yet, which is fair:

Nov 30 09:19:34 ip-172-31-45-238 ae0d18945430[703]: hydra-node: MissingScript {scriptName = "\957Initial", scriptHash = "8d73f125395466f1d68570447e4f4b87cd633c6728f3802b2dcfca20", discoveredScripts = fromList []}

So then, I waited a bit before restarting my hydra-node. At that point, the hydra scripts were known but my cardano-node was not yet still 100% synchronized. Then my hydra-node started exibiting some strange behaviors as it stumbled upon old head initialization transactions on-chain and became stuck on waiting for this old head to be initialized.

That's interesting. That's fair that the node observe an old head being opened as there is no way for it to know it's old. Then, maybe it was an old head that we left open and just forgot about during the tests.

What I did is I just waited for the cardano-node to be fully synchronized and restarted my hydra-node (has to erase its persistent state before so it would only look at the last transactions on chain).

AB on #410

🎉 Finally got to the bottom of things: Turns out the culprit was our genPayment function and more precisely the use of suchThat combinator from QuickCheck which retries a generator if some predicate is false. To find the issue I have added traces to quickcheck-dynamic functions in order to zoom in on the function, retrying with the same seed over and over until I can locate the function livelocking.

Created PR.

Things to do, assuming we merge this PR, in order to :

- Actually validate L1 transactions against a ledger hosted by the mock chain, so that the

ModelSpecproperties can actually run contracts' code - Run clients in multiple threads in order to emulate more faithfully what happens IRL

AB on #410

So the problem definitely lies in the way we are looking for a UTxO when either we want to create a new payment or when we wait for the tx to be observed later on. I have tried to rewrite the waitForUTxOToSpend function and it fails/deadlocks earlier, at a new payment. So it's really surprising it succeeds to post tx in the synchronous case but fails to observe later on the Utxo

- Putting back the blocking

waitForNextcall in thewaitForUTxOToSpendfunction

It now blocks again while waiting to observe the last transaction posted.

- Adding a

timeoutaroundwaitForNextto ensure we retryGetUTxO, I now have a deadlock when posting andAbortTx:(

It's possible the arbitrary TimeHandle generated produces some invalid value that raises an exception?

- Adding a trace after we query

currentPointInTimeto see if we can always post a tx - Normally it should raise an exception if there is a problem but does it?

- The postTx happens so it seems blocked after that...

Is it possible some exception never make its way up the stack and a thread gets stuck?

Using seed 889788874 to ensure I retest the same trace as there apparently are 2 problems:

- when waiting to observe the last posted tx

- when aborting

So it seems the node is receiving correctly the response after retrieving a few unrelated outputs

- But then the test hangs, and I don't see the trace saying the utxo has been observed. Trying to increase the timeout to see if that changes anything, to no avail

- Could this have to do with some RTS issue? Or am I not observing what I think I am observing, eg. some traces get swallowed and never output?

Adding a trace to check whether the UTxO is really found or not:

- So the UTxO is found! What should happen next is a trace about

observed ...but it's not. - Oddly enough this trace is also not shown for the previous node so perhaps this is just an artifact of Haskell's lazy IO?

Trying to get some low-level (OS thread sampling) information to see if it helps:

- Going to try https://www.brendangregg.com/blog/2014-06-22/perf-cpu-sample.html

- Got a sample of the process on Mac OS usign the

Activity Monitor. - I tried to use

perfbut it requiressudowhich breakscabalbecause now all the paths are wrong. I should of course switch the user after runningsudobut I was lazy and thought it would work just as fine on a Mac. - There's really nothing that stands out in the process dump: Threads are waiting, and that's it.

Next attempt: Getting a dump of IOSim events' trace

- It does not show anything significant: The

mainthread is stopped but some threads appear to be blocked?

Adding labels to some TVar and TQueue we are using here and there, and it appears the nodes are blocked on the event-queue. Could it just be the case that the node's threads keep running forever because they are not linked to the main thread? And perhaps I am lucky in other test runs?

- Ensuring all the node's threads are also

cancelled does not solve the issue, but the trace is more explicit at least

All threads now properly terminated:

Time 380.1s - ThreadId [1] chain - EventThrow AsyncCancelled

Time 380.1s - ThreadId [1] chain - EventMask MaskedInterruptible

Time 380.1s - ThreadId [1] chain - EventMask MaskedInterruptible

Time 380.1s - ThreadId [1] chain - EventDeschedule Interruptable

Time 380.1s - ThreadId [1] chain - EventTxCommitted [Labelled (TVarId 2) (Just "async-ThreadId [1]")] [] Nothing

Time 380.1s - ThreadId [] main - EventTxWakeup [Labelled (TVarId 2) (Just "async-ThreadId [1]")]

Time 380.1s - ThreadId [1] chain - EventUnblocked [ThreadId []]

Time 380.1s - ThreadId [1] chain - EventDeschedule Yield

Time 380.1s - ThreadId [] main - EventTxCommitted [] [] Nothing

Time 380.1s - ThreadId [] main - EventUnblocked []

Time 380.1s - ThreadId [] main - EventDeschedule Yield

Time 380.1s - ThreadId [1] chain - EventThreadFinished

Time 380.1s - ThreadId [1] chain - EventDeschedule Terminated

Time 380.1s - ThreadId [] main - EventThrowTo AsyncCancelled (ThreadId [2])

Time 380.1s - ThreadId [] main - EventTxBlocked [Labelled (TVarId 11) (Just "async-ThreadId [2]")] Nothing

Time 380.1s - ThreadId [] main - EventDeschedule Blocked

Time 380.1s - ThreadId [2] node-17f477f5 - EventThrow AsyncCancelled

Time 380.1s - ThreadId [2] node-17f477f5 - EventMask MaskedInterruptible

Time 380.1s - ThreadId [2] node-17f477f5 - EventMask MaskedInterruptible

Time 380.1s - ThreadId [2] node-17f477f5 - EventDeschedule Interruptable

Time 380.1s - ThreadId [2] node-17f477f5 - EventTxCommitted [Labelled (TVarId 11) (Just "async-ThreadId [2]")] [] Nothing

Time 380.1s - ThreadId [] main - EventTxWakeup [Labelled (TVarId 11) (Just "async-ThreadId [2]")]

Time 380.1s - ThreadId [2] node-17f477f5 - EventUnblocked [ThreadId []]

Time 380.1s - ThreadId [2] node-17f477f5 - EventDeschedule Yield

Time 380.1s - ThreadId [] main - EventTxCommitted [] [] Nothing

Time 380.1s - ThreadId [] main - EventUnblocked []

Time 380.1s - ThreadId [] main - EventDeschedule Yield

Time 380.1s - ThreadId [2] node-17f477f5 - EventThreadFinished

Time 380.1s - ThreadId [2] node-17f477f5 - EventDeschedule Terminated

Time 380.1s - ThreadId [] main - EventThrowTo AsyncCancelled (ThreadId [3])

Time 380.1s - ThreadId [] main - EventTxBlocked [Labelled (TVarId 18) (Just "async-ThreadId [3]")] Nothing

Time 380.1s - ThreadId [] main - EventDeschedule Blocked

Time 380.1s - ThreadId [3] node-ae3f4619 - EventThrow AsyncCancelled

Time 380.1s - ThreadId [3] node-ae3f4619 - EventMask MaskedInterruptible

Time 380.1s - ThreadId [3] node-ae3f4619 - EventMask MaskedInterruptible

Time 380.1s - ThreadId [3] node-ae3f4619 - EventDeschedule Interruptable

Time 380.1s - ThreadId [3] node-ae3f4619 - EventTxCommitted [Labelled (TVarId 18) (Just "async-ThreadId [3]")] [] Nothing

Time 380.1s - ThreadId [] main - EventTxWakeup [Labelled (TVarId 18) (Just "async-ThreadId [3]")]

Time 380.1s - ThreadId [3] node-ae3f4619 - EventUnblocked [ThreadId []]

Time 380.1s - ThreadId [3] node-ae3f4619 - EventDeschedule Yield

Time 380.1s - ThreadId [] main - EventTxCommitted [] [] Nothing

Time 380.1s - ThreadId [] main - EventUnblocked []

Time 380.1s - ThreadId [] main - EventDeschedule Yield

Time 380.1s - ThreadId [3] node-ae3f4619 - EventThreadFinished

Time 380.1s - ThreadId [3] node-ae3f4619 - EventDeschedule Terminated

Time 380.1s - ThreadId [] main - EventThrowTo AsyncCancelled (ThreadId [4])

Time 380.1s - ThreadId [] main - EventTxBlocked [Labelled (TVarId 25) (Just "async-ThreadId [4]")] Nothing

Time 380.1s - ThreadId [] main - EventDeschedule Blocked

Time 380.1s - ThreadId [4] node-94455e3e - EventThrow AsyncCancelled

Time 380.1s - ThreadId [4] node-94455e3e - EventMask MaskedInterruptible

Time 380.1s - ThreadId [4] node-94455e3e - EventMask MaskedInterruptible

Time 380.1s - ThreadId [4] node-94455e3e - EventDeschedule Interruptable

Time 380.1s - ThreadId [4] node-94455e3e - EventTxCommitted [Labelled (TVarId 25) (Just "async-ThreadId [4]")] [] Nothing

Time 380.1s - ThreadId [] main - EventTxWakeup [Labelled (TVarId 25) (Just "async-ThreadId [4]")]

Time 380.1s - ThreadId [4] node-94455e3e - EventUnblocked [ThreadId []]

Time 380.1s - ThreadId [4] node-94455e3e - EventDeschedule Yield

Time 380.1s - ThreadId [] main - EventTxCommitted [] [] Nothing

Time 380.1s - ThreadId [] main - EventUnblocked []

Time 380.1s - ThreadId [] main - EventDeschedule Yield

Time 380.1s - ThreadId [4] node-94455e3e - EventThreadFinished

Time 380.1s - ThreadId [4] node-94455e3e - EventDeschedule Terminated

Time 380.1s - ThreadId [] main - EventThreadFinished

yet test still blocks...

- Try to figure out

AcquirePointNotOnChaincrashes. Looking at logs from @pgrange - cardano-node logged

Switched to a fork, new tip, looking for that in the logs - Seems like the consensus does update it’s “followers” of a switch to a fork in

Follower.switchFork. Not really learning much here though. Likely we will see a rollback to the intersection point. Let’s see where we acquire in thehydra-node. (seeouroboros-consensus/src/Ouroboros/Consensus/Storage/ChainDB/Impl/Follower.hs) - Try to move the traces for roll forward / backward even closer into the chain sync handler.

- It’s not clear whether we get a rollback or forward with the

AcquirePointNotOnChainproblem. Switching focus to tackle theAcquirePointTooOldproblem. -

AcquireFailurePointTooOldis returned when requestedpoint < immutablePoint. What isimmutablePoint? (seeouroboros-consensus/src/Ouroboros/Consensus/MiniProtocol/LocalStateQuery/Server.hs) - It’s the

anchorPointof the chain db (seeouroboros-consensus/src/Ouroboros/Consensus/Network/NodeToClient.hs,castPoint . AF.anchorPoint <$> ChainDB.getCurrentChain getChainDB) - Can’t reproduce the

AcquireFailedPointTooOld:( - Seems like the devnet is not writing to

db/immutable, but ony todb/volatile. Because blocks are empty? - Wallet initialization is definitely problematic. How can we efficiently initialize it without trying to

Acquirethe possibly far in the pastChainPoint? - Finally something I can fix: by not querying the tip in the wallet we see an

IntersectionNotFounderror when starting the direct chain on an unknown point. This is what we want!

- Discussing seen / confirmed ledger situation https://github.com/input-output-hk/hydra-poc/issues/612

- Not fully stuck: other transactions could proceed

- ModelSpec would catch this quite easily with a non-perfect model

- Why do we keep a local / seen ledger in the first place (if we wait for finaly / confirmed transactions anyways)?

- We walk through our ledger state update diagrams on miro

- Weird situation:

- Use the confirmed ledger to validate NewTx and also answer GetUTxO

- Use & update the seen ledger when we do validate ReqTx

- The simple without conflict resolution is incomplete in this regards (of course)

- With conflict resolution, we would update the seen ledger with the actually snapshotted ledger state

- We still need a seen state

- To know which transactions can be requested as snapshot

- At least on the next snapshot leader

- But then this would be more complicated

- We drew an updated trace of ledger states and messages in oure miro: https://miro.com/app/board/o9J_lRyWcSY=/?moveToWidget=3458764538771279527&cot=14

- We really need to get a model up, which could produce counter examples like the one we drew

Adding a test to check that all the clients receive all the events after a restart

- the test fail

- because history is stored on disk in the reverse order as how it stored in memory

- fixing that

- Moving

ChainContextout ofChainState: Makes state smaller and it’s all configured anyways. (Later goal: state is just some UTxOs) -

genChainStateWithTxis a bit tricky.. if we want to remove the context fromChainStatewe need to use big tuples and lots of passing around. - The block generation in

HandlersSpecis indicating nicely how contexts / states can be treated separately and maybe merged later?

stepCommits ::

-- | The init transaction

Tx ->

[ChainContext] ->

StateT [Block] Gen [InitialState]- Even after making all

hydra-nodetests pass, I gothydra-clustererrors indicating that theWalletdoes not know UTxOs. Maybe our test coverage is lacking there (now)?

{..., "reason":"ErrTranslationError (TranslationLogicMissingInput (TxIn (TxId {_unTxId = SafeHash \"12f387e5b49b3c86af215ee1eef5a25b2e31699c0f629d161219d5975819f0ac\"}) (TxIx 1)))","tag":"InternalWalletError"}

AB on #410

- Working on making a (dummy) block out of a single ledger tx, as we do in the

WalletSpec, Managed to have theModelrun, connecting all the parts together along with a wallet and dummy state

I can now run ModelSpec with direct chain wired in but it deadlocks on the first commit, I need the logs but I don't have them if I timeout using the within function from QC.

-

Suggestion from Ulf: Add an internal "kill switch", something like a thread with a timeout when seeding?

-

Added a tracer that uses

Debug.trace... -> Got a first answer: Seems like theTickthreaded is flooding the event queuesGot an error trying to post a transaction:

postTxError = NoSeedInputwhich obviously makes sense! Need to provide the seed input from the wallet?

- Upgrade with last version 0.8.0

- Manage persistent storage

- Restart the node

- Had to deal with the preview respin

- Upgrade cardano node

- whipe cardano database

- Upgrade node configuration

- configuration was not fully synced

- re-publish the scripts (had to wait for preview upgrade)

- re-send funds to my node

- mark funds as fuel

- Had to deal with the preview respin

AB on #410

Trying to tie up the knot in the Model to dispatch transactions to the nodes

- My idea was to

flushTQueuefor all txs in between 2 ticks and put them all in a block but this function has only been recently added to theio-simpckalge: https://github.com/input-output-hk/io-sim/commit/051d04f1fb1823837c2600abe1a739c7f8dbb1bc - going to do something much more more simple and simply put each transaction in one block

-

Properties / proofs in updated HeadV1 spec

- maybe not all needed, definitely need to redo them

- would be good to have them to refer from code / tests

- we will start work as the current state of the spec is good -> done by 30th of Nov

-

React on mainchain (L1) protocol changes

- should: not only react, but involve in changes on early stages

- in general: not expect drastic changes, rather gradual

- besides hard-forks and protocol updates: desaster recovery plan exists, but highly unlikely and special handling required

- also: changes to the cardano-node / interfaces (similar to #191)

- make dependencies clear upstream -> as an artifact

-

Hard-forks:

- definition: incompatible changes in cardano concensus / ledger rules

- scope is case-by-case, not necessarily tx format changes

- usually backward compatible, but not necessarily

- not as often, likely maintenance required always

- Next: SECP-features

- Concrete artifact: write-up process (also mention rough 6 months lead time)

-

Protocol parameters:

- to balance block production time budgets (sizes, limits, cost models), e.g.

maxTxExecutionUnits - security paramaters to keep ledger state memory in check, e.g.

utxoCostPerByte - sizes likely never decrease again (many applications depend on them)

- collateral should be fine

- cost models updates are tricky -> they will affect costs / possibility of spending script UTxOs

- Idea: just identify if L1 params are more restrictive than L2 and inform user on changes

- to balance block production time budgets (sizes, limits, cost models), e.g.

Going through the code to gain more domain knowledge. The code is written pretty nicely, I can follow along everything I see so it is just a matter of learning how things operate together. Miro graphs are quite helpful there too. I started from a Chain module in hydra-node since this is something I had mostly seen before. One type alias is maybe not needed but I decided not to touch it for now

type ChainCallback tx m = ChainEvent tx -> m ()

Reading through I though that the chain handle should reflect that in the type name so decided to

change the Chain to ChainHandle. In general seeing a lot of words like chain throws me off a bit

since I am a visual guy.

In order to learn more about how things work I am going from the Main module and working my way down

in order to understand the flows. Sometimes I get confused when we use the work callback many times when talking

about different code. In the chain scenario and withChain/withDirectChain functions the callback means we just put some chain event

into the queue. Quite simple. The action here is that we want to post a tx on-chain where on-chain is the L1 chain.

This action is the actual content of our friend ChainHandle, namely postTx which is there - you guessed it - to post a tx

on a L1 chain (and perhaps fail and throw since it has a MonadThrow constraint).

We define what kind of txs can the ChainHandle post in PostChainTx type which is also defined in the Chain.hs module.

These can be any of Init, Commit, Close, Contest, Fanout and the last but not the least CollectCom. Of course this is all

inline with what I read in the paper.

Ok going back to the withDirectChain - jumping to the Direct.hs module.

withDirectChain takes in many params and one of the capabilities is that we are able to run the main chain from pre-defined

ChainPoint (defined in cardano api) which is neat.

Here is also where we construct our internal wallet TinyWallet whose address is derived from our loaded verification key.

I am tempted to rename the TinyWallet to TinyWalletHandle but I know that the plan is to allow using external wallets so

I will leave it be since it will go away soon anyway. This wallet has capabilities to get its utxos, sign txs and apparently

manage its own internal state which is just a set of wallet utxos. We can update this utxo set when we apply a new block which

contains a tx that modifies our wallet utxo set. I think I'll know more about when exactly this happens after going through a bit

more code.

Ok digging further I discover that we actually use the chain sync ouroboros protocol to tie in this wallet update on a new block.

I should mention we are interested in roll forward and backward which is defined in the appropriate ChainSyncHandler

Maybe I shouldn't get into details but this is how I memorize the best.

Trying to make sense of Hydra V1 specification document, seems weird to me this whole discussion happens in Word!

Here is an attempt at formalising in Haskell one of the validators, namely initial. There are lot of things to define of course but it's possible to massage the code in such a way that it looks very close to what's in the paper...

initial ("context") = do

-- First option: spent by Commit Tx

(pid, val, pkh, val', nu', pid', eta', oMega) <- parse $ do

pid <- omega_ctx & delta

val <- omega_ctx & value

pkh <- [pkh | (p, pkh, 1) <- val, pid == p] !! 1

[(val', nu', (pid', eta'))] <- o !! 1

ci <- rho_ctx -- List of outRefs pointing to committed inputs

-- Needed to tell committed inputs apart from fee inputs

-- Omega is a list of all committed (val,nu,delta)

-- The ordering in Omega corresponds to the ordering in CI

-- (which is assumed implicitly in the next line)

oMega <- [omega | (i_j, omega) <- i, (i_j & outRef) `elem` ci]

return (pid, val, pkh, val', nu', pid', eta', oMega)

check

[ -- PT is there

(pid, pkh, 1) `elem` val'

, -- Committed value in output

val' - (pid, pkh, 1) == val + sum [omega & value | omega <- oMega]

, -- Correct script

nu' == nu_commit

, -- Correct PID

pid' == pid

, -- Committed UTxOs recorded correctly

eta' == recordOutputs (oMega)

, -- Authorization

pks == [pkh]

, -- No forge/burn

mint == 0

]Just thinking this might be even closer in Python

Going through various comments in the paper:

- Kept the idea of having

Ibeing the set of all inputs but the one providing context for the validator, even though it's not technically always the case we do implement this separation - Accepted most comments from SN

- There's no need to check burning in the minting policy as it's checked in the Abort and Fanout

- we dropped the subscript

_SMonI_SMandO_SMbecause they appeared confusing, expressing the opposite of what other similarly subscripted symbols meant - We had an inconclusive discussion over the ordering of committed UTxO: Sandro has written the

ν_initialvalidator for hte general case of multiple UTxO committed off-chain, which means thecollectComwill generate a hash of list of (ordered) UTxO hashes, not of the list of actual UTxO. This might be a problem and a gap to address.

- Debugging EndToEnd: I see a

ToPostforCollectComTx, but anOnChainEventofOnCommitTxafter it. Does it try to collect too early? Is there a state update "race" condition? - Helpful jq filter:

jq 'del(.message.node.event.chainEvent.newChainState.chainState.contents)'to not renderchainStatecontents - Oh no.. I think there is a problem with this approach:

- Commits happen concurrently, but the chain state is updated in isolation

- For example, in a three party Head we will see three commits

- Two threads will be reading/updating the state

- Receiving transactions will

callback, theHydraNodeStateprovides the latestchainStateto the continuation:- The continuation will evolve that chain state into a

cs'and put it as anewChainStateinto the queue

- The continuation will evolve that chain state into a

- The main thread processes events in the

HydraNodequeue:- Processing the

Observationevent for a commit tx will put it'scs'as the latestchainState

- Processing the

- So it's a race condition between when we get callbacks (query the state) and process events (update the state).

- Maybe using

modifyHeadStatein thechainCallbackis not such a dirty hack? We could avoid dealing with updatingchainStatein the head logic, but this is really increasing complexity of data flow.

- When threading through the

chainStateinHeadLogic: The field access and huge amount of arguments inHeadLogicreally needs refactoring. Individual state types should help here. - When debugging, I realize that the

ChainContextis always in the Hydra node logs (as JSON) and it's HUGE.. need to move this out ofChainStateor somehow switch it's JSON instance depending on log/persistence. - After encountering the bug in the head logic, I had the idea of checking chain states in the

simulatedChaintest driver. However, thechainStateVaris shared between nodes and this messes things up. - This will be even a bigger problem with the

Modelusing the realChainStateType Tx. The simulation needs to keep track of a chain state perHydraNode.

- Starting a fresh adr18 branch as it's a PITA to rebase on top of persistence now.

- Start this time with all the types as we had them last time. e.g. that

Observationonly contains a new chain state - E2E tests with new

ChainCallbackare interesting.. shall we really do the chain state book-keeping in the tests?

After further investigation, we discover that, once again, one of of us had the wrong keys for another node. Fixing configuration solved the problem.

Problem with the tui because of the way the docker image handles parameters (add --). #567

Problem with peers blinking. They connect/disconnect in a loop. We see deserialization errors in the logs. It happens we don't use the exact same latest version. Some docker build github action did not use the latest latest image but a not so latest one. Anyway, we should add some tag to our images to be sure about which version is running. See #568

Also, it is hard to read through the logs as there are quite some noise there. Since it's json stuff we should explore how to help filter out interesting logs more easily. See #569

Pascal is unable to initiate a head. Not enough funds error but he has the funds. Maybe the problem comes from the fact that he has two UTxO marked as fuel. One is too small, the other one is OK. Is there a problem with UTxO selection in our code? See #570

TxHash TxIx Amount

--------------------------------------------------------------------------------------

043b9fcb61006b42967714135607b632a9f0d50efbf710822365d214b237a504 0 89832651 lovelace + TxOutDatumNone

043b9fcb61006b42967714135607b632a9f0d50efbf710822365d214b237a504 1 10000000 lovelace + TxOutDatumHash ScriptDataInBabbageEra "a654fb60d21c1fed48db2c320aa6df9737ec0204c0ba53b9b94a09fb40e757f3"

13400285bb2db7179ac470f525fe341b0b2f91f579da265a350fa1db6829bf7f 0 10000000 lovelace + TxOutDatumNone

e9c45dd07cdafd96a399b52c9c73f5d9886bbd283b09b7eae054092e5b926b58 1 3654400 lovelace + TxOutDatumHash ScriptDataInBabbageEra "a654fb60d21c1fed48db2c320aa6df9737ec0204c0ba53b9b94a09fb40e757f3"

Sebastian initializes a head but nobody can see it. Same with Franco and same with Sasha. We discover that some of us still have Arnaud in their setup so it might be the case that the init transaction, including Arnaud, is ignore by other peers that don't know Arnaud. We fix the configurations but we still can't initialize the head. We have to investigate more.

First step, clarify: do we want to improve how we display errors in the TUI, like the size of the error window, scrolling capability, etc.? Or do we want to improve the meaning of the message?

Second, try to reproduce an error using the TUI:

- tried to upgrade a node but has problems with the nodeId parameter format... probably a version error

- tried to FIX xterm error Sasha has observed on his node

The xterm error is due to the static binary of the tui depending, at runtime, to some terminal profile. Adding this to the docker image and setting TERMINFO env variable appropriately solved the issue.

My code has crashed with the following uncatched exception:

Oct 14 15:49:41 ip-172-31-45-238 bc6522f81da8[34927]: hydra-node: QueryException "AcquireFailurePointNotOnChain"

Looking at previous logs, it might be the case that the layer 1 switch to a fork after hydra has taken some block into consideration. Then, querying layer 1 led to an exception. Here are some, probably significatn logs:

# new tip taken into account by hydra-node:

Oct 14 15:49:41 ip-172-31-45-238 bc6522f81da8[34927]: [bc6522f8:cardano.node.ChainDB:Notice:66] [2022-10-14 15:49:41.10 UTC] Chain extended, new tip: 787b2e7bb45f5a77740c6679ca2a35332420eb14a795a1bedcff237126aa84ea at slot 5759381

Oct 14 15:49:41 ip-172-31-45-238 bc6522f81da8[34927]: {"timestamp":"2022-10-14T15:49:41.143911009Z","threadId":49,"namespace":"HydraNode-1","message":{"directChain":{"contents":{"newUTxO":{"3b54e9e04dd621d99b1b5c40d32d9d2c50f19c520ca37b788629d6bfce27a0f6#TxIx 0":{"address":"60edc8831194f67c170d90474a067347b167817ce04e8b6be991e828b5","datum":null,"referenceScript":null,"value":{"lovelace":100000000,"policies":{}}},"e9c45dd07cdafd96a399b52c9c73f5d9886bbd283b09b7eae054092e5b926b58#TxIx 1":{"address":"60edc8831194f67c170d90474a067347b167817ce04e8b6be991e828b5","datum":"a654fb60d21c1fed48db2c320aa6df9737ec0204c0ba53b9b94a09fb40e757f3","referenceScript":null,"value":{"lovelace":3654400,"policies":{}}}},"tag":"ApplyBlock"},"tag":"Wallet"},"tag":"DirectChain"}}

Oct 14 15:49:41 ip-172-31-45-238 bc6522f81da8[34927]: {"timestamp":"2022-10-14T15:49:41.143915519Z","threadId":49,"namespace":"HydraNode-1","message":{"directChain":{"point":"At (Block {blockPointSlot = SlotNo 5759381, blockPointHash = 787b2e7bb45f5a77740c6679ca2a35332420eb14a795a1bedcff237126aa84ea})","tag":"RolledForward"},"tag":"DirectChain"}}

# layer 1 swithes to a fork

Oct 14 15:49:41 ip-172-31-45-238 bc6522f81da8[34927]: [bc6522f8:cardano.node.ChainDB:Info:66] [2022-10-14 15:49:41.22 UTC] Block fits onto some fork: 8adda8ee035fb15aac00c0adbec662c2197cc9c1fbd4282486f6f6513c7700f5 at slot 5759381

Oct 14 15:49:41 ip-172-31-45-238 bc6522f81da8[34927]: [bc6522f8:cardano.node.ChainDB:Notice:66] [2022-10-14 15:49:41.22 UTC] Switched to a fork, new tip: 8adda8ee035fb15aac00c0adbec662c2197cc9c1fbd4282486f6f6513c7700f5 at slot 5759381

# ignoring some "closing connections" logs

# hydra node crashes with an exception:

Oct 14 15:49:41 ip-172-31-45-238 bc6522f81da8[34927]: hydra-node: QueryException "AcquireFailurePointNotOnChain"

Trying to figure out where the problem was in the code I had two issues:

- figuring out that the last message meant that the code abruptedly crashed because of an uncaught exception (trick for later: it's not JSON)

- figuring out which version of the code was running on my node

On my running version, the excepetion is thrown in CardanoClient.hs:294]([https://github.com/input-output-hk/hydra-poc/blob/979a5610eed0372f2826b094419245435ddaa22c/hydra-node/src/Hydra/Chain/CardanoClient.hs#L294) Which would correspond to CardanoClient:295 in current version of the code.

- How do express the rollback tests? One idea: add slot to all server outputs, then we can create

Rollbackevents easily - Problem: it’s a big change and it feels like leaking internal information to the client

- Alternative: Add a

rollbackmethod to the simulated chain, taking a number of “steps” again. - We encounter the problem:

simulatedChainAndNetworkneeds to be abstract overtxand so it’s quite tricky to keep a chain state - We worked around it by adding a

advanceSlottoIsChainState, that way we can increase slots without knowing whether it’s aSimpleTxorTxchain state - Things are becoming quite messy here and there and we discuss & reflect a bit:

- Having the

ChainSlotinside theChainStateType txis maybe more complicated than necessary - Why do all chain events have a

chainStatein the first place? - It would be much clearer if each

ChainEventhas a slot, but onlyObservationmay result in a newchainState

- Having the

-

We mostly discussed rollbacks and how they are handled in the protocol

-

Originating comment triggering this discussion: "wait sufficiently long with first off-chain tx so no rollback will happen below open"

-

First scenario:

- Head is opened with a

collectComtransaction with someu0, recorded as someη0(typically a hash ofu0) - Some off-chain transactions happen leading to a

u1and snapshot signatureξ1 - A rollback happens where

collectComand one party'scommitis not included in the chain anymore - The affected party does

commita *different UTxO into the head and opens the head again withcollectCom - Now, the head could be closed with a proof

ξ1ofu1, although it does not apply tou0

- Head is opened with a

-

Solution:

- Incorporate

η0into anyξ, so snapshot signatures will need to be about the newu, but also aboutu0 - That way, any head opened with different UTxOs will require different signatures

- Incorporate

-

We also consider to include the

HeadIdinto the signature to be clear thatξis about a single, unique head instance -

Second scenario:

- Head gets closed with a

close, leading to a contestation deadlineDL - Some party does

contestcorrectly beforeDL, but that transaction gets rolled back just before the deadline - Now this party has no chance in

contestin time

- Head gets closed with a

-

This would also happen with very small contestation periods.

-

The discussion went around that it would not be a problem on the theoretical

ouroboros, but is one oncardano-> not super conclusive. -

We think we are okay with the fact that a participant in the Head protocol would know the network security parameter and hence how risky some contestation period is.

Pairing on #535

I would like to write a small script to generate a graph out of the execution trace, to ease the understanding of the flow of actions

We have 2 problems:

- In the state model, we have a UTxO with no value which does not really make sense -> we need to remove a fully consumed UTxO

- In the

NewTxgenerator, we generate a tx with 0 value which does not make sense either - The state model is not really faithful to the eUTxO model: A UTxO should just be "consumed" and not "updated"

it "removes assets with 0 amount from Value when mappending" $ do

let zeroADA = lovelaceToValue 0

noValue = mempty

zeroADA <> zeroADA `shouldBe` noValue

but this property fails

forAll genAdaValue $ \val -> do

let zeroADA = lovelaceToValue 0

zeroADA <> val == val

&& val <> zeroADA == val

with the following error:

test/Hydra/ModelSpec.hs:104:3:

1) Hydra.Model ADA Value respects monoid laws

Falsified (after 3 tests):

valueFromList [(AdaAssetId,0)]

We realise that genAdaValue produces 0 ADA Value which is problematic because:

- When

mappending 2Value, there is a normalisation process that removes entries with a 0 amount - In the ledger rules, there's a minimum ada value requirement: Check https://github.com/input-output-hk/cardano-ledger/blob/master/doc/explanations/min-utxo-mary.rst which implies there cannot be a UTxO with no ADAs anyway

What do we do?

- Easy: Fix the generator to ensure it does not generate 0 ADA values

- fix

genAdaValue? - fix the call site -> Commit generator in the

Model

- fix

- less easy: handle this problem at the Model levle? eg. raise an observable error if someone crafts a 0 UTxO? but this should not be possible at all 🤔

In https://github.com/input-output-hk/cardano-ledger/blob/master/eras/shelley-ma/test-suite/src/Test/Cardano/Ledger/ShelleyMA/Serialisation/Generators.hs#L174 we actually can generate 0-valued multi assets.

Got a weird error in CI when it tries to generate documentation (https://github.com/input-output-hk/hydra-poc/actions/runs/3223955249/jobs/5274722994#step:6:110):

Error: Client bundle compiled with errors therefore further build is impossible.

SyntaxError: /home/runner/work/hydra-poc/hydra-poc/docs/benchmarks/tests/hydra-node/hspec-results.md: Expected corresponding JSX closing tag for <port>. (49:66)

47 | <li parentName="ul">{`parses --host option given valid IPv4 and IPv6 addresses`}</li>

48 | <li parentName="ul">{`parses --port option given valid port number`}</li>

> 49 | <li parentName="ul">{`parses --peer `}<host>{`:`}<port>{` option`}</li>

| ^

50 | <li parentName="ul">{`does parse --peer given ipv6 addresses`}</li>

51 | <li parentName="ul">{`parses --monitoring-port option given valid port number`}</li>

52 | <li parentName="ul">{`parses --version flag as a parse error`}</li>

error Command failed with exit code 1.

It seems angle brackets in markdown are not properly escaped !!?? 😮

Managed to get markdown pages generated properly for tests and copied to docs/ directory

Turns out there's no need for a dedicated index page, the subdirectory tests/ will automatically be added as a card on the benchmarks page and the item structure will appear on the side. However, the structure is not quite right:

- The page's title is the first level 1 item of the page which is not what I want

- The top-level directory is named after the directory's name which is ugly: I would like to replace

testswithTest Results