
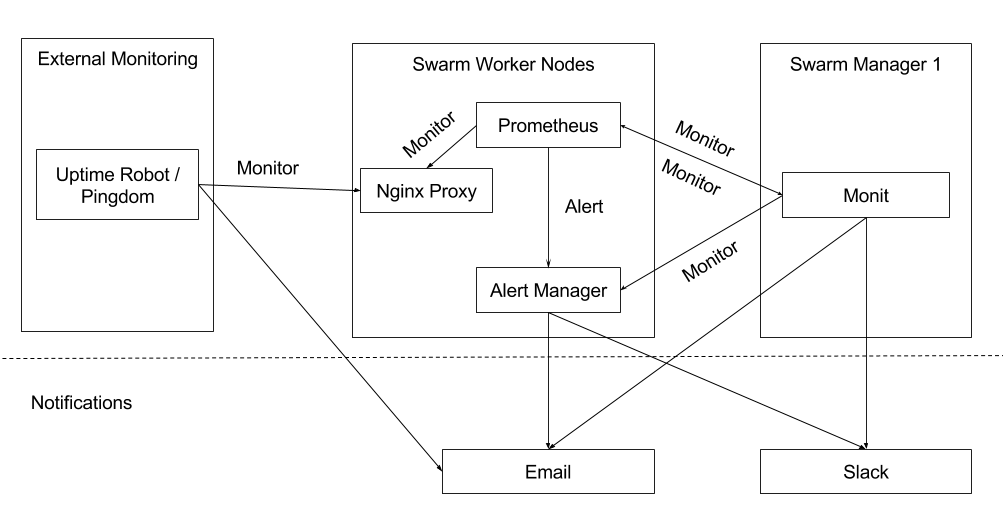

Monitoring the monitoring system

Deepak Narayana Rao edited this page Oct 17, 2017


- Prometheus and Alertmanager run as services inside Docker Swarm. Because these services are stateful, they are pinned to a single agent node that is labeled to run them. These services can go down in the following scenarios:

  - SC1: The agent node on which these services run goes down

  - SC2: These services are removed from Docker Swarm by a bug or mistake in the deploy scripts

  - SC3: Complete datacenter failure

- We need a system that alerts us when the Prometheus or Alertmanager service goes down

- The system monitoring Prometheus and Alertmanager should run outside Docker Swarm so that it tolerates swarm failures

- Prometheus and Alertmanager should not need to be exposed to the outside world

- There should be a monitoring system outside the datacenter to handle datacenter failures

- As shown in the figure above, the Monit service runs on one of the swarm manager nodes (directly on the host, not as a service in the swarm)

- Monit is configured to probe the Prometheus and Alertmanager HTTP endpoints periodically (every minute)

- Monit sends alert notifications when Prometheus or Alertmanager fails 5 consecutive checks (5 minutes)

- This setup covers failure scenarios SC1 and SC2 listed above
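The Monit check described above boils down to a small state machine: probe each endpoint once a minute, alert only after 5 consecutive failures, and reset the counter on the first success. The Python sketch below illustrates that logic under stated assumptions; it is not Monit's implementation or configuration (Monit expresses this declaratively in its control file). The hostnames are hypothetical placeholders, the `/-/healthy` paths on ports 9090 and 9093 are assumed to be the default Prometheus and Alertmanager health endpoints, and `send_alert` is a hypothetical stand-in for whatever notification channel is actually used.

```python
import time
import urllib.error
import urllib.request

# Hypothetical endpoints: hostnames are placeholders; ports 9090/9093 and the
# /-/healthy path are assumed defaults for Prometheus and Alertmanager.
ENDPOINTS = {
    "prometheus": "http://prometheus.example.internal:9090/-/healthy",
    "alertmanager": "http://alertmanager.example.internal:9093/-/healthy",
}
CHECK_INTERVAL_SECONDS = 60  # one check per minute
FAILURE_THRESHOLD = 5        # alert after 5 consecutive failed checks


class EndpointState:
    """Tracks consecutive failed checks for a single endpoint."""

    def __init__(self, threshold: int = FAILURE_THRESHOLD) -> None:
        self.threshold = threshold
        self.consecutive_failures = 0

    def record(self, healthy: bool) -> bool:
        """Record one check result; return True exactly when an alert should fire."""
        if healthy:
            self.consecutive_failures = 0
            return False
        self.consecutive_failures += 1
        # Fire once when the threshold is reached, not on every later failure.
        return self.consecutive_failures == self.threshold


def probe(url: str, timeout: float = 10.0) -> bool:
    """Return True if the endpoint answers with an HTTP 2xx status within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except (urllib.error.URLError, OSError):
        # Covers connection refused, DNS failure, timeouts and non-2xx (HTTPError).
        return False


def send_alert(name: str, url: str) -> None:
    # Hypothetical placeholder: a real setup would send mail or a chat message here.
    print(f"ALERT: {name} ({url}) failed {FAILURE_THRESHOLD} consecutive checks")


def run_forever() -> None:
    """Probe every endpoint once per interval and alert on sustained failure."""
    states = {name: EndpointState() for name in ENDPOINTS}
    while True:
        for name, url in ENDPOINTS.items():
            if states[name].record(probe(url)):
                send_alert(name, url)
        time.sleep(CHECK_INTERVAL_SECONDS)
```

Calling `run_forever()` starts the loop. Firing exactly once when the counter reaches the threshold, rather than on every failed check afterwards, is a design choice in this sketch that avoids repeating the same notification every minute while a service stays down.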

Note: This work has not started yet

- An external monitoring service such as https://uptimerobot.com/ or https://www.pingdom.com/ will be configured to check one or more HTTP endpoints, such as the portal root URL

- This setup covers failure scenario SC3 listed above

- DeadmanSnitch: In this approach, Prometheus and Alertmanager would have to be made to ping DeadmanSnitch periodically. If DeadmanSnitch stops receiving pings from these services, it raises an alert. The drawbacks are:

  - Added complexity of making Prometheus and Alertmanager ping DeadmanSnitch periodically

  - There are only paid plans
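For comparison, the receiving side of a dead man's switch such as DeadmanSnitch inverts the check: instead of probing the service, it waits for the service to check in and alerts when a heartbeat is overdue. Below is a minimal Python sketch, assuming a fixed maximum silence period; the class and method names are hypothetical, and this is not DeadmanSnitch's implementation. The clock is injectable so the logic can be exercised without actually waiting.

```python
import time
from typing import Callable


class DeadMansSwitch:
    """Hypothetical sketch: alert when no heartbeat arrives within max_silence seconds."""

    def __init__(self, max_silence: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_silence = max_silence
        self.clock = clock
        self.last_ping: float = clock()  # start counting from creation
        self.alerted = False

    def ping(self) -> None:
        """Called whenever the monitored system checks in; re-arms the switch."""
        self.last_ping = self.clock()
        self.alerted = False

    def check(self) -> bool:
        """Return True exactly once per silence that exceeds max_silence."""
        silent_for = self.clock() - self.last_ping
        if silent_for > self.max_silence and not self.alerted:
            self.alerted = True
            return True
        return False
```

For example, with `max_silence=120.0`, a switch last pinged at t=60 s stays quiet when checked at t=170 s and fires once at t=181 s; a new `ping()` re-arms it. The drawback noted above shows up here too: the monitored system must actively call `ping()`, which is extra machinery that Prometheus and Alertmanager would need to be configured to perform.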