
Meeting Notes (2020 06 02)

Alex McLain edited this page Jun 25, 2020


Brainstorming

- An animation could start as a seed which has a motion vector and color vector.
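
Read literally, a seed is a small particle: it has a position that moves by a motion vector each tick and a color that drifts by a color vector. The following is a minimal sketch that assumes a 2D key grid and RGB channels clamped to 0 to 255. `Seed`, `step`, and `clamp` are hypothetical names, not an agreed design.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Seed:
    # Hypothetical seed: a position on the key grid plus per-tick motion and color deltas.
    position: tuple[float, float]
    motion: tuple[float, float]          # grid units moved per tick
    color: tuple[int, int, int]          # current RGB color
    color_delta: tuple[int, int, int]    # RGB change per tick


def clamp(value: int) -> int:
    """Keep a color channel inside the 0..255 range."""
    return max(0, min(255, value))


def step(seed: Seed) -> Seed:
    """Advance the seed by one tick, returning a new immutable seed."""
    x, y = seed.position
    dx, dy = seed.motion
    new_color = tuple(clamp(c + d) for c, d in zip(seed.color, seed.color_delta))
    return Seed((x + dx, y + dy), seed.motion, new_color, seed.color_delta)
```

For example, take a red seed at `(0.0, 0.0)` with motion `(1.0, 0.5)` and color delta `(-50, 25, 0)`. After three calls to `step`, it reaches position `(3.0, 1.5)` and color `(105, 75, 0)`.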

- Pixel-based animations could use keyframes and tweening.
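
Tweening means filling in the frames between keyframes. The sketch below shows linear tweening for a single pixel. It assumes keyframes are `(tick, (r, g, b))` pairs sorted by tick. The format and the `tween` name are assumptions. Easing curves other than linear would slot in where `fraction` is computed.

```python
from bisect import bisect_right

# Hypothetical keyframe format: (tick, (r, g, b)), sorted by tick.
Keyframe = tuple[int, tuple[int, int, int]]


def tween(keyframes: list[Keyframe], tick: int) -> tuple[int, int, int]:
    """Linearly interpolate a pixel's color at `tick` between the surrounding keyframes."""
    ticks = [t for t, _ in keyframes]
    if tick <= ticks[0]:
        return keyframes[0][1]   # before the first keyframe: hold its color
    if tick >= ticks[-1]:
        return keyframes[-1][1]  # after the last keyframe: hold its color
    i = bisect_right(ticks, tick)  # ticks[i - 1] <= tick < ticks[i]
    (t0, c0), (t1, c1) = keyframes[i - 1], keyframes[i]
    fraction = (tick - t0) / (t1 - t0)
    return tuple(round(a + (b - a) * fraction) for a, b in zip(c0, c1))
```

With keyframes of black at tick 0 and red at tick 10, `tween(keyframes, 2)` gives `(51, 0, 0)`.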

- Does chameleon in its current state help us with pixel blending?

-

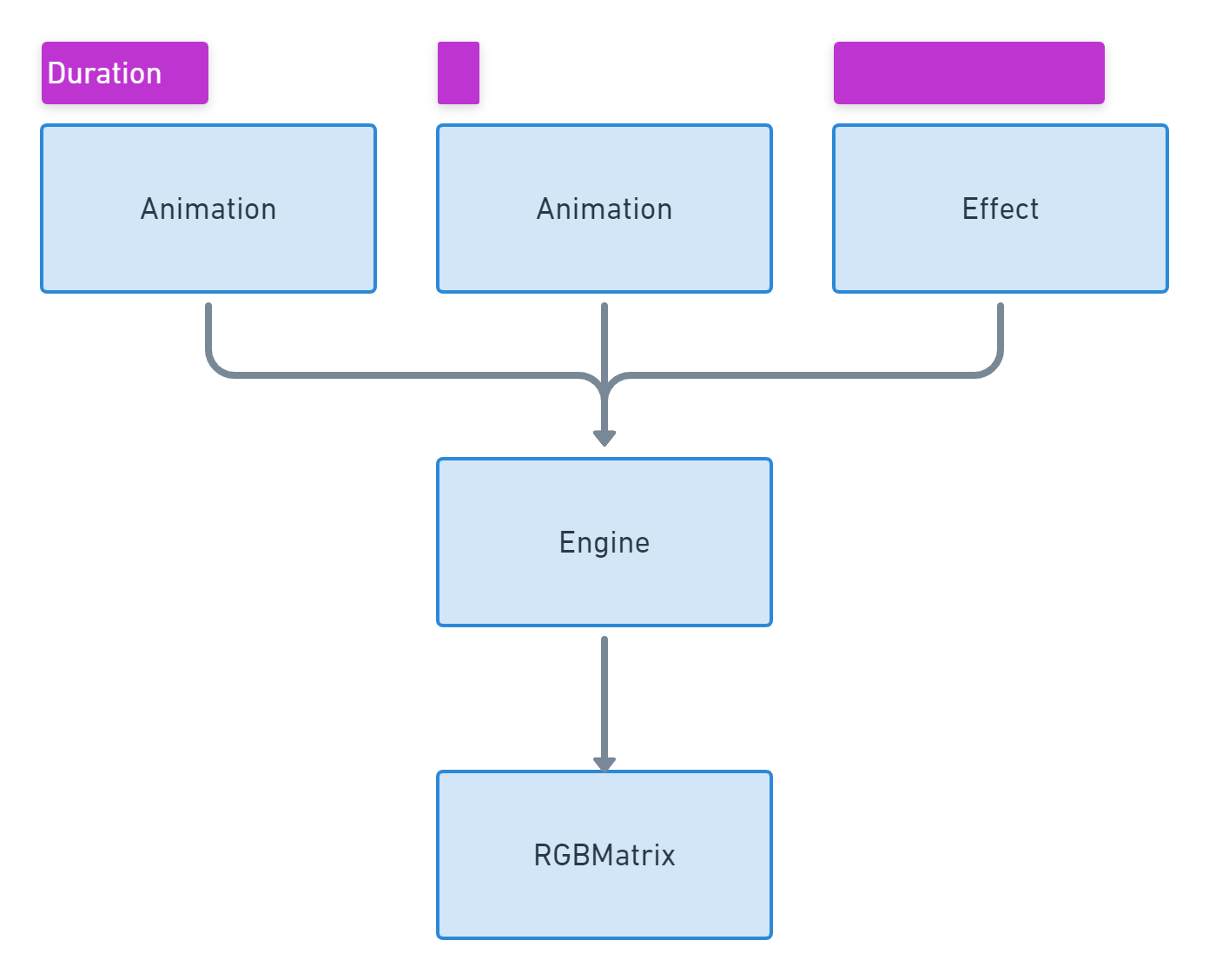

AnimationvsEffect- Should frame-based animations be a different system than the math-based animations? - Should an animation have a black box state field that it can store state in that nothing else can access?

- How do we support something like Conway's Game of Life that relies on previous pixel state?

- How do we support the Simon game?
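
Conway's Game of Life is a good test of the "black box" state idea. Each frame depends only on the previous generation, which the animation can keep privately. The following sketch assumes a bounded grid where cells beyond the edges count as dead. `LifeAnimation` and `next_frame` are hypothetical names.

```python
class LifeAnimation:
    """Hypothetical Game of Life animation that keeps its generation as private state.

    The renderer only sees the frames returned by next_frame(); the set of live
    cells never leaves the animation.
    """

    def __init__(self, live_cells: set[tuple[int, int]], width: int, height: int):
        self._live = set(live_cells)  # private state: (x, y) of each live cell
        self._width = width
        self._height = height

    def _live_neighbors(self, x: int, y: int) -> int:
        return sum(
            (x + dx, y + dy) in self._live
            for dx in (-1, 0, 1)
            for dy in (-1, 0, 1)
            if (dx, dy) != (0, 0)
        )

    def _alive_next(self, x: int, y: int) -> bool:
        n = self._live_neighbors(x, y)
        return n == 3 or (n == 2 and (x, y) in self._live)

    def next_frame(self) -> list[list[bool]]:
        """Advance one generation (cells beyond the edges count as dead); return it row by row."""
        # The comprehension reads the old generation; it is replaced only afterwards.
        self._live = {
            (x, y)
            for x in range(self._width)
            for y in range(self._height)
            if self._alive_next(x, y)
        }
        return [[(x, y) in self._live for x in range(self._width)] for y in range(self._height)]
```

A vertical three-cell "blinker" on a 3x3 grid alternates between a horizontal and a vertical line on successive calls. The renderer never touches the grid itself.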

- Animations as a black box in general?

- The overall consensus seems to be yes, conceptually.

- Implementation: Should an animation be a GenServer?

- Pro: It could be a completely isolated component that can store its own internal state and emits messages (frames) to be rendered.

- Con: Emitting messages introduces concurrency between an animation and the renderer and leaves the frames at the mercy of the scheduler (unpredictable timing, and unpredictable ordering if we support multiple animations running at once). Frames could also pile up.

- How can an animation know when to draw the next frame, and/or what the frame's content should be, when an event is received?

- Do we need a render `Engine` to control timing, which animations are running, and animation blending?

- Should we have the concept of "base" properties that live inside an animation and have a "global" scaling factor for those properties that the engine can control?

- Probably not. It would be cool if each animation could receive events and modify its own properties.
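
One way to avoid the frame pile-up and ordering concerns raised about a GenServer-per-animation design is for an engine to *pull* frames synchronously, in a fixed order. Each animation still owns its properties and updates them in response to events. The following is a single-threaded sketch with hypothetical names (`Engine`, `ColorFill`, `render`, `handle_event`). It layers animations, so later ones overwrite earlier pixels, rather than blending them.

```python
from typing import Protocol

Color = tuple[int, int, int]
Frame = dict[tuple[int, int], Color]  # pixel coordinate -> color


class Animation(Protocol):
    def render(self, tick: int) -> Frame: ...
    def handle_event(self, event: str) -> None: ...


class ColorFill:
    """Hypothetical animation that owns its color and changes it in response to events."""

    def __init__(self, pixels: list[tuple[int, int]], color: Color):
        self.pixels = pixels
        self.color = color

    def render(self, tick: int) -> Frame:
        return {pixel: self.color for pixel in self.pixels}

    def handle_event(self, event: str) -> None:
        if event == "dim":
            self.color = tuple(channel // 2 for channel in self.color)


class Engine:
    """Hypothetical engine that owns timing and the list of running animations.

    Frames are pulled synchronously in list order, so draw order never depends on a
    scheduler and there is no queue of undelivered frames to pile up.
    """

    def __init__(self, animations: list[Animation]):
        self.animations = animations
        self.tick = 0

    def send_event(self, event: str) -> None:
        for animation in self.animations:
            animation.handle_event(event)

    def next_frame(self) -> Frame:
        frame: Frame = {}
        for animation in self.animations:
            frame.update(animation.render(self.tick))  # later animations overwrite earlier pixels
        self.tick += 1
        return frame
```

Because `next_frame` calls every animation itself, timing and draw order are decided in one place. The trade-off is that a slow animation delays the whole frame.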

- There are some animations that don't actually need to animate (solid colors). Do we really need to be requesting a next frame for these animations?

- An animation could return a term for not having a new frame to render.

- The compiler may already optimize for this case: if the current frame is passed to the renderer as the next frame, it should be passing a pointer to the same data.

- What if the animation sent out a "program" (instruction set) to the render engine instead of frames?
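
The idea of returning a term for "no new frame" could work like this: a static animation renders once and then returns a sentinel, and the renderer skips the LED write when it sees it. The following sketch uses `None` as the sentinel. `SolidColor`, `next_frame`, and `run` are hypothetical names.

```python
from typing import Optional

Frame = tuple[tuple[int, int, int], ...]  # one RGB color per pixel


class SolidColor:
    """Hypothetical static animation: it draws once, then reports "no new frame"."""

    def __init__(self, color: tuple[int, int, int], pixel_count: int):
        self._frame: Frame = (color,) * pixel_count
        self._drawn = False

    def next_frame(self, tick: int) -> Optional[Frame]:
        if self._drawn:
            return None  # sentinel: nothing changed since the last frame
        self._drawn = True
        return self._frame


def run(animation: SolidColor, ticks: int) -> int:
    """Drive the animation for `ticks` ticks; return how many frames were pushed."""
    pushes = 0
    for tick in range(ticks):
        frame = animation.next_frame(tick)
        if frame is not None:
            pushes += 1  # a real renderer would write `frame` to the LEDs here
    return pushes
```

Over 60 ticks, `run(SolidColor((255, 0, 0), 4), 60)` pushes a single frame.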


- Integer rollover is a potential issue in a tick-based system, which could cause an animation glitch when the rollover happens.

- It should be very infrequent, but we know it will happen at some point.

- If we care about mitigating this, one way we could solve it is by allowing an animation designer to specify the tick where resetting the tick counter is seamless.

- We could also use a timestamp rather than an integer.

- Another option is to compute a delta value every time a frame is received rather than using a tick number.
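
Python integers never overflow, so this sketch simulates a fixed-width tick counter with modular arithmetic. It shows two of the mitigations above. The first computes a delta between readings, which stays correct across a rollover. The second lets a looping animation reset its own counter at a designer-chosen seamless point. The 32-bit width and all names are assumptions.

```python
TICK_BITS = 32  # assumed counter width; the notes don't specify one
TICK_MODULUS = 1 << TICK_BITS


def advance(tick: int) -> int:
    """Increment the counter, wrapping to 0 the way a fixed-width unsigned integer would."""
    return (tick + 1) % TICK_MODULUS


def elapsed(previous: int, current: int) -> int:
    """Ticks between two readings; correct as long as fewer than TICK_MODULUS ticks
    pass between them, even if the counter rolled over in the meantime."""
    return (current - previous) % TICK_MODULUS


def advance_looping(tick: int, period: int) -> int:
    """Designer-specified reset: if an animation looks identical at tick `period` and
    tick 0, its own counter can wrap there, so it never reaches the global rollover."""
    return (tick + 1) % period
```

For example, `elapsed(TICK_MODULUS - 2, 3)` is `5` even though the counter wrapped in between.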

- How do we integrate key presses into the system?

- Pub/sub system? A "key publisher"?

- An animation could subscribe to the key presses it cares about, for example.

- A bus architecture would also work well for time-of-day events.
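
A key publisher maps naturally onto a small pub/sub bus. Animations subscribe to the topics they care about, and the same bus can carry time-of-day events. The following is a minimal in-process sketch. The topic strings (`"key:a"`, `"time:sunset"`) and class names are hypothetical.

```python
from typing import Callable

Callback = Callable[[object], None]


class Bus:
    """Hypothetical in-process pub/sub bus keyed by topic string."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callback]] = {}

    def subscribe(self, topic: str, callback: Callback) -> None:
        self._subscribers.setdefault(topic, []).append(callback)

    def publish(self, topic: str, payload: object) -> None:
        # Topics with no subscribers are simply ignored.
        for callback in self._subscribers.get(topic, []):
            callback(payload)


class KeyReactiveAnimation:
    """Hypothetical animation that subscribes only to the keys it reacts to."""

    def __init__(self, bus: Bus, keys: list[str]):
        self.pressed: list[str] = []
        for key in keys:
            bus.subscribe(f"key:{key}", self.on_key)

    def on_key(self, key: object) -> None:
        self.pressed.append(str(key))
```

An animation created with keys `["a", "s"]` records presses of `a` and `s` and never hears about other keys.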

- If blending animations, do we need an alpha channel?
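
If animations carried an alpha value, blending could use the standard "source over" compositing rule. The LEDs themselves are always opaque, so a sketch only needs the opaque-destination case: `out = alpha * top + (1 - alpha) * bottom` per channel. `blend_over` is a hypothetical name, and alpha is assumed to be a float from 0.0 to 1.0.

```python
Color = tuple[int, int, int]


def blend_over(top: Color, alpha: float, bottom: Color) -> Color:
    """Composite `top` with opacity `alpha` over an opaque `bottom` pixel.

    This is the "source over" operator for an opaque destination, applied per RGB channel.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0.0 and 1.0")
    return tuple(round(alpha * t + (1 - alpha) * b) for t, b in zip(top, bottom))
```

Layering more than two animations is a fold: blend each layer over the result of the layers beneath it.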

- Should we have a `Game` type of animation that gives some kind of more direct access to the frame buffer(s)?

QMK

- There are runners and animations.

- Solid reactive cross (animation). link

- It returns an `effect_runner` that determines how the animation plays.

- There is an animation function that takes input params determined by the effect runner and outputs an `HSV`, a pixel color.

- The rainbow animation (nexus). link

- Hit tracker - Coordinates of the keys that have been pressed in the past.

- QMK does animation blending by looping over an animation and calculating the effect for each hit in the hit tracker. One effect's output is the next effect's input.

- The code for an effect is really complex, which makes it hard to understand what the effect is rendering.
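
The QMK structure described above can be paraphrased in a few lines. An effect runner hands each LED's offset from every tracked hit to a math function and feeds each result into the next call. QMK itself is written in C, and this Python sketch only mirrors that shape. `effect_runner`, `cross_math`, `Hit`, and the fade formula are illustrative assumptions, not QMK's code.

```python
from dataclasses import dataclass
from math import hypot
from typing import Callable


@dataclass
class HSV:
    h: int
    s: int
    v: int


@dataclass
class Hit:
    x: int
    y: int
    tick: int  # ticks elapsed since this key was pressed


# (hsv, dx, dy, dist, tick) -> hsv
EffectMath = Callable[[HSV, int, int, float, int], HSV]


def cross_math(hsv: HSV, dx: int, dy: int, dist: float, tick: int) -> HSV:
    """Illustrative per-hit effect: brighten LEDs in the hit's row or column,
    fading with distance and with time since the press. Not QMK's formula."""
    if dx != 0 and dy != 0:
        return hsv  # not on the cross: pass the input through unchanged
    boost = max(0, 255 - tick - int(dist) * 16)
    return HSV(hsv.h, hsv.s, min(255, hsv.v + boost))


def effect_runner(led_x: int, led_y: int, base: HSV, hits: list[Hit], effect: EffectMath) -> HSV:
    """Run `effect` once per tracked hit; each call's output is the next call's input."""
    hsv = base
    for hit in hits:
        dx, dy = led_x - hit.x, led_y - hit.y
        hsv = effect(hsv, dx, dy, hypot(dx, dy), hit.tick)
    return hsv
```

Written this way, the chaining is explicit in one loop, while the per-hit math function decides what a single press looks like.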

- Chris: Spike some of the ideas from today's call.

- Nick: Play with vector space ideas.