HATETRIS is a variation of the classic game Tetris, written as a JavaScript browser game in 2010 by sci-fi author and programmer qntm. The piece selection, instead of being random, is based on the state of the game and minmaxed to make clearing lines vastly more difficult than Tetris; however, this difficulty is balanced by the game's deterministic nature, which allows the game tree to be searched to an arbitrary depth in an emulator.

The game can be played here.

This repository contains Rust code for a highly-optimized HATETRIS emulator and a beam search routine using a neural network as the pruning heuristic. This repository was used to obtain the current world record of 366 points.

A detailed explanation of the history of HATETRIS records, and of the development of this repository and the techniques in it, can be found on our blog:

The emulator represents a standard 20x10 HATETRIS board as an array of 16 u16 integers, with the four rows above the well line not stored (since they will always be 0). To define some basic terminology:

- A well is the 20 x 10 area in which the game is played. This does not include the piece moving around within the well.

- A state consists of the well and the current score.

- A piece is the tetromino being maneuvered around in the well. When the piece cannot move down any further, it merges with the well and stops moving. This generates a new well.

- A position refers to a legal placement of a piece within the well, be it terminal or not.

- A terminal position is a piece that has reached a position where it can no longer descend, that is, it is going to become part of the well, exactly where it is. Once this piece is placed, we have created a new well.

- A move is a placement of a piece within a well, such that it is terminal, and generates a new well. We do not consider non-terminal motion inside the well to be a ‘move’.

The emulator and the game graph created by the emulator work on moves, and not positions; for instance, a "depth 2" child of the starting well on the game graph does not describe a tetromino which has been moved twice; instead, it describes a well in which two tetrominoes have been placed into a terminal position.

The piece positions within a well are not directly emulated (except during replay code generation); instead, a 64-bit 'waveform' is used to represent all 34 possible positions a piece at a given height can be in. Bitshifting is used to rotate the waveform, and to move it left or right:

w_old = abcd efgh ijkl mnop qrst

w_left = efgh ijkl mnop qrst 0000

w_right = 0000 abcd efgh ijkl mnop

w_rotate = bcda fghe jkli nopm rstq

Bitmasks are created via lazy_static evaluation to calculate terminal positions, scores, and well heights of a given waveform, without the computationally expensive process of rendering the actual well. No well is rendered unless it is a legal move.

Previous versions of this code cached common waveforms and well patterns, to avoid bit operations at the cost of memory and hashmap lookups; the hashmap lookups turned out to be slower than recalculating the bitshifts for each new move. The current version of the emulator averages ~5 μs per move per core to generate all children of a typical well.

The beam search optionally uses quiescence lookahead to judge each well in a beam search not by its current heuristic, but by the maximum heuristic of any well reachable by a continuous series of lineclears. While some wells which cannot force the piece needed to clear the line are overestimated this way, parametric studies across a variety of heuristics and beam widths show the quiescence lookahead to be a considerable improvement.

The concept of a beam search for HATETRIS was first implemented by threepipes in 2019, and has been used in every HATETRIS record achieved by anyone since 2021. The quiescence lookahead was first implemented for HATETRIS by us, in 2022.

The neural network is NNUE (stylized ƎUИИ), an efficiently updatable neural network design first implemented by Yu Nasu in 2018 for use in computer shogi (English) and later used in the Stockfish chess engine, among others. It is a fast neural network which is sparse enough to use the CPU rather than GPU; this repository does not yet make use of optimizations such as the accumulator, quantization, or Single Instruction Multiple Data, but performance can likely be significantly improved.

NNUE was first implemented for HATETRIS by knewjade, in 2022.

While HATETRIS has always been deterministic, before June of 2021 it was possible (albeit not yet done) to force the game into an infinite loop and clear lines indefinitely. To prevent this, qntm added a loop prevention rule to the piece selection algorithm, which now ranks pieces by an ordered preference:

- If all pieces lead into previously seen wells, choose the piece corresponding to the well which has been seen the fewest times.

- If any piece does not lead into a previously seen well, choose that piece.

- Otherwise, choose whichever piece minmaxes the well height (i.e. the piece which, when placed to minimize well height, causes the highest well height).

- Otherwise, break ties in the order S -> Z -> O -> I -> L -> J -> T.

This repository currently accounts for rules 2-4; for performance reasons, we do not currently account for the case of all pieces leading to previously seen wells. We have not yet found any case of even two pieces out of seven leading to a repeat well and suspect that rule 1) cannot be triggered under normal HATETRIS rules, so implementing the rule is not currently a priority, but we will be glad to implement it if you use this repository and encounter a repeat well, (and we would also approve a PR that adds this functionality).

The program should work in any environment which has the Rust programming language installed, but we have only run this on Linux, and the exact commands may differ on Mac or Windows.

First, go to the desired directory and clone the repository:

cd /path/to/desired/directory

git clone [email protected]:thecog19/HATETRIS-public-beamsearch.gitThen, build and run with the command:

cargo build --releaseThis should install all dependencies.

The code is run from the directory which contains src and Cargo.toml, with the command:

cargo run --releaseWe output all logs to stdout, so you should pipe stdout somewhere if you want to review the logs later:

mkdir Output\ Logs/

cargo run --release 2>&1 | tee -a Output\ Logs/NAME-OF-OUTPUT-LOG.txtBy default, training is performed with the training_cycle() function:

use neural::training_cycle;

fn main() {

training_cycle();

}You can run a beam search without active training; for instance, to run a beam with a width of 1,000 based on the Generation 3 network, without saving the beam or using it for training:

use constants::NET_VERSION;

use savefile::load_file;

use searches::beam_search_network_full;

use types::{SearchConf, State};

fn main() {

let mut conf = SearchConf::testing();

conf.quiescent = true;

conf.generation = 3;

conf.beam_width = 1_000;

let neural_network_path = conf.neural_network_path();

let weight = load_file(&neural_network_path, NET_VERSION).unwrap();

let starting_state = State::new();

println!("");

println!("Starting beam with width {}", conf.beam_width);

beam_search_network_full(&starting_state, &weight, &conf);

}HATETRIS training consists of a series of discrete 'generations', each one of which trains and uses a new neural network:

- The network is created, based on either the previous generation or (for Generation 0) with random weights.

- The network is used for a beam search of width

MASTER_BEAM_WIDTH, using the quiescent lookahead. - Wells are taken at random from the beam search results. Each well is used to run a small beam of width

TRAINING_BEAM_WIDTHfor at mostTRAINING_BEAM_DEPTHsteps without the quiescent lookahead; the best heuristic in that beam is used as the training target for that well. If the beam does not lastTRAINING_BEAM_DEPTHsteps, the well's training target is -1.0. - A new network is trained by taking the previous network and backpropagating the wells and training targets gathered in step 2. This new network is used for the next generation of training.

Our results from training with nearly-default parameters showed rapid improvement; the table below lists the scores of the networks on the master beam searches, and the scores those same networks would have achieved on the master beam searches without the quiescence lookahead. Random initialization (and the fact that we modified this code and its default parameters several times during training) will ensure that no two runs are identical; still, we'd expect these general trends to hold when training beams of width ~10^6:

| Generation | Score w/ Quiescence | Score w/o Quiescence |

|---|---|---|

| 0 | 8 | 4 |

| 1 | 89 | 26 |

| 2 | 160 | 41 |

| 3 | 186 | 69 |

| 4 | 204 | 87 |

| 5 | 302 | 91 |

| 6 | 366 | 123 |

A network typically becomes good enough to find loops when its million-width beam search score surpasses ~300; we did not encounter any for generations 0 through 5.

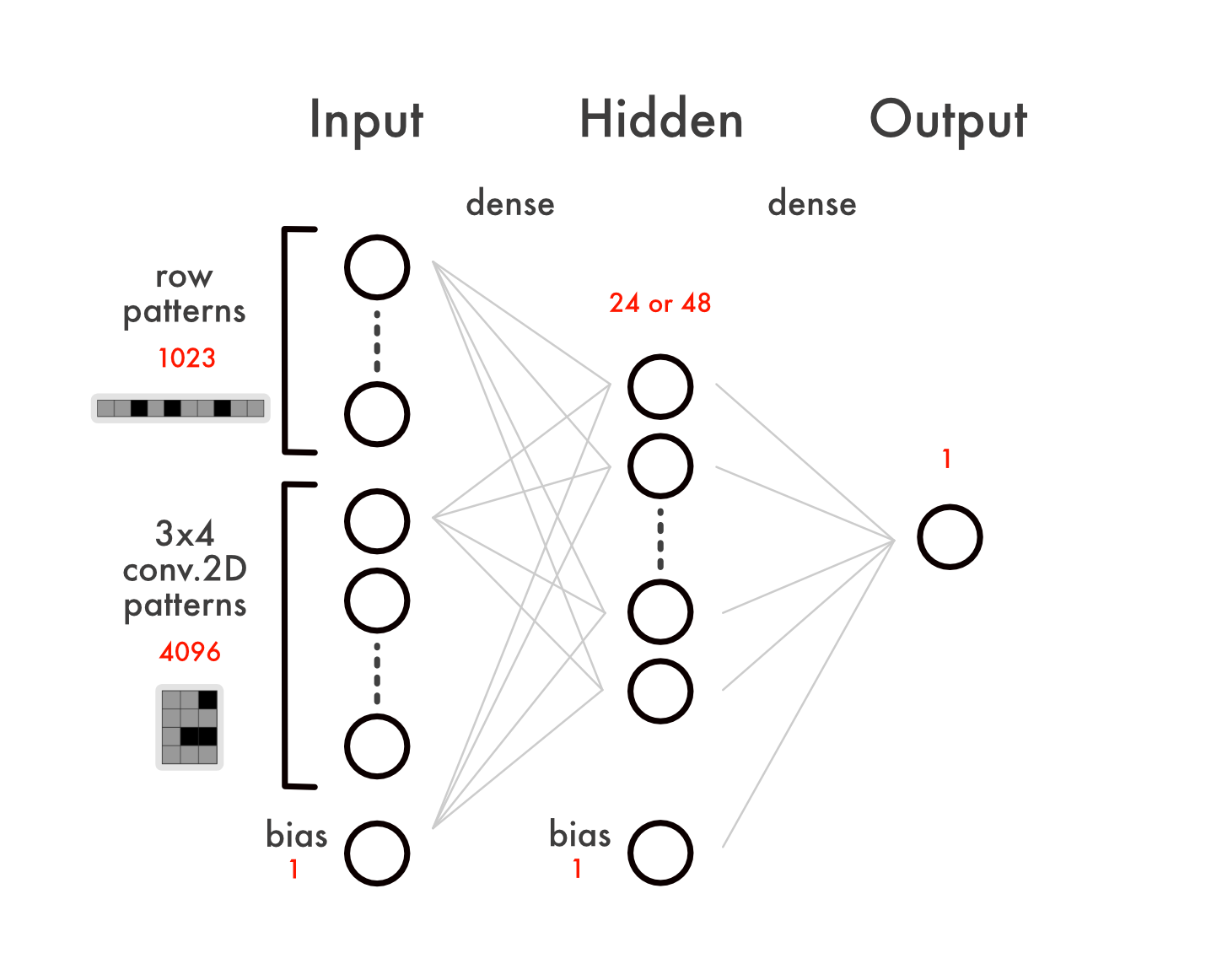

The network has two fully-connected layers with the tanh() activation function within.

The input layer is sparse and convolutional. The two convolutions used here are 4x3 and 1x10:

4x3is a rectangle containing four rows and three columns. Each4x3window in a well can be any one of 2^(4*3) = 4096 values.1x10is a single row of has 10 squares, and can be any one of 2^10 - 1 = 1023 values (not 1024, since the row corresponding to a full clear can never be reached in a real well).- This repository uses positional convolutions, meaning that e.g. an empty row on line 10 and an empty row on line 11 will contribute different amounts to the hidden layer.

We take this network from the work of knewjade; the two differences between our network and his are that our network has positional convolutions and lacks a bias neuron.

Source: knewjade

The hidden layer is dense, and converts HIDDEN neurons to a single output representing the 'quality' of the well. The output is scaled from -1.0 to +1.0; to make some sorting functions easier, we represent this as an isize in the beam search, ranging from -1,000,000 to +1,000,000.

training_cycle() will check the AEON constant in constants.rs to determine which folder to start in. For instance, if AEON is 0 (the default), then training_cycle() will check for (or create) a set of folders under Training / Aeon 0 in the base directory:

HATETRIS-PUBLIC-BEAMSEARCH

├── Output Logs

├── src

├── target

│ └── release

│ ├── ...

└── Training

└── Aeon 0

├── Generation 0

├── Generation 1

├── Generation 2

├── ...

The folder for Generation N will contain:

- An NNUE network file called

Network N.bin. - A

Replayfolder, containing for each move:move_i.bin: The wells in the beam at depthi. The beam will have a maximum width ofMASTER_BEAM_WIDTH.parent_i.bin: The parent graph of all wells in the beam at depthi.loops_i.bin: If no loops were found during movei, this file will not be created.

- A

Trainingfolder, containing:all_epochs.bin, containingMAX_EPOCHS * TRAINING_BEAM_WIDTHwells taken at random from the master beam search stored inReplay.epoch_i.bin, whereiranges from 0 toMAX_EPOCHS. Each epoch contains the starting well and the final result ofMINIBATCHsmall beam searches fromMINIBATCHdifferent wells, each of which has maximum widthTRAINING_BEAM_WIDTH.

Generation N

├── Network N.bin

├── Replay

│ ├── move_0.bin

│ ├── move_10.bin

│ ├── move_100.bin

│ ├── move_101.bin

│ ├── move_102.bin

│ ├── ...

│ ├── parent_10.bin

│ ├── parent_100.bin

│ ├── parent_101.bin

│ ├── parent_102.bin

│ ├── ...

│ ├── loops_47.bin [OPTIONAL]

└── Training

├── all_epochs.bin

├── epoch_0.bin

├── ...

├── epoch_255.bin

The training cycle can be restarted from any point within training. However, if the program is stopped in the middle of a file-write, the resulting partially-written file will not be valid and the program will panic. To clean this up, you will need to delete the relevant parent_i.bin and move_i.bin of the latest timestep (previous timesteps are unaffected and will not need to be deleted), or the relevant epoch_i.bin (again, the epoch will not be affected).

The output piped to stdout uses a consistent pattern from one run to another:

Startup:

Creating new training cycle.

No previous generation files found, starting from generation 0

Within a Beam Search:

Wells are always wrapped in a State (containing a well and a score), a StateH (containing a well, a score, and a heuristic estimate from the neural network), or StateP (containing a well, score, heuristic estimate, and index of a parent). Each row is represented as a decimal number; for instance, 775 would represent a row 1100000111, meaning that the leftmost two and rightmost three squares in that row are occupied, while the middle five squares are empty.

...

Depth 364

Time: 48604 seconds

Total children: 33344910

New well count: 1048576

Maximum score: StateH { heuristic: 806128, score: 142, well: [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 2, 515, 999, 975, 1007, 446] }

Worst heuristic: StateH { heuristic: 633172, score: 139, well: [0, 768, 896, 768, 257, 769, 771, 769, 257, 771, 783, 831, 513, 959, 999, 510] }

Best heuristic: StateH { heuristic: 915909, score: 137, well: [0, 0, 1, 63, 127, 575, 543, 783, 799, 775, 771, 775, 911, 1007, 1007, 446] }

Family distribution: [573463, 475113, 0, 0, 0, 0, 0, 0, 0, 0]

Score distribution: [(135, 344), (136, 40237), (137, 246369), (138, 362463), (139, 323610), (140, 62781), (141, 12674), (142, 98)]

Loops: 2

...

Most lines in the output are self-explanatory; Family distribution refers to the lowest rows of each well. The lowest row has never been cleared (and might be impossible to clear); this makes it a decent proxity for well and strategy diversity in the early game. For sufficiently large beams and sufficiently good networks, the lowest row will eventually become 0111111110 (in decimal, 510), so this stops being useful in the late game.

The terms in this per-move output are controlled by the print_progress() function in searches.rs. You can modify your output names here if needed.

After a Beam Search

After a beam search, the keyframes of the longest possible game (note: this is usually, but not necessarily, the game with the highest score) are output in reverse chronological order:

StateH { well: [1022, 1011, 507, 895, 991, 767, 1007, 991, 383, 959, 502, 442, 639, 1021, 637, 510], score: 302, heuristic: -999801 }

StateH { well: [962, 1011, 507, 895, 991, 767, 1007, 991, 383, 959, 502, 442, 639, 1021, 637, 510], score: 302, heuristic: -999771 }

StateH { well: [2, 1011, 507, 895, 991, 767, 1007, 991, 383, 959, 502, 442, 639, 1021, 637, 510], score: 302, heuristic: -999727 }

StateH { well: [0, 1008, 506, 895, 991, 767, 1007, 991, 383, 959, 502, 442, 639, 1021, 637, 510], score: 302, heuristic: -999741 }

...

StateH { well: [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0], score: 0, heuristic: 931545 }

Afterwards, the game's replay is output. Each keystroke is represented by L, R, D, or U (for left, right, down, and rotate moves, respectively). This is an extremely inefficient encoding, conveying only two bits of usable information per character, but any other encoding will not be guaranteed to split properly when wanting to e.g. separately describe the stem and repeating section of a loop.

HATETRIS has used hexadecimal (4 bits per character), Base65536 (16 bits per character) and currently Base2048 (11 bits per character, but Twitter-compatible) to represent replays. Currently, the easist way to convert a run from this representation to Base2048 (for an equally valid, but far more compact, replay string) is to paste the replay into the game itself, allow the game to finish replaying, and copy the resulting Base2048 replay from the end screen. We may eventually port Base2048 to Rust to avoid this step. (Or, if you're looking to make a contribution to this repository, we would approve a PR...)

RRRDDDDDDDDDDDDDDDDDDRRDDDDDDDDDDDDDDDDDLLLDDD...

Next, the training routine infers the number of well states present in each move_i.bin snapshot file and selects a random number of well states to extract from each snapshot, so that the total number of moves is MAX_EPOCHS * MINIBATCH. This is unfortunately bottlenecked by file read times; it make take a couple hours to load and extract random wells from all of the files, even though we only want a miniscule amount of data from each one individually. This is an area for potential optimization, but not something we have prioritized.

770163758 states identified from 789 timesteps in 0 seconds.

1048576 selections allocated in 0 seconds.

...

19 states extracted from timestep 782 by 7802 seconds.

23 states extracted from timestep 783 by 7803 seconds.

16 states extracted from timestep 784 by 7803 seconds.

0 states extracted from timestep 785 by 7803 seconds.

4 states extracted from timestep 786 by 7803 seconds.

0 states extracted from timestep 787 by 7803 seconds.

0 states extracted from timestep 788 by 7803 seconds.

Training path found, training data populated up to -1 out of 256.

Each epoch is then assigned MINIBATCH wells and processed in an independent thread. Depending on the size of MINIBATCH, TRAINING_BEAM_WIDTH, and TRAINING_BEAM_DEPTH, each epoch could take 30 minutes to an hour to run.

...

Training data generated for epoch 237 out of 256 in 2903 seconds.

Training data generated for epoch 238 out of 256 in 2930 seconds.

Training data generated for epoch 239 out of 256 in 2927 seconds.

Training data generated for epoch 240 out of 256 in 2870 seconds.

...

The neural network is backpropagated on the wells and training targets on one epoch at a time. If three consecutive epochs occur where the loss goes up rather than down, training is halted early to prevent overfitting.

Baseline α = 0.0005, mul = 1

Epoch 1: Loss: 0.9634717347545582 -> 0.48708445806935374

Saving epoch 1

Epoch 2: Loss: 0.5124996676330884 -> 0.22140142596518755

Saving epoch 2

Epoch 3: Loss: 0.24569042568008911 -> 0.12269242728472703

Saving epoch 3

Epoch 4: Loss: 0.13440167007141396 -> 0.08684355795403566

Saving epoch 4

...

After training is finished, the network for the next generation is finished, and the training cycle begins again.

These functions are the ones most likely to be worth modifying or editing, if you want to change how the beam search or training routine works.

emulator.rs:neural_heuristic_loop(): Calculates the legal children and their associated heuristics from a given state; the loop-prevention rule is used.quiescent_heuristic(): Calculates the heuristics of a set of states based on the maximum heuristic values of their descendants reachable by continuous S (or Z) line clears.

neural.rs:forward_pass(): Computes a linear layer andtanh()activation layer for a set of neurons. Currently the bottleneck for beam searches; it might be optimizable with Single Instruction Multiple Data (SIMD) or an accumulator.generate_training_data(): Splits the total list of states into epochs; useful to modify if you want something other than a raw beam search result to determine the training target for each state.

searches.rs:beam_search_network_full(): Performs a beam search with all the bells and whistles; multithreading, loop prevention, and optional quiescence lookahead. This is the function to use if you want to run a standalone beam search.complete_search(): Starts from a given state and does a complete search of the game tree from that state, with no heuristic of any kind. Useful for near-endgame wells or small well dimensions. Does not currently implement the loop-prevention rule; results for a well width of 4 are not accurate as a result.print_progress(): Prints some aggregate statistics about an ongoing beam search every move.

types.rsSearchConf: the configuration passed tobeam_search_network_full()andneural_heuristic_loop(), as well as the other heuristic and search functions, to pass in global parameters. This is where the beam width, depth, print behavior, and quiescent behavior are stored.WeightT: describes the creation and initialization of neural network weights. You need to change this if you want to change the neural architecture used.

- Well Geometry: Dimensions of the well itself.

WELL_HEIGHTWELL_WIDTH

- Neural Network: Dimensions of the neural network.

CONVOLUTIONS: A list of the (x,y) rectangles used for convolutions. If you want different shapes, or want to add more shapes, use this array.ALL_CONV: The total count of all convolutions in the network.HIDDEN: Number of hidden nodes in the network. More hidden nodes means a potentially better network, but will increase evaluation time.WEIGHT_COUNT: The total number off64weights in the neural network.

- Training: Parameters which influence the training cycle.

MINIBATCH: How many wells are used for each epoch of the neural network training.MAX_EPOCHS: How many epochs the neural network is trained for.MASTER_BEAM_WIDTH: The size of the master beam search for a full generation. This beam search is done once per generation.TRAINING_BEAM_WIDTH: The size of the small beams used to evaluate each position within an epoch.TRAINING_BEAM_DEPTH: The number of timesteps to run the small beams for.AEON: Bookkeeping; used to separate multiple training runs from each other. Useful when modifying hyperparameters or training routines.

- Multithreading:

THREAD_NUMBER: Number of threads available. Change based on your CPU and computational availability. By default, this only affects non-rayonprocesses;rayonprocesses will use all available cores. There are two ways of restrictingrayonthreads:- Set the environmental variable

RAYON_NUM_THREADSto the desired thread count. - Add

rayon::ThreadPoolBuilder::new().num_threads(constants::THREAD_NUMBER).build_global().unwrap();to the top offn main()inmain.rs.

- Set the environmental variable

THREAD_BATCH: Default batch size of threads. Again, this only affects non-rayonthreads.

- threepipes

- Create an AI to conquer HATETRIS [JAPANESE]

- First known HATETRIS beam search.

- hatetris (GitHub Repository)

- No emulator; calls the original JavaScript functions directly.

- Evaluates wells with a manual heuristic; uses Optuna to optimize the heuristic's hyperparameters.

- Highest score: 18.

- Create an AI to conquer HATETRIS [JAPANESE]

- knewjade

- What I did to get 66L in HATETRIS [JAPANESE]

- Covers the world records between 32 and 66 points.

- 32 points: an exhaustive search on the ending of the current 31 point world record.

- 34, 41 points: a transposition table to store favorable 'terrains' and a manual heuristic approximating the 'quality' of each well. Beam width of 1 million.

- 45 points: an improved heuristic and a wider beam (12 million).

- 66 points: further heuristic improvements, and a wider beam (25 million).

- Reached 289 lines in HATETRIS using Neural Networks [JAPANESE]

- First use of NNUE for HATETRIS, as well as using the quiescence lookahead.

- Introduction of the master beam search / training beam search technique for (respectively) training samples and training targets.

- Beam width: 200,000.

- First discovered loop.

- What I did to get 66L in HATETRIS [JAPANESE]

- Dave & Felipe (Us!)

- Getting the world record in HATETRIS

- A detailed breakdown of the techniques that ultimately culminated in the 86 point world record .

- Details about various thought processes that led to the current approach.

- Covers AlphaGo approaches, MCTS etc.

- A good overview of well structure and heuristic approaches.

- Impractical Rust: The HATETRIS World Record

- Technical detail on the MCTS approaches, Rust-specific difficulties, etc.

- Overview of hyperparameter optimization (and why it's not easy).

- Eerie tales of mutex errors and ghost nodes.

- Losing the world record in HATETRIS

- Introduces the 'bird in hand' heuristic, later refined into the quiescent lookahead.

- A detailed overview of various emulator improvements (most still in use).

- Covers the discovery of loops.

- Concludes with a detailed run-down of NNUE and how it is used.

- Getting the world record in HATETRIS