forked from protolambda/erigon

[WIP] Upstream v3.0.0 #215

Open

mininny wants to merge 5,134 commits into `op-erigon` from `upstream-v3.0.0`


Part of erigontech#11032.

Fixes the "block too soon" error, which was caused by incorrectly calculated block producer priorities:

```
DBUG[08-06|13:31:23.898] [sync] onNewBlockEvent: couldn't connect a header to the local chain tip, ignoring err="canonicalChainBuilder.Connect: invalid header error Block 10391894 was created too soon. Signer turn-ness number is 2\n"
```

Removes `SpansCache` in favour of `heimdallService.Producers`, which returns correct producer priorities.

Simplifies the `PatriciaContext` interface: returning a state `Update` instead of filling a `Cell` is more general and lets other trie implementations follow the interface without converting their inner representation of Cell/Node/etc. It also slightly reduces code complexity, since `Cell` now has a state part (`Update`) and intrinsic parts (lengths and other unexported fields such as `apk`/`spk`/`downHashedKey`).

As a next step, this allows us to remove the batch of `Process*` functions and keep just `Process(context.Context, updates *Updates, logPrefix string) ([]byte, error)`. In that case `Updates.mode` alone decides whether `Update` values need to be collected during execution. In general, we don't need to keep `Update` close to the key, because it is already in `SharedDomains` for a regular exec.

Part of erigontech#11149.

The Teku VC is able to work properly with this PR.

Known issue: Teku's event source client periodically disconnects, but the VC still works well.

Events still to be added:
- `block_gossip`
- `chain_reorg`
- `light_client_finality_update`
- `light_client_optimistic_update`
- `payload_attributes`

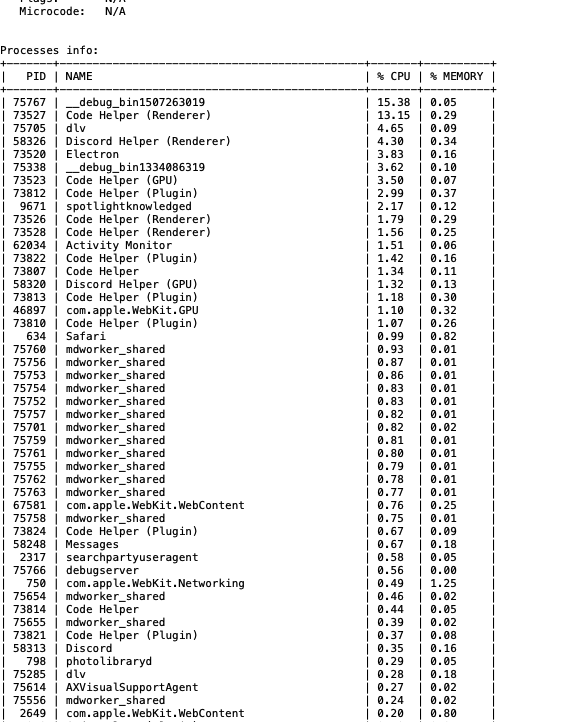

- Updated gopsutil version as it has improvements in getting processes and memory info.

`murmur3.New*` methods return an interface, and at least 3 methods need to be called on it. `16ns` -> `11ns`

I also benchmarked `github.com/segmentio/murmur3` vs `github.com/twmb/murmur3` on a 60-byte hashed string. The second is faster but adds asm deps, so I'm sticking with the Go dep (asm deps are not friendly for cross-compilation). Maybe I will try it later, after our new release pipeline is ready.

Bench results:
- intel: `20ns` -> `14ns`
- amd: `31ns` -> `26ns`
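The overhead described above (a constructor that returns an interface, followed by several method calls) can be illustrated with a small sketch. It uses the standard library's `hash/fnv` as a stand-in, which is an assumption made for self-containment and not the murmur3 library from this commit; `hashViaInterface` and `hashDirect` are hypothetical names.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// hashViaInterface mirrors the "constructor returns an interface" pattern:
// build a hasher, Write the bytes, then read the sum (three calls).
func hashViaInterface(data []byte) uint32 {
	h := fnv.New32a()
	h.Write(data) // hash.Hash.Write never returns an error
	return h.Sum32()
}

// hashDirect computes the same FNV-1a value in one plain function call,
// with no interface dispatch and no hasher object.
func hashDirect(data []byte) uint32 {
	const (
		offset32 = 2166136261
		prime32  = 16777619
	)
	h := uint32(offset32)
	for _, b := range data {
		h ^= uint32(b)
		h *= prime32
	}
	return h
}

func main() {
	key := []byte("an illustrative key, not taken from the benchmark above")
	// Both paths must agree; only the call overhead differs.
	fmt.Println(hashViaInterface(key) == hashDirect(key))
}
```

Any timing difference between the two would need to be measured with `go test -bench`; the numbers quoted in the commit come from the author's own benchmark, not from this sketch.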

Before this PR we called `heimdall.Synchronize` as part of `heimdall.CheckpointsFromBlock` and `heimdall.MilestonesFromBlock`. The previous implementation of `Synchronize` waited for all scrapers to be synchronised. This is inefficient because `heimdall.CheckpointsFromBlock` needs only the `checkpoints` scraper to be synchronised.

For the initial sync we only need to wait for the checkpoints to be downloaded, and then we can start downloading blocks from devp2p. While we are doing that, we can let the spans and milestones be scraped in the background. Note this is based on the fact that fetching checkpoints has been optimised by doing bulk fetching and finishes in seconds, while fetching Spans has not yet been optimised and for bor-mainnet can take a long time.

Changes in the PR:
- splits `Synchronize` into 3 more fine-grained calls, `SynchronizeCheckpoints`, `SynchronizeMilestones` and `SynchronizeSpans`, which are invoked by the Sync algorithm at the right time
- optimises `SynchronizeSpans` to check if it already has the corresponding span for the given block number before blocking
- moves the synchronisation point for Spans and State Sync Events into `Sync.commitExecution`, just before we call `ExecutionEngine.UpdateForkChoice`, to make it clearer what data needs to be synced before calling Execution
- changes `EventNotifier` and `Synchronize` funcs to return err if ctx is cancelled or other errors have happened
- input consistency between the `heimdallSynchronizer` and `bridgeSynchronizer`: use `blockNum` instead of `*type.Header`
- interface tidy-ups

- Make `Cell` unexported
- Remove `ProcessTree`/`Keys`/`Update`
- Reviewed and refreshed all unit/bench/fuzz tests related to commitment

erigontech#11326

Change test scheduling and timeouts after the introduction of Ottersync. We can now execute tests more frequently because test time has dropped significantly.

Scheduled to run every night:
- tip-tracking
- snap-download
- sync-from-scratch for mainnet, minimal node

Scheduled to run on Sunday:
- sync-from-scratch for testnets, archive node

- Collecting CPU and Memory usage info about all processes running on the machine
- Running the loop 5 times with a 2-second delay to calculate the average
- Sort by CPU usage
- Write result to report file

Result:

closes erigontech#11173

Adds tests for the Heimdall Service which cover:
- Milestone scraping
- Span scraping
- Checkpoint scraping
- `Producers` API: compares the results with results from the `bor_getSnapshotProposerSequence` RPC API

- Added totals for CPU and Memory usage to processes table
- Added CPU usage by cores

Example output:

Forgot to silence the logging in the heimdall service tests in a previous PR. The logging level can be tweaked when debugging is necessary.

Refactored table utils to add an option to generate a table and return it as a string, which will be used for saving data to a file.

…ot and added clearIndexing command (erigontech#11539)

Main checks:
* No gap in steps/blocks
* Check if all indexing is present
* Check if all idx, history, domain are present
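The "no gap" check can be pictured as a scan over sorted file ranges. The sketch below is an illustration under assumptions: `fileRange` and `findGaps` are hypothetical names, and ranges are taken to be half-open `[From, To)` intervals, which may differ from how the real check models steps and blocks.

```go
package main

import (
	"fmt"
	"sort"
)

// fileRange is a hypothetical [From, To) range covered by one file,
// expressed in steps or blocks.
type fileRange struct{ From, To uint64 }

// findGaps sorts the ranges by start and returns every uncovered interval
// that lies between them. Overlapping ranges are tolerated.
func findGaps(ranges []fileRange) []fileRange {
	sorted := append([]fileRange(nil), ranges...)
	sort.Slice(sorted, func(i, j int) bool { return sorted[i].From < sorted[j].From })

	var gaps []fileRange
	var covered uint64 // end of the contiguous coverage seen so far
	for i, r := range sorted {
		if i > 0 && r.From > covered {
			gaps = append(gaps, fileRange{From: covered, To: r.From})
		}
		if r.To > covered {
			covered = r.To
		}
	}
	return gaps
}

func main() {
	files := []fileRange{{0, 10}, {10, 20}, {25, 30}}
	for _, g := range findGaps(files) {
		fmt.Printf("gap: [%d, %d)\n", g.From, g.To)
	}
}
```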

closes erigontech#11177

- adds unwind logic to the new polygon sync stage which uses astrid
- seems like we've never done running for bor heimdall so removing empty funcs

Refactored printing cpu info:
- move CPU details to table
- move CPU usage next to details table
- refactor code

…ch#11549)

relates to: erigontech#10734, erigontech#11387

- restart Erigon with the `SAVE_HEAP_PROFILE = true` env variable
- wait until we reach 45% or more alloc in stage_headers when "noProgressCounter >= 5" or "Rejected header marked as bad"

and also move `design` into `docs` in order to reduce the number of top-level directories

Before, we had a transaction-wide cache (map). Now I'm changing it to EVM-wide. The EVM is a thread-unsafe object, so it's OK to use a thread-unsafe LRU. ExecV3 already uses 1 EVM per worker, which means the cache will be shared between blocks (not on chain-tip for now).

Bench:
- on `mainnet`: it shows a 12% improvement on a large `eth_getLogs` call (re-exec of a large historical range of blocks near block 6M), on hot state.

About chain-tip:
- don't see much impact (even if the cache is made global), because the current mainnet/bor-mainnet bottleneck is the "flush" of changes to the db. But `integration loop_exec --unwind=2` shows a 5% improvement.
- in a future PR we can share 1 LRU across many new blocks; currently a new one is created on every stage loop iteration.
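For readers unfamiliar with the trade-off, here is a minimal sketch of an LRU without any locking, which is safe only when a single goroutine owns it (as with one cache per worker). The `lru` type, its string keys and byte-slice values are assumptions for illustration; the commit does not show which LRU implementation or key type is used.

```go
package main

import (
	"container/list"
	"fmt"
)

type entry struct {
	key   string
	value []byte
}

// lru is a minimal least-recently-used cache with no mutex: it must be
// owned by exactly one goroutine.
type lru struct {
	capacity int
	order    *list.List // front = most recently used
	items    map[string]*list.Element
}

func newLRU(capacity int) *lru {
	return &lru{capacity: capacity, order: list.New(), items: make(map[string]*list.Element)}
}

// Get returns the cached value and marks the key as recently used.
func (c *lru) Get(key string) ([]byte, bool) {
	el, ok := c.items[key]
	if !ok {
		return nil, false
	}
	c.order.MoveToFront(el)
	return el.Value.(*entry).value, true
}

// Put stores the value and evicts the least recently used key when the
// cache grows beyond its capacity.
func (c *lru) Put(key string, value []byte) {
	if el, ok := c.items[key]; ok {
		el.Value.(*entry).value = value
		c.order.MoveToFront(el)
		return
	}
	c.items[key] = c.order.PushFront(&entry{key: key, value: value})
	if c.order.Len() > c.capacity {
		oldest := c.order.Back()
		c.order.Remove(oldest)
		delete(c.items, oldest.Value.(*entry).key)
	}
}

func main() {
	cache := newLRU(2)
	cache.Put("a", []byte{1})
	cache.Put("b", []byte{2})
	cache.Get("a")            // "a" becomes most recently used
	cache.Put("c", []byte{3}) // evicts "b"
	_, hasB := cache.Get("b")
	fmt.Println(hasB)
}
```

Unlike a per-transaction map, such a cache survives across transactions and blocks handled by the same worker, at the cost of bounded memory and eviction.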

…se workflow (erigontech#11848)

- New workflow `ci-cd-main-branch-docker-images.yml`
- New Dockerfile targets for the new workflow in `Dockerfile.release`
- Changes in release workflow: rename arg

See issue erigontech#10251 for more info.

Add tip-tracking test for bor-mainnet using a dedicated self-hosted runner

It is necessary when using temporal KV remotely.

Additional changes:
- remove what I think is an oversight in `IndexRange`, where `req.PageSize` was checked and cut to `PageSizeLimit`, but then not used (`PageSizeLimit` itself was used instead)
- remove useless `limit--` in `HistoryRange`
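The `IndexRange` oversight amounts to clamping a value and then ignoring the result. A small sketch of the intended pattern follows; `pageSizeLimit` and `effectivePageSize` are hypothetical, the limit value is illustrative, and treating a non-positive request as "use the limit" is an assumption.

```go
package main

import "fmt"

// pageSizeLimit is an illustrative value, not the constant used in Erigon.
const pageSizeLimit = 1000

// effectivePageSize clamps the requested page size to the limit. The
// returned value, not the limit itself, is what paging should then use.
func effectivePageSize(requested int) int {
	if requested <= 0 || requested > pageSizeLimit {
		return pageSizeLimit
	}
	return requested
}

func main() {
	// A small request is honoured; an oversized one is cut to the limit.
	fmt.Println(effectivePageSize(50), effectivePageSize(5000))
}
```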

and rename to `Reader/Writer`; remove the interfaces related to it, to improve inlining

- Fix on-trigger (correct branch)
- Grammar fixes

…ontech#11813) (erigontech#11866)

**Existing behaviour:**
- Add up the possible value that the user must pay beforehand to buy gas
- Deduct that amount from the sender's account in `intraBlockState`, but:
  - Don't deduct the gas value amount if the user doesn't have enough, and `gasBailout` is set

**New behaviour:**
- Don't check if the sender's balance is enough to pay the gas value amount, nor deduct it, if `gasBailout` is set

**More rationale**

This would mean the sender's account would show `"balance": "="` in the `trace_call` rpc method, that is, no change, if gas is the only thing the user pays for. This is fine because the gas price can fluctuate in a real transaction. This also removes the inconsistency of sometimes having to bother deducting the amount if it is less than the sender's balance, thereby causing a bug/inconsistency. (erigontech#11813)

…ch#11867)

fixes erigontech#11818

issue was:
- when at tip we receive new block hashes and new block events
- we had an if statement which checked if the canonical chain builder tip changed after connecting new headers to the tree
- that if statement was used to determine whether we should call `InsertBlocks` for the blocks we've just connected and also to `commitExecution` (call `UpdateForkChoice`)
- this meant that when at the tip, we would not insert new blocks which would not change the tip of the canonical chain builder
- this is wrong because we should be inserting these blocks as they may end up being on the canonical path several blocks later in case the forks change in their favour based on the connected ancestors

fix is:
- augment `canonicalChainBuilder.Connect` to return the newly connected headers to the tree
- always insert newly connected headers (upon successful connection to the root)
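The shape of the fix can be illustrated with a toy fork tree whose `Connect` returns the newly connected headers regardless of whether the tip moved. Everything below is a hypothetical simplification: the `header` and `chainBuilder` types are invented for the example, and "highest block number wins" stands in for the real fork choice rule.

```go
package main

import "fmt"

// header is a hypothetical minimal header.
type header struct {
	Hash, Parent string
	Number       uint64
}

// chainBuilder is a toy fork tree keyed by hash.
type chainBuilder struct {
	known map[string]header
	tip   header
}

func newChainBuilder(root header) *chainBuilder {
	return &chainBuilder{known: map[string]header{root.Hash: root}, tip: root}
}

// Connect attaches headers whose parent is already in the tree and returns
// the ones that were newly connected, whether or not the tip changed.
func (b *chainBuilder) Connect(headers []header) []header {
	var connected []header
	for _, h := range headers {
		if _, dup := b.known[h.Hash]; dup {
			continue // already in the tree
		}
		if _, ok := b.known[h.Parent]; !ok {
			continue // cannot be connected to the tree
		}
		b.known[h.Hash] = h
		connected = append(connected, h)
		if h.Number > b.tip.Number {
			b.tip = h
		}
	}
	return connected
}

func main() {
	b := newChainBuilder(header{Hash: "r", Number: 0})
	b.Connect([]header{{Hash: "a1", Parent: "r", Number: 1}, {Hash: "a2", Parent: "a1", Number: 2}})
	oldTip := b.tip

	// A sibling fork block does not move the tip, yet it is still returned
	// so the caller can insert it.
	newHeaders := b.Connect([]header{{Hash: "b1", Parent: "r", Number: 1}})
	fmt.Println(len(newHeaders), b.tip == oldTip)
}
```

With this shape the caller inserts whatever `Connect` returns and only uses the tip comparison to decide whether a fork choice update is needed.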

)

Next chain tip error caught and fixed for astrid stage integration:

```
append with gap blockNum=11561329, but current height=11561327
```

This happens after an unwind due to a fork change in the corresponding fork choice update. It is due to a bug in the logic of handling fork choice updates in the stage integration. The issue is that when processing the `cachedForkChoice` after we have done the unwind, `fixCanonicalChain` returns empty `newNodes` (correctly, since the chain was fixed before we cached the fork choice). The solution is to cache the new nodes as the `cachedForkChoice` so that when we process the cached fork choice in the next iteration we can correctly update the tx nums for the new nodes.

Full logs:

```
INFO[09-04|16:14:31.018] [2/6 PolygonSync] update fork choice block=11561328 age=0 hash=0x41ebb5e01406c1f013f06ee4e53ab68b125f071717d50bcdcfa4597a0a052cfe
INFO[09-04|16:14:31.019] [2/6 PolygonSync] new fork - unwinding and caching fork choice
DBUG[09-04|16:14:31.021] UnwindTo block=11561327 block_hash=0xc8ba20e1e4dc312bda4aadc5108722205693783b1c2d6103cb70949bda58a460 err=nil stack="[sync.go:171 stage_polygon_sync.go:1391 stage_polygon_sync.go:1356 stage_polygon_sync.go:1478 stage_polygon_sync.go:501 stage_polygon_sync.go:175 default_stages.go:479 sync.go:531 sync.go:410 stageloop.go:249 stageloop.go:101 asm_arm64.s:1222]"
DBUG[09-04|16:14:31.021] [2/6 PolygonSync] DONE in=5.45216175s
DBUG[09-04|16:14:31.021] [1/6 OtterSync] DONE in=21.167µs
INFO[09-04|16:14:31.021] [2/6 PolygonSync] forward progress=11561327
INFO[09-04|16:14:31.021] [2/6 PolygonSync] new fork - processing cached fork choice after unwind
INFO[09-04|16:14:31.022] [2/6 PolygonSync] update fork choice block=11561328 age=0 hash=0x41ebb5e01406c1f013f06ee4e53ab68b125f071717d50bcdcfa4597a0a052cfe
DBUG[09-04|16:14:31.022] [2/6 PolygonSync] DONE in=186.792µs
DBUG[09-04|16:14:31.022] [3/6 Senders] DONE in=236.458µs
INFO[09-04|16:14:31.024] [4/6 Execution] Done Commit every block blk=11561327 blks=1 blk/s=1125.7 txs=2 tx/s=2.25k gas/s=0 buf=0B/512.0MB stepsInDB=0.00 step=24.3 alloc=600.4MB sys=1.7GB
DBUG[09-04|16:14:31.024] [4/6 Execution] DONE in=2.020375ms
DBUG[09-04|16:14:31.024] [5/6 TxLookup] DONE in=74.292µs
DBUG[09-04|16:14:31.024] [6/6 Finish] DONE in=2.958µs
INFO[09-04|16:14:31.024] Timings (slower than 50ms) PolygonSync=5.452s alloc=600.5MB sys=1.7GB
DBUG[09-04|16:14:31.025] [6/6 Finish] Prune done in=5.625µs
DBUG[09-04|16:14:31.025] [5/6 TxLookup] Prune done in=237.084µs
DBUG[09-04|16:14:31.025] [4/6 Execution] Prune done in=65.958µs
DBUG[09-04|16:14:31.025] [3/6 Senders] Prune done in=2.75µs
DBUG[09-04|16:14:31.025] [2/6 PolygonSync] Prune done in=2.25µs
DBUG[09-04|16:14:31.025] [snapshots] Prune Blocks to=11559976 limit=10
DBUG[09-04|16:14:31.026] [snapshots] Prune Bor Blocks to=11559976 limit=10
DBUG[09-04|16:14:31.026] [1/6 OtterSync] Prune done in=1.334833ms
DBUG[09-04|16:14:31.154] [1/6 OtterSync] DONE in=6.792µs
INFO[09-04|16:14:31.154] [2/6 PolygonSync] forward progress=11561327
DBUG[09-04|16:14:33.030] [bridge] processing new blocks from=11561329 to=11561329 lastProcessedBlockNum=11561328 lastProcessedBlockTime=1725462871 lastProcessedEventID=2688
DBUG[09-04|16:14:33.030] [sync] inserted blocks len=1 duration=1.184125ms
DBUG[09-04|16:14:33.030] [bor.heimdall] synchronizing spans... blockNum=11561329
DBUG[09-04|16:14:33.031] [bridge] synchronizing events... blockNum=11561329 lastProcessedBlockNum=11561328
INFO[09-04|16:14:33.031] [2/6 PolygonSync] update fork choice block=11561329 age=0 hash=0x298f72d6fbbfdc8d3df098828867dea7e8e7bba787c1eb17f6c6025afa9ac3d1
WARN[09-04|16:14:33.032] [bor.heimdall] an error while fetching path=bor/latest-span queryParams= attempt=1 err="Get \"https://heimdall-api-amoy.polygon.technology/bor/latest-span\": context canceled"
DBUG[09-04|16:14:33.032] [bor.heimdall] request canceled reason="context canceled" path=bor/latest-span queryParams= attempt=1
EROR[09-04|16:14:36.032] [2/6 PolygonSync] stopping node err="append with gap blockNum=11561329, but current height=11561327, stack: [txnum.go:149 accessors_chain.go:703 stage_polygon_sync.go:1398 stage_polygon_sync.go:1356 stage_polygon_sync.go:1478 stage_polygon_sync.go:501 stage_polygon_sync.go:175 default_stages.go:479 sync.go:531 sync.go:410 stageloop.go:249 stageloop.go:101 asm_arm64.s:1222]"
DBUG[09-04|16:14:36.032] Error while executing stage err="[2/6 PolygonSync] stopped: append with gap blockNum=11561329, but current height=11561327, stack: [txnum.go:149 accessors_chain.go:703 stage_polygon_sync.go:1398 stage_polygon_sync.go:1356 stage_polygon_sync.go:1478 stage_polygon_sync.go:501 stage_polygon_sync.go:175 default_stages.go:479 sync.go:531 sync.go:410 stageloop.go:249 stageloop.go:101 asm_arm64.s:1222]"
DBUG[09-04|16:14:36.033] rpcdaemon: the subscription to pending blocks channel was closed
```

Should fix erigontech#11748 and erigontech#11670

---------

Co-authored-by: Mark Holt <[email protected]>

Added a notifier which signals that torrent downloading has completed.

---------

Co-authored-by: Mark Holt <[email protected]>

…igontech#11722)

As the value for each `to` address is not used, keep the same logic for `froms` and `tos`.

---------

Signed-off-by: jsvisa <[email protected]>


No description provided.