
Graphs and Charts for Buildbot: Application for GSoC 2015

- Name : Prasoon Shukla

- Email : [email protected]

- IRC : prasoon2211

Buildbot gathers large amounts of data from its builds. This data is generally a quantity that provides information about the build; a prominent example is the build time. At the moment, the user can only access this data for an individual build, not collectively across builds, and collective access is vital for any kind of analysis. There is also no way for the user to make Buildbot gather specialized data from the build; for example, timing a single function in the test suite is not possible.

This project aims to rectify both these problems. Specifically, it aims to:

- Allow the user to gather arbitrary statistics about a project (such as the build size).

- Allow collective access to build statistics via InfluxDB, and provide graphical analysis of this data via Grafana.

This can be a very beneficial feature for Buildbot users as it would:

- Allow users to measure arbitrary quantities from the build. So, a user can measure, for each build:
  - The final build size
  - Runtime of a single function
  - Total lines of code
  - Number of function calls made by a test, etc.

- Let users perform statistical analysis on this data using InfluxDB. InfluxDB has several statistical functions that operate on the collected data through its SQL-like query language and return results in tabular form. This can provide insights into the development process; for example, the user can calculate the mean build time over a given time period.

- Produce graphs from the gathered test data, which are great for visualizing changes to the project.
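To make the mean-build-time example concrete, here is a minimal Python sketch that builds the corresponding InfluxQL query string. It is a sketch under assumptions, all hypothetical. Each build time is written as a point in a measurement named `build_time`, with a numeric field called `value`. The syntax is InfluxQL as used by InfluxDB 0.9 and later. The function name `mean_query` is illustrative only.

```python
def mean_query(measurement: str, days: int, group_by_day: bool = False) -> str:
    """Return an InfluxQL query for the mean of the "value" field over the last `days` days.

    Hypothetical helper: the measurement/field layout is an assumption, and the
    measurement name is interpolated unescaped, so it must come from trusted config.
    """
    if days <= 0:
        raise ValueError("days must be a positive integer")
    # Double quotes mark identifiers (field and measurement names) in InfluxQL.
    query = f'SELECT MEAN("value") FROM "{measurement}" WHERE time > now() - {days}d'
    if group_by_day:
        # One mean per day instead of a single mean for the whole period.
        query += " GROUP BY time(1d)"
    return query


print(mean_query("build_time", 30))
# SELECT MEAN("value") FROM "build_time" WHERE time > now() - 30d
```

Adding `group_by_day=True` gives a daily series rather than a single number, which is the form a graphing tool would plot.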

The project will consist of the following tasks. Note that these are also logically separate, so they can act as microtasks that can be completed one step at a time.

Implementing the new `MetricEvent`s in the metrics module and updating the existing ones.

Currently, there are three different `MetricEvent`s in the metrics module which can be invoked to capture data:

- `MetricCountEvent`: for counting a quantity.
- `MetricTimeEvent`: for keeping track of the time a process takes. This `MetricEvent` keeps track of the last 10 reported values and reports their average.
- `MetricAlarmEvent`: for checking whether an event completed successfully. It has three values, `OK`, `WARN` and `CRITICAL`, representing the state of the system.
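To make the current in-memory behaviour concrete, here is a simplified model of the averaging that `MetricTimeEvent` is described as doing above. It keeps only the last 10 reported durations and reports their mean. `RollingTimer` is a hypothetical name, and this is not Buildbot's actual code. It only illustrates the kind of ad-hoc, in-memory storage that would move to InfluxDB.

```python
from collections import deque
from typing import Deque


class RollingTimer:
    """Hypothetical model of the described MetricTimeEvent reporting:
    keep the last `window` durations in memory and report their mean."""

    def __init__(self, window: int = 10) -> None:
        # deque(maxlen=...) silently drops the oldest entry once it is full.
        self._times: Deque[float] = deque(maxlen=window)

    def log(self, elapsed: float) -> None:
        self._times.append(elapsed)

    def average(self) -> float:
        if not self._times:
            raise ValueError("no values reported yet")
        return sum(self._times) / len(self._times)


timer = RollingTimer()
for seconds in range(1, 13):  # report durations 1.0 .. 12.0
    timer.log(float(seconds))
print(timer.average())  # 7.5 (mean of the last ten values, 3.0 .. 12.0)
```

Once more than ten values have been logged, older values fall out of the window. This is why such storage cannot answer questions about long-term history.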

The first thing to do is to remove the ad-hoc, in-memory storage implemented in these `MetricEvent`s and move the storage to InfluxDB via its API. Reporting will be done by querying InfluxDB for the required values via the InfluxDB Python API. These methods (for querying and storage) will be implemented within the respective `MetricEvent` subclasses.

Also, since these three `MetricEvent`s don't cover all situations, I think the metrics module would benefit from the addition of the following statistical `MetricEvent`s (statistical because they are analogs of standard statistical functions):

- `MetricAverageEvent`: gathers the reported statistic and produces the average of all values ever reported. For this to work, it will need to interact with InfluxDB via its API; InfluxDB will do the job of storing the values and reporting the average.
- `MetricDeviationEvent`: exactly like `MetricAverageEvent`, but reports the standard deviation instead.
- `MetricMinEvent`: stores all reported values in InfluxDB but reports the minimum using an InfluxDB aggregate function.
- `MetricMaxEvent`: similar to `MetricMinEvent`, except that it reports the maximum value.
- `MetricAbsCountEvent`: just like `MetricCountEvent`, except that it functions as an absolute value accumulator.
- `MetricDifferenceEvent`: records the signed difference between the reported value and the last reported value.

These are some basic `MetricEvent`s that act as analogs of standard statistical methods. More such methods could be added later on as needed, but I believe these will suffice for almost all build data analysis needs.
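For reference, the sketch below computes in memory the values that the proposed average, deviation, minimum, maximum and difference events would report. In the proposal itself, InfluxDB's aggregate functions would compute these server-side. The function names are hypothetical. The standard deviation shown is the sample standard deviation, and whether that matches the aggregate eventually used is an assumption to check. The absolute-count event is not modelled.

```python
import statistics
from typing import Dict, List


def summarize(values: List[float]) -> Dict[str, float]:
    """Hypothetical reference results for the proposed statistical events."""
    if not values:
        raise ValueError("need at least one value")
    result = {
        "average": statistics.mean(values),  # MetricAverageEvent
        "min": min(values),                  # MetricMinEvent
        "max": max(values),                  # MetricMaxEvent
    }
    if len(values) >= 2:
        # Sample standard deviation (n - 1 in the denominator); needs two values.
        result["deviation"] = statistics.stdev(values)
    return result


def differences(values: List[float]) -> List[float]:
    """Signed change of each reported value from the previously reported one."""
    return [cur - prev for prev, cur in zip(values, values[1:])]


build_times = [120.0, 130.0, 110.0, 140.0]
print(summarize(build_times))
print(differences(build_times))  # [10.0, -20.0, 30.0]
```

Having such a reference implementation is also handy in tests: the numbers InfluxDB returns for a known input series can be compared against it.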

Changing Steps for the collection of statistics.

At the moment, Steps run the commands provided by users and try to collect as much data from the command output as possible. Steps also measure how long they took to run. All of this data gets stored in the build properties. To get this data into InfluxDB, we will need to modify Steps by adding a call into the metrics module for storage. For example, say we're modifying `steps.PyLint`. `PyLint.createSummary` calls `setProperty` to set build properties, so we'll modify this method to also call into the metrics module. For instance, `MetricCountEvent.log('Pylint warnings', warnings)` would store the number of warnings caught by PyLint.
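Here is a minimal sketch of the pattern described above, with hypothetical names throughout. Wherever a step records a number as a build property, it also forwards the same name and value to the metrics module. The class below is not Buildbot's `PyLint` step. A plain dictionary stands in for build properties, and an injected callable stands in for a call such as `MetricCountEvent.log`.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-in for a metrics call such as MetricCountEvent.log(name, value).
MetricLogger = Callable[[str, int], None]


class PyLintSummarySketch:
    """Not Buildbot's PyLint step: models a summary that both records build
    properties and forwards the same numbers to the metrics module."""

    def __init__(self, log_metric: MetricLogger) -> None:
        self.properties: Dict[str, int] = {}
        self.log_metric = log_metric

    def record(self, name: str, value: int) -> None:
        self.properties[name] = value  # stands in for the existing setProperty(...) call
        self.log_metric(name, value)   # proposed addition: forward to the metrics module

    def createSummary(self, counts: Dict[str, int]) -> None:
        for kind, count in counts.items():
            self.record(f"pylint-{kind}", count)
        self.record("pylint-total", sum(counts.values()))


logged: List[Tuple[str, int]] = []
step = PyLintSummarySketch(lambda name, value: logged.append((name, value)))
step.createSummary({"warning": 3, "error": 1})
print(step.properties)  # {'pylint-warning': 3, 'pylint-error': 1, 'pylint-total': 4}
print(logged)
```

Injecting the logger also makes the modified step easy to test without a running InfluxDB.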

Implementing a way for users to gather arbitrary statistics.

This is another objective of this project. As Buildbot cannot measure every conceivable build statistic on its own, we want to let the user report such statistics to us. Let us take the example of the final build size to see how this will work:

- First, the user creates a small script (shell, batch, Python or anything else) that, when run, prints the build size to stdout.
- Then, the user runs this script in a new Step, `MeasureShellCommand`, which will subclass `ShellCommand`. Compared to `ShellCommand`, this Step takes one extra argument from the user: a unique string identifier for this data. For example, here the user would add this to their `master.cfg`: `f.addStep(steps.MeasureShellCommand(command=["sh", "scripts/size.sh"], id="build_size"))`
- Finally, once the script finishes and writes its output to stdout, `MeasureShellCommand` captures this output and passes it on to the metrics middleware for storage in InfluxDB.

I've provided another such example in this thread.
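The sketch below illustrates both halves of the build-size example in Python. `build_size` is what a user's measurement script might compute and print. `parse_measurement` is one way a `MeasureShellCommand`-like step could turn the captured stdout into a `(key, value)` pair. Both names are hypothetical. The choice to read the last non-empty output line, which lets a script print progress messages first, is also an assumption. The step's proposed `id` argument corresponds to `metric_id` here.

```python
import os
import tempfile
from typing import Tuple


def build_size(path: str) -> int:
    """What a user's size script might print: total bytes of regular files under `path`."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            full = os.path.join(root, name)
            if not os.path.islink(full):  # skip symlinks so targets are not counted twice
                total += os.path.getsize(full)
    return total


def parse_measurement(metric_id: str, stdout: str) -> Tuple[str, float]:
    """Hypothetical parsing inside the step: the last non-empty line must be a number."""
    lines = [line.strip() for line in stdout.splitlines() if line.strip()]
    if not lines:
        raise ValueError(f"{metric_id}: command produced no output")
    try:
        return metric_id, float(lines[-1])
    except ValueError:
        raise ValueError(f"{metric_id}: last line {lines[-1]!r} is not a number") from None


# Demo on a throwaway directory standing in for a build output tree.
with tempfile.TemporaryDirectory() as tmp:
    os.makedirs(os.path.join(tmp, "lib"))
    with open(os.path.join(tmp, "app.bin"), "wb") as f:
        f.write(b"\0" * 100)
    with open(os.path.join(tmp, "lib", "dep.bin"), "wb") as f:
        f.write(b"\0" * 50)
    size = build_size(tmp)

print(size)  # 150
print(parse_measurement("build_size", f"packaging...\n{size}\n"))  # ('build_size', 150.0)
```

Failing loudly on non-numeric output seems preferable to silently storing nothing, since a broken measurement script would otherwise go unnoticed.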

Changing the frontend to include links to the InfluxDB and Grafana instances.

We'll read the URLs of the InfluxDB and Grafana instances from `master.cfg` and add a link to each of them in the Buildbot frontend.
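Here is a sketch of how the two URLs could be validated before the frontend renders them. `master.cfg` is plain Python that fills a `BuildmasterConfig` dictionary, conventionally aliased as `c`. The keys `influxdb_url` and `grafana_url` and the function `frontend_links` are hypothetical, not existing Buildbot settings.

```python
from typing import Dict, List, Tuple
from urllib.parse import urlparse


def frontend_links(config: Dict[str, str]) -> List[Tuple[str, str]]:
    """Collect (label, url) pairs for the web UI from hypothetical master.cfg keys."""
    links = []
    for key, label in (("influxdb_url", "InfluxDB"), ("grafana_url", "Grafana")):
        url = config.get(key)
        if url is None:
            continue  # not configured: simply show no link
        parsed = urlparse(url)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            raise ValueError(f"{key} must be an absolute http(s) URL, got {url!r}")
        links.append((label, url))
    return links


# In master.cfg this would be the BuildmasterConfig dict; keys are assumptions.
c = {"grafana_url": "http://grafana.example.org:3000/"}
print(frontend_links(c))  # [('Grafana', 'http://grafana.example.org:3000/')]
```

Validating at config-load time means a typo in `master.cfg` surfaces when the master starts, rather than as a broken link in the UI.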

Storage via metrics in InfluxDB.

InfluxDB is a schemaless database, so data is stored internally as key-value pairs. For our storage policy, we will either:

- take the key (the name of a statistic) from the user, as in the case of `MeasureShellCommand`, or
- provide a key (such as the build property names) from within Buildbot itself.

Then, storage is as simple as making a call to the InfluxDB Python API.
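Here is a minimal sketch of the storage call. It assumes the `influxdb` Python package (influxdb-python) and its `InfluxDBClient.write_points`, which accepts a list of point dictionaries with `measurement`, `tags`, `time` and `fields` keys. The helper `make_point`, the `builder` tag and the `build_number` field are assumptions for illustration. The value is always stored as a float. InfluxDB fixes a field's type per measurement, so mixing integers and floats under one field name can lead to field type conflicts.

```python
from datetime import datetime, timezone
from typing import Dict, Optional, Union


def make_point(key: str, value: Union[int, float], builder: str, build_number: int,
               timestamp: Optional[float] = None) -> Dict:
    """Hypothetical helper: one point in the dict shape used by write_points()."""
    when = (datetime.now(timezone.utc) if timestamp is None
            else datetime.fromtimestamp(timestamp, tz=timezone.utc))
    return {
        "measurement": key,              # the statistic's name, e.g. "build_size"
        "tags": {"builder": builder},    # tags are indexed, useful for GROUP BY
        "time": when.strftime("%Y-%m-%dT%H:%M:%SZ"),
        "fields": {
            "value": float(value),       # always float to avoid field type conflicts
            "build_number": build_number,
        },
    }


point = make_point("build_size", 20480, builder="runtests", build_number=42, timestamp=0)
print(point["time"])  # 1970-01-01T00:00:00Z

# With the real client (not executed here; host and database name are assumptions):
# from influxdb import InfluxDBClient
# client = InfluxDBClient("localhost", 8086, database="buildbot")
# client.write_points([point])
```

Keeping the point construction separate from the client call lets it be unit-tested without an InfluxDB server.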


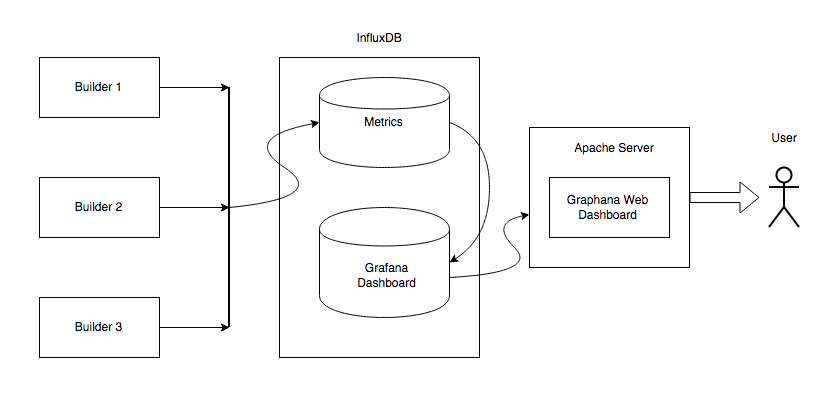

Here's a simple diagram that illustrates the flow of data:

This project will require a lot of documentation to be usable. As such, I'll provide documentation on the following topics:

- Installation of InfluxDB and Grafana
- Configuring InfluxDB, Grafana and Apache (to serve Grafana)
- How to gather arbitrary statistics, along with an example or two
- Configuration of `master.cfg`
- The new Step, `MeasureShellCommand`

Developer documentation will need to cover all configuration options, all changes to the Steps, all the new `MetricEvent`s, and how to add new Steps that extend support to new testing frameworks, linters, etc.

Tests will need to be added for each `MetricEvent` to check whether the data fed to InfluxDB is actually stored. These tests will verify each insertion by retrieving the data through InfluxDB's REST API and matching it against what was inserted.

We'll also need tests to confirm that the Steps still work properly after modification. For this, we can check whether the data relayed from the Steps to the metrics module is stored in InfluxDB, again using the InfluxDB REST API to confirm the insertion.
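As a lighter-weight complement to the REST API round trip described above, here is a sketch of a unit test that swaps the live InfluxDB for an in-memory fake client. All class names are hypothetical. This does not replace the integration test against a real InfluxDB; it only checks that an event writes what it was given. It uses the standard `unittest` module, whose test cases Twisted Trial (Buildbot's test runner) can also run.

```python
import unittest
from typing import Dict, List


class FakeInflux:
    """In-memory stand-in for an InfluxDB client: records written points."""

    def __init__(self) -> None:
        self.points: List[Dict] = []

    def write_points(self, points: List[Dict]) -> bool:
        self.points.extend(points)
        return True

    def values(self, measurement: str) -> List[float]:
        # Trivial "query": every stored value of one measurement, in write order.
        return [p["fields"]["value"] for p in self.points if p["measurement"] == measurement]


class HypotheticalCountEvent:
    """Hypothetical InfluxDB-backed count event: each log() writes one point."""

    def __init__(self, client: FakeInflux) -> None:
        self.client = client

    def log(self, name: str, count: int) -> None:
        self.client.write_points(
            [{"measurement": name, "tags": {}, "fields": {"value": float(count)}}])


class CountEventStorageTest(unittest.TestCase):
    def test_logged_values_are_stored(self) -> None:
        client = FakeInflux()
        event = HypotheticalCountEvent(client)
        event.log("pylint-warning", 3)
        event.log("pylint-warning", 5)
        self.assertEqual(client.values("pylint-warning"), [3.0, 5.0])

    def test_measurements_are_kept_apart(self) -> None:
        client = FakeInflux()
        event = HypotheticalCountEvent(client)
        event.log("pylint-warning", 3)
        event.log("pylint-error", 1)
        self.assertEqual(client.values("pylint-error"), [1.0])
```

Saved as a test module, this runs with `python -m unittest` (or `trial`).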

First, I'd like to stress that this is purely a stretch goal: the port is possible only if there's enough time towards the end. That said, here are my thoughts on it:

I've briefly read the Eight documentation for Steps and also ran a `git diff` on `master/buildbot/steps/`. From this, I gather that the underlying architecture of Steps does not change: in Eight, as in Nine, Steps capture data and feed it to the build's `setProperty`. So we can update Steps in Eight just as we do for Nine, and have them pass the captured values to the metrics module (as described in the tasks above).

The metrics module itself is relatively unchanged from Eight to Nine. As such, we should be able to copy all the work from Nine (including tests and documentation) into Eight.

So I think that once the project is implemented for Nine, it can be ported to Eight without too much hassle, although I cannot yet estimate how long it will take. Nevertheless, I have allowed time for it in the timeline.

Here's a week-by-week division of the tasks described above. Before any work starts, during the community bonding period (27th Apr - 25th May), I will work with my mentor to finalise any small details that remain. I'll also read the Buildbot, InfluxDB and Grafana documentation extensively.

| Week | Work to be done |
|---|---|
| Week 1 (May 25 - May 31) | Work on implementing the new MetricEvents and updating the old MetricEvents to work with InfluxDB. Write tests. |
| Weeks 2 and 3 (June 1 - June 14) | Update all existing Steps to make calls into the metrics module middleware for feeding build statistics to InfluxDB. Write tests. |
| Week 4 (June 15 - June 21) | Implement MeasureShellCommand for capturing arbitrary statistics. Write tests. |
| Week 5 (June 22 - June 28) | Write documentation for the work done until now. |
| Midterm evaluation | Milestones: |
| | 1) The metrics module can now interface with InfluxDB to store data. |
| | 2) Steps can store gathered statistics in InfluxDB via the metrics module middleware. |
| | 3) MeasureShellCommand is implemented and can be used for gathering arbitrary statistics. |
| | 4) Documentation and tests have been written for the work done until now. |
| Week 6 (June 28 - July 5) | Set up a local Grafana instance to work with InfluxDB. Also, write documentation for setting up Grafana with Apache. |
| Week 7 (July 6 - July 13) | Modify the frontend to include links to the Grafana instance. |
| Week 8 (July 13 - July 19) | At this point, the project is nearly done. I'll use this week to deploy the project for the Buildbot master and fix any bugs encountered. |
| Weeks 9 and 10 (July 20 - August 9) | Port to Eight (see the heading 'Porting to Eight') [1] |
| | 1) Port Metrics, along with tests, in 2-3 days. |
| | 2) Port the updated Steps as well as MeasureShellCommand, with tests. |
| | 3) Test deployment for the Buildbot master. |
| Weeks 11 and 12 (August 10 - August 21) | Buffer time for any unexpected eventualities. Also, use this time to polish the code and make it meet Buildbot coding standards. |
| Final evaluation | All project goals (except possibly porting) finished, and a working instance deployed for the Buildbot master. |

[1]: I realize that two weeks might be too little time for porting. That said, I would like to stress again that porting is a stretch goal and might not be finished entirely during the summer.

How much time can you devote to your work on Buildbot on a daily basis? Do you have another summer job or other obligations?

I can devote 7-8 hours a day on average, except on weekends, when I'll work less (3-4 hours). I don't have any summer internships lined up. I will, however, be relatively occupied from 26th to 29th May.

Do you have any other time commitments over the summer?

Except for some light travelling for a week in July (during which I'll still work), I will be free for the rest of the summer. Note also that my classes resume around 21st July (by which time most of the work will be done), so I'll be able to work somewhat less after that. I have made my timeline with this in mind.

I have been working with Python as my primary language for the last three years, and I believe I know it quite well. Since this project is almost entirely in Python, this experience will come in quite handy. I am also comfortable with JavaScript (though I don't know it as well as Python), which will be part of this project towards the end, when I modify the frontend to include links to the Grafana instance.

I have previously worked on both open source and closed source projects, both mostly in Python.

I've contributed code to several open-source projects before. Chief among them is SymPy [1], in which I participated as a student in GSoC 2013 [2]; that project went well. I've also contributed to Mercurial [3], Servo (Mozilla's new browser engine, written in Rust) [4], Joomla [5], WordPress [6], Django [7], SimpleCV [8], django-browserid [9] and sugarlabs [10]. I also participated as a GSoC 2014 student for sugarlabs [11], which didn't go quite as well as my first GSoC. The project was rather small and I completed it successfully, but it never got deployed because I never got the web hosting it required (to run a Docker container).

My closed-source work consists of three Python/Django applications made for my college's intranet: a small online marketplace for buying and selling used goods, a college-wide website for reporting lost and found items, and a portal for collecting student responses about faculty and courses. I did this work as part of a campus group that builds web applications for the residents of our college campus; we also made our institute's main website. However, I cannot share the code for this work, since it is closed source and belongs to the institute.

My GitHub profile : @prasoon2211

I am studying Applied Mathematics at the Indian Institute of Technology Roorkee, and I am doing reasonably well here.

Links:

- [1]: Some important patches: One, Two, Three, Four
- [2]: Majority of code, Official link
- [3]: One, Two, Three, Merged Patch
- [4]: Merged PR
- [5]: Several PRs
- [6]: One, Two
- [7]: Django, Patch
- [8]: One, Two, Three, Four
- [9]: django-browserid, Patch
- [10]: One, Two
- [11]: Official link

I am planning to set up a Buildbot instance for Django (I'm not affiliated with it, but I've worked with it a lot), hosted on AWS. I'll probably run both the master and the slave on the same machine. I'll link the public URL here once it's done.