MMPose v1.2.0 Release Note

RTMW

We are excited to release the alpha version of RTMW:

- The first whole-body pose estimation model to exceed 70 AP on the COCO-WholeBody benchmark: RTMW-x achieves 70.2 AP.

- More accurate hand details for pose-guided image generation, gesture recognition, human-computer interaction, and similar applications.

- Compatible with the dw_openpose_full preprocessor in sd-webui-controlnet.

- Try it online with this demo by choosing wholebody(rtmw).

- The technical report will be released soon.

New Algorithms

We are glad to support the following new algorithms:

- (ICCV 2023) MotionBERT

- (ICCVW 2023) DWPose

- (ICLR 2023) EDPose

- (ICLR 2022) Uniformer

(ICCVW 2023) DWPose

We are glad to support the two-stage distillation method DWPose, which achieves new SOTA performance on COCO-WholeBody.

- Since DWPose is the distilled model of RTMPose, you can directly load the weights of DWPose into RTMPose.

- DWPose has been supported in sd-webui-controlnet.

- You can also try DWPose online with this demo by choosing wholebody(dwpose).

Here is a guide to train DWPose:

- Train DWPose with the first stage distillation

      bash tools/dist_train.sh configs/wholebody_2d_keypoint/dwpose/ubody/s1_dis/rtmpose_x_dis_l_coco-ubody-384x288.py 8

- Transfer the S1 distillation models into regular models

      # first stage distillation
      python pth_transfer.py $dis_ckpt $new_pose_ckpt

- Train DWPose with the second stage distillation

      bash tools/dist_train.sh configs/wholebody_2d_keypoint/dwpose/ubody/s2_dis/dwpose_l-ll_coco-ubody-384x288.py 8

- Transfer the S2 distillation models into regular models

      # second stage distillation
      python pth_transfer.py $dis_ckpt $new_pose_ckpt --two_dis

- Thanks @yzd-v for helping with the integration of DWPose!
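The four steps of the training guide above can also be scripted. The following is a minimal sketch, not an official MMPose tool: it only assembles the commands from the guide as argument lists and prints them. The function name `dwpose_commands` and the checkpoint paths under `work_dirs/` are hypothetical placeholders; the config paths, the GPU count of 8, and the `--two_dis` flag are taken from the guide.

```python
import shlex

# Config paths as given in the training guide.
S1_CONFIG = ("configs/wholebody_2d_keypoint/dwpose/ubody/s1_dis/"
             "rtmpose_x_dis_l_coco-ubody-384x288.py")
S2_CONFIG = ("configs/wholebody_2d_keypoint/dwpose/ubody/s2_dis/"
             "dwpose_l-ll_coco-ubody-384x288.py")


def dwpose_commands(s1_dis_ckpt, s1_pose_ckpt, s2_dis_ckpt, s2_pose_ckpt, gpus=8):
    """Return the four guide commands, in order, as argument lists."""
    return [
        # Step 1: first stage distillation training.
        ["bash", "tools/dist_train.sh", S1_CONFIG, str(gpus)],
        # Step 2: convert the S1 distillation checkpoint into a regular model.
        ["python", "pth_transfer.py", s1_dis_ckpt, s1_pose_ckpt],
        # Step 3: second stage distillation training.
        ["bash", "tools/dist_train.sh", S2_CONFIG, str(gpus)],
        # Step 4: convert the S2 distillation checkpoint (note --two_dis).
        ["python", "pth_transfer.py", s2_dis_ckpt, s2_pose_ckpt, "--two_dis"],
    ]


# Hypothetical checkpoint locations, used only to show the output format.
for cmd in dwpose_commands("work_dirs/s1/dis.pth", "work_dirs/s1/pose.pth",
                           "work_dirs/s2/dis.pth", "work_dirs/s2/pose.pth"):
    print(shlex.join(cmd))
```

Each argument list can be passed to `subprocess.run(cmd, check=True)` from the MMPose repository root, so that a failing step stops the pipeline before the next one starts.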

(ICCV 2023) MotionBERT

MotionBERT is the new SOTA method for monocular 3D human pose estimation on Human3.6M.

You can conveniently try MotionBERT via the 3D Human Pose Demo with Inferencer:

python demo/inferencer_demo.py tests/data/coco/000000000785.jpg \
    --pose3d human3d --vis-out-dir vis_results/human3d

- Supported by @LareinaM
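The same demo can presumably be driven from Python through the `MMPoseInferencer` class that the demo script builds on. The sketch below assumes MMPose 1.2.0 and its dependencies are installed; the helper names `human3d_settings` and `run_human3d` are hypothetical and exist only for illustration. The import is deferred so that the settings helper can be inspected without MMPose present.

```python
def human3d_settings(out_dir="vis_results/human3d"):
    """Mirror the CLI flags of the demo as Python keyword arguments."""
    init_kwargs = {"pose3d": "human3d"}    # corresponds to --pose3d human3d
    call_kwargs = {"vis_out_dir": out_dir}  # corresponds to --vis-out-dir
    return init_kwargs, call_kwargs


def run_human3d(image_path):
    """Run the 3D pose demo on one image and return the first result."""
    # Deferred import: requires an installed MMPose.
    from mmpose.apis import MMPoseInferencer

    init_kwargs, call_kwargs = human3d_settings()
    inferencer = MMPoseInferencer(**init_kwargs)
    # Calling the inferencer returns a generator; next() triggers inference.
    result_generator = inferencer(image_path, **call_kwargs)
    return next(result_generator)
```

Calling `run_human3d("tests/data/coco/000000000785.jpg")` from the repository root should be equivalent to the command above, with the visualization written to `vis_results/human3d`.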

(ICLR 2023) EDPose

We support ED-Pose, an end-to-end framework with Explicit box Detection for multi-person Pose estimation. ED-Pose re-considers this task as two explicit box detection processes with a unified representation and regression supervision. In general, ED-Pose is conceptually simple without post-processing and dense heatmap supervision.

The checkpoint is converted from the official repo. Training EDPose is not supported yet; it will be supported in a future update.

You can conveniently try EDPose via the 2D Human Pose Demo with Inferencer:

python demo/inferencer_demo.py tests/data/coco/000000197388.jpg \
    --pose2d edpose_res50_8xb2-50e_coco-800x1333 --vis-out-dir vis_results

- Thanks @LiuYi-Up for helping with the integration of EDPose!

- This is a task from our OpenMMLabCamp. If you would also like to contribute code, feel free to refer to this link to pick up a task!

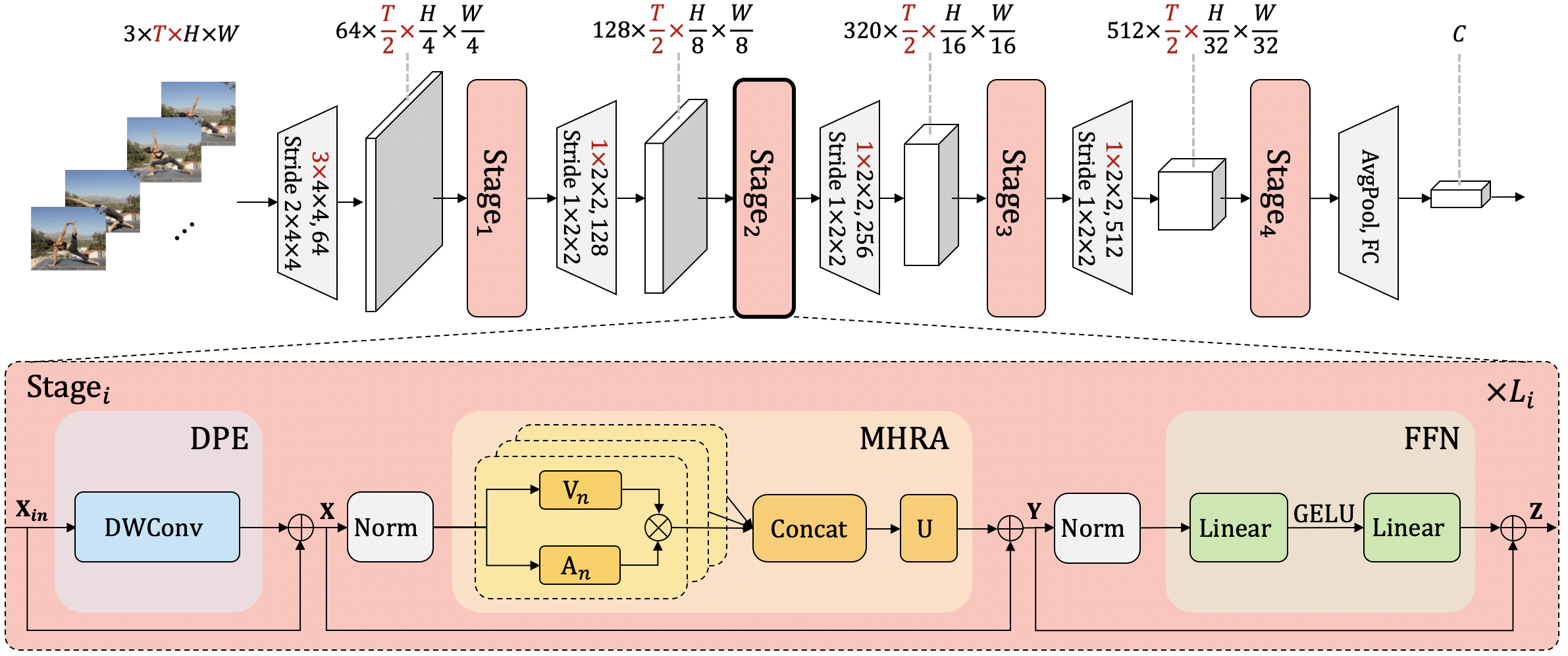
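Because ED-Pose is a multi-person estimator, a natural follow-up to the demo is to inspect how many people were detected. The sketch below is an illustration under stated assumptions, not part of MMPose: it assumes the Inferencer result is a dict whose `predictions` entry holds, per image, a list of instances with a `keypoint_scores` list, and the helper names `count_confident_people` and `run_edpose` as well as the 0.5 threshold are hypothetical.

```python
def count_confident_people(result, min_score=0.5):
    """Count instances whose mean keypoint score reaches min_score."""
    instances = result["predictions"][0]  # assumed: first image in the batch
    count = 0
    for instance in instances:
        scores = instance["keypoint_scores"]
        if len(scores) > 0 and sum(scores) / len(scores) >= min_score:
            count += 1
    return count


def run_edpose(image_path, out_dir="vis_results"):
    """Run the EDPose demo model on one image and return the first result."""
    # Deferred import: requires an installed MMPose.
    from mmpose.apis import MMPoseInferencer

    inferencer = MMPoseInferencer(pose2d="edpose_res50_8xb2-50e_coco-800x1333")
    return next(inferencer(image_path, vis_out_dir=out_dir))
```

With MMPose installed, `count_confident_people(run_edpose("tests/data/coco/000000197388.jpg"))` would report how many detected people pass the chosen threshold.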

(ICLR 2022) Uniformer

In projects, we implement a top-down heatmap-based human pose estimator, following the approach outlined in UniFormer: Unifying Convolution and Self-attention for Visual Recognition (TPAMI 2023) and UniFormer: Unified Transformer for Efficient Spatiotemporal Representation Learning (ICLR 2022).

- Thanks @xin-li-67 for helping with the integration of Uniformer!

- This is a task from our OpenMMLabCamp. If you would also like to contribute code, feel free to refer to this link to pick up a task!

New Datasets

We have added support for two new datasets:

(CVPR 2023) UBody

UBody can boost 2D whole-body pose estimation and controllable image generation, especially for in-the-wild hand keypoint detection.

- Supported by @xiexinch

300W-LP

300W-LP contains large-pose face images synthesized from 300W.

- Thanks @Yang-Changhui for helping with the integration of 300W-LP!

- This is a task from our OpenMMLabCamp. If you would also like to contribute code, feel free to refer to this link to pick up a task!