forked from ZJONSSON/parquetjs


Add ability to read decimal columns (#79)

Problem
=======

Parquet files often contain columns of type `decimal`. Currently, `decimal` columns cannot be read.

Solution
========

I implemented the code required to read (but not write) decimal columns correctly, without any external libraries.
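For context, the Parquet format specification defines `DECIMAL` as a logical type over an unscaled integer: a stored integer `u` represents `u × 10^(-scale)`. `INT32` can hold a precision of up to 9 digits and `INT64` up to 18; larger precisions use `FIXED_LEN_BYTE_ARRAY` or `BYTE_ARRAY`. The sketch below illustrates those two rules in plain JavaScript. `physicalTypeForPrecision` and `decimalFromUnscaled` are hypothetical helpers, not functions from this commit or from parquetjs, and returning a JavaScript `Number` is a simplifying assumption that loses exactness once an unscaled value exceeds 2^53.

```javascript
// Hypothetical helpers illustrating the Parquet DECIMAL rules; they are not
// part of parquetjs or of this commit.

// Physical type for a DECIMAL of the given precision: the smallest integer
// type that fits, or a byte array once 18 digits are exceeded.
function physicalTypeForPrecision(precision) {
  if (!Number.isInteger(precision) || precision < 1) {
    throw new RangeError(`invalid DECIMAL precision: ${precision}`);
  }
  if (precision <= 9) return 'INT32';
  if (precision <= 18) return 'INT64';
  // Beyond 18 digits the spec uses FIXED_LEN_BYTE_ARRAY or BYTE_ARRAY.
  return 'FIXED_LEN_BYTE_ARRAY';
}

// Logical value of an unscaled integer: unscaled * 10^(-scale).
// Accepts a number (INT32) or a bigint (INT64); the result is a JS Number,
// so very large INT64 values may lose exactness.
function decimalFromUnscaled(unscaled, scale) {
  if (!Number.isInteger(scale) || scale < 0) {
    throw new RangeError(`invalid DECIMAL scale: ${scale}`);
  }
  return Number(unscaled) / 10 ** scale;
}

console.log(physicalTypeForPrecision(6));    // INT32
console.log(physicalTypeForPrecision(10));   // INT64
console.log(decimalFromUnscaled(123456, 4)); // 12.3456
```

Under these rules, a column such as `under_9_digits` (precision 6) fits in `INT32`, while `over_9_digits` (precision 10) cannot be stored as `INT32`.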

Change summary:
---------------

* This took a lot of trial and error, hence the large number of commits.
* Modified `lib/codec/types.ts` to add `precision` and `scale` properties
  to the `Options` interface, for use when decoding column data.
* Modified `lib/declare.ts` to allow `Decimal` in `OriginalType`, and
  added `precision` and `scale` to `FieldDefinition` and `ParquetField`.
* In `plain.ts`, modified `decodeValues_INT32` and `decodeValues_INT64` to
  accept options, so they can determine the column type and, if it is
  `DECIMAL`, call `decodeValues_DECIMAL`, which decodes the column using
  the `precision` and `scale` configured in the options object.
* Modified `lib/reader.ts` to set `originalType`, `precision`, `scale` and
  `name` in `decodePage`, and `precision` and `scale` in `decodeSchema`, so
  that this metadata is read from the Parquet file and used when decoding
  a decimal column.
* Modified `lib/schema.ts` to specify what a Parquet file must provide for
  a decimal column to be processed correctly, and to pass along
  `precision` and `scale` when a column defines them.
* Added a `DECIMAL` configuration to `PARQUET_LOGICAL_TYPES`.
* Updated `test/decodeSchema.js` to expect `precision` and `scale` to be
  `null`, since they are now set to `null` for non-decimal types.
* Added decimal-specific tests in `test/reader.js` and `test/schema.js`.
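The `plain.ts` change can be pictured roughly as follows. This is a JavaScript sketch under stated assumptions, not the code from the commit: the function names, the `{ originalType, precision, scale }` options shape, and reading from a Node.js `Buffer` instead of the library's own cursor object are all hypothetical. It relies only on Parquet's PLAIN encoding, which stores `INT32` and `INT64` values as consecutive little-endian integers.

```javascript
// Hypothetical sketch of the INT32/INT64 -> DECIMAL dispatch; names and the
// options shape are assumptions, not the actual lib/codec/plain.ts API.

// Scale unscaled integers (number or bigint) by 10^-scale.
// Precision is not needed to compute the value, only the scale.
function decodeValuesDecimal(unscaledValues, opts) {
  const divisor = 10 ** (opts.scale || 0);
  return unscaledValues.map((v) => Number(v) / divisor);
}

// PLAIN-encoded INT32: consecutive 4-byte little-endian signed integers.
function decodeValuesInt32(buf, count, opts = {}) {
  const values = [];
  for (let i = 0; i < count; i++) {
    values.push(buf.readInt32LE(i * 4));
  }
  return opts.originalType === 'DECIMAL' ? decodeValuesDecimal(values, opts) : values;
}

// PLAIN-encoded INT64: consecutive 8-byte little-endian signed integers (as bigint).
function decodeValuesInt64(buf, count, opts = {}) {
  const values = [];
  for (let i = 0; i < count; i++) {
    values.push(buf.readBigInt64LE(i * 8));
  }
  return opts.originalType === 'DECIMAL' ? decodeValuesDecimal(values, opts) : values;
}

// Example: two INT32 values in a column with precision 6 and scale 4.
const buf32 = Buffer.alloc(8);
buf32.writeInt32LE(123456, 0);
buf32.writeInt32LE(-50, 4);
console.log(decodeValuesInt32(buf32, 2, { originalType: 'DECIMAL', precision: 6, scale: 4 }));
// [ 12.3456, -0.005 ]
```

Keeping the integer readers unchanged for non-decimal columns and branching only on `originalType` means existing INT32/INT64 columns decode exactly as before.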

Steps to Verify:
----------------

1. Paste the following code into a file with the `.js` extension at the root of the repository (it loads `./dist/parquet`, so the project must be built first):

```js
const parquet = require('./dist/parquet')

async function main () {
  const file = './test/test-files/valid-decimal-columns.parquet'
  await _readParquetFile(file)
}

async function _readParquetFile (filePath) {
  const reader = await parquet.ParquetReader.openFile(filePath)
  // Print the schema; decimal columns should report precision and scale
  console.log(reader.schema)

  // Collect each column's name and original (logical) type from the schema
  const columnListFromFile = reader.schema.fieldList.map(field => ({
    name: field.name,
    type: field.originalType
  }))

  // Read every column and print one record per row
  const columnsToRead = columnListFromFile.map(col => col.name)
  const cursor = reader.getCursor(columnsToRead)
  const previewData = []
  let record = null
  let count = 0

  console.log('-------------------- data --------------------')
  while ((record = await cursor.next())) {
    previewData.push(record)
    console.log(`Row: ${count}`)
    console.log(record)
    count++
  }

  await reader.close()
}

main()
  .catch(error => {
    console.error(error)
    process.exit(1)
  })
```

2. Run the script in a terminal with `node <your file name>.js`.

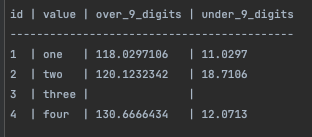

3. Verify that the schema lists 4 columns, including `over_9_digits` with precision 10 and scale 7, and `under_9_digits` with precision 6 and scale 4.

4. The values of those columns should match this table:


Showing 10 changed files with 175 additions and 23 deletions.
