
P2P Protocol

This section describes the p2p protocol used in the current implementation. Its goals are:

- low-latency event propagation

- fast initial fetching of events

- tolerance to fork-events

Although this p2p protocol works in the same manner as existing p2p protocols for exchanging blocks in other blockchains, it is engineered to handle the graph structure of Lachesis.

The protocol combines two mechanisms which complement each other:

- DAG streams: peers request ordered chunks of events from each other by specifying a lexicographic selector. DAG streams are primarily active during the initial fetching of events.

- Event broadcasts: right after an event is connected, the node broadcasts it to the interested peers. Broadcasting events to peers is primarily active once the node has synced up.
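
As a rough illustration of what "interested" could mean for a peer, the Go sketch below follows the epoch rule given in this page's pseudocode: a peer is skipped if it already knows the event ID, and otherwise qualifies when the event belongs to the peer's current or next epoch. The `EventID` and `Peer` types and the single `known` set (merging "peer sent it to me" and "I sent it to the peer") are assumptions made for this example, not the actual Opera types.

```go
package main

import "fmt"

// EventID is a simplified stand-in for a real event ID (hypothetical type).
type EventID struct {
	Epoch uint32
	Hash  string
}

// Peer tracks a peer's reported epoch and the event IDs it is assumed to know.
type Peer struct {
	ProgressEpoch uint32
	known         map[EventID]struct{}
}

// NewPeer creates a peer whose last reported progress is the given epoch.
func NewPeer(epoch uint32) *Peer {
	return &Peer{ProgressEpoch: epoch, known: make(map[EventID]struct{})}
}

// MarkKnown records an ID that the peer sent to us or that we sent to the peer.
func (p *Peer) MarkKnown(id EventID) { p.known[id] = struct{}{} }

// InterestedIn reports whether announcing id to the peer is worthwhile.
func (p *Peer) InterestedIn(id EventID) bool {
	if _, ok := p.known[id]; ok {
		return false // the peer probably knows the event already
	}
	return id.Epoch == p.ProgressEpoch || id.Epoch == p.ProgressEpoch+1
}

func main() {
	peer := NewPeer(7)
	current := EventID{Epoch: 7, Hash: "aa"}
	stale := EventID{Epoch: 5, Hash: "bb"}
	fmt.Println(peer.InterestedIn(current), peer.InterestedIn(stale))
	peer.MarkKnown(current)
	fmt.Println(peer.InterestedIn(current))
}
```

Keeping one set per peer is only one possible design; a real node would likely bound its size so that long-lived connections do not grow memory without limit.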

The P2P protocol is a “transport” layer through which events are delivered and exchanged by peers. This layer doesn’t depend on the consensus algorithm. A node does not need to know which peer delivered which event, as long as the events are valid and signed.

In Lachesis consensus, blocks are not exchanged but calculated independently on each node from events.

In other words, blocks are not consensus messages but rather only a result of ordering events by the consensus algorithm.

Hence the P2P protocol exchanges DAGs of events, but not the blocks.

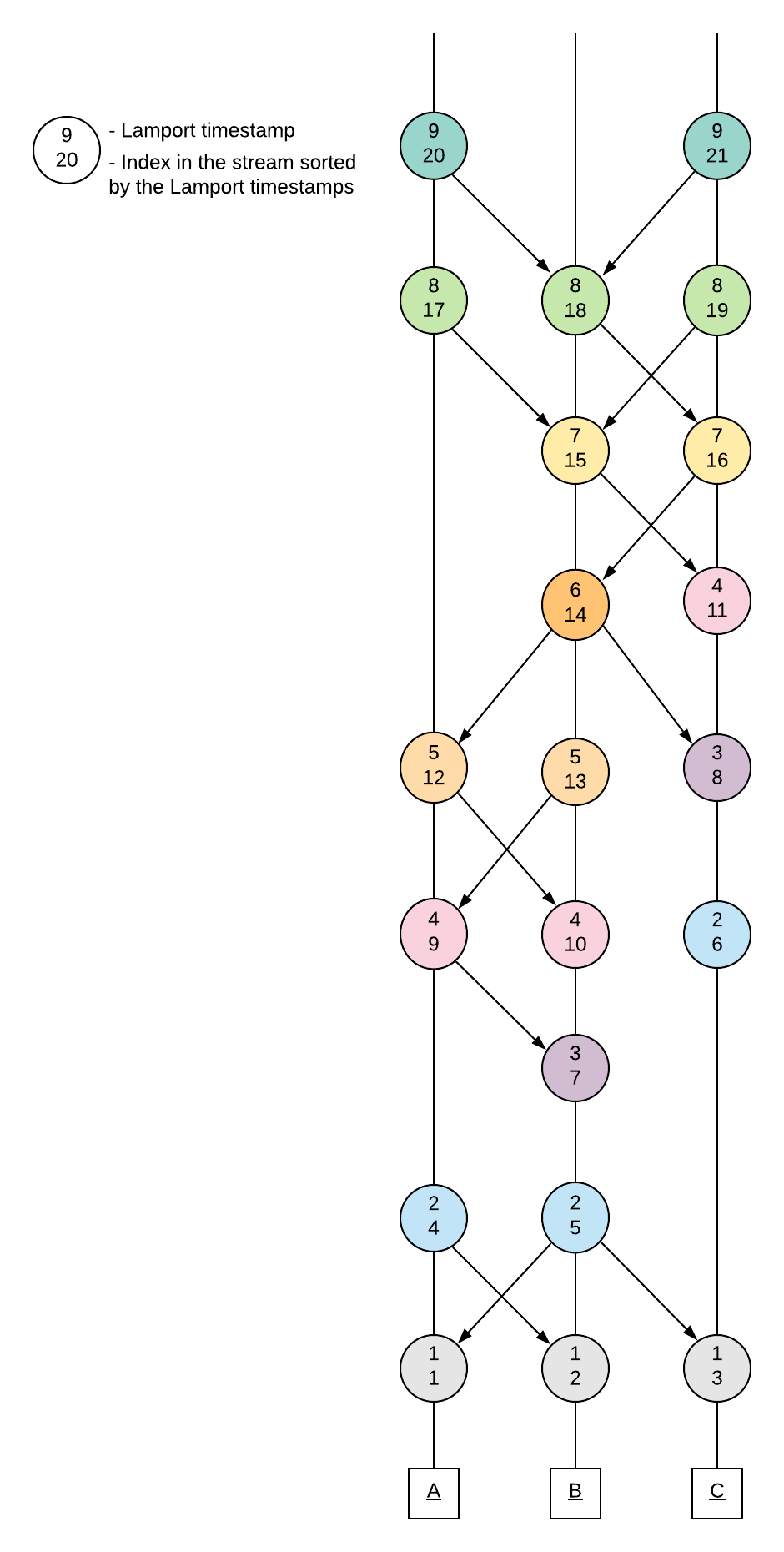

To understand how DAG streams work, it's useful to first understand the Lamport timestamps.

By definition:

If the parents list of an event v is not empty, then its Lamport timestamp, denoted by LS(v), is max{LS(u) | u is a parent of v} + 1. Otherwise, LS(v) is 1.

Events form a partial ordering by the Lamport timestamps as parents are always sorted before their children. That is, LS(u) is smaller than LS(v) if u is a parent of v.

This property is especially useful for fetching events, as it allows a downloader to request events from a peer in an order in which the events may be connected, knowing only event IDs.
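
The definition above can be sketched in a few lines of Go. The `Event` type and the `newEvent` helper are hypothetical and exist only for this illustration; the rule itself (1 for an event without parents, otherwise the largest parent timestamp plus one) is taken directly from the definition.

```go
package main

import "fmt"

// Event is a hypothetical, simplified DAG event used only for this sketch.
type Event struct {
	Name    string
	Parents []*Event
	Lamport uint32
}

// lamport computes LS(v): 1 for an event without parents, otherwise
// one more than the largest Lamport timestamp among its parents.
func lamport(parents []*Event) uint32 {
	var highest uint32
	for _, p := range parents {
		if p.Lamport > highest {
			highest = p.Lamport
		}
	}
	return highest + 1
}

// newEvent builds an event and stamps it with its Lamport timestamp.
func newEvent(name string, parents ...*Event) *Event {
	return &Event{Name: name, Parents: parents, Lamport: lamport(parents)}
}

func main() {
	a := newEvent("a")       // no parents
	b := newEvent("b")       // no parents
	c := newEvent("c", a, b) // child of a and b
	d := newEvent("d", c, b) // child of c and b
	for _, e := range []*Event{a, b, c, d} {
		fmt.Println(e.Name, e.Lamport)
	}
}
```

Because a child is always stamped after its parents, sorting events by this value never places a child before any of its parents.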

The DAG streams protocol is very simple. It is similar to fetching files:

Key-value databases (such as LevelDB) can iterate over data in lexicographic order, which allows requests from peers to be served quickly. Lexicographic order is relevant because an event ID is a concatenation of {epoch, Lamport time, hash}.

    input: event ID, peer

    The function returns false if the peer probably knows about the event,
    so there's no need to broadcast the event ID (or the full event, if propagation is aggressive)
    to this peer.

    if peer sent me this event ID?
        return false
    if I sent event ID to this peer?
        return false
    return (event's epoch == peer.progress.Epoch OR
            event's epoch == peer.progress.Epoch+1)

Sent once at the beginning of each connection (i.e. used identically to the Status message from the eth62 protocol).

Code = 0

    type handshakeData struct {
        ProtocolVersion uint32
        NetworkID       uint64
        Genesis         common.Hash
    }

Signals about the current synchronization status.

Sent:

- Second message for each connection (after Handshake message)

- Periodically (every ~10 seconds) to every peer

- After each epoch sealing to every peer

Code = 1

    type PeerProgress struct {
        Epoch            idx.Epoch
        LastBlockIdx     idx.Block
        LastBlockAtropos hash.Event
        HighestLamport   idx.Lamport
    }

Contains a batch of txpool transactions.

May be either a response to GetEvmTxsMsg or sent during aggressive transaction propagation.

Code = 2

    []types.Transaction

Non-aggressive transaction propagation.

Signals a new batch of txpool transactions, sending only their hashes.

Code = 3

    []common.Hash

Requests a batch of txpool transactions by hashes.

Code = 4

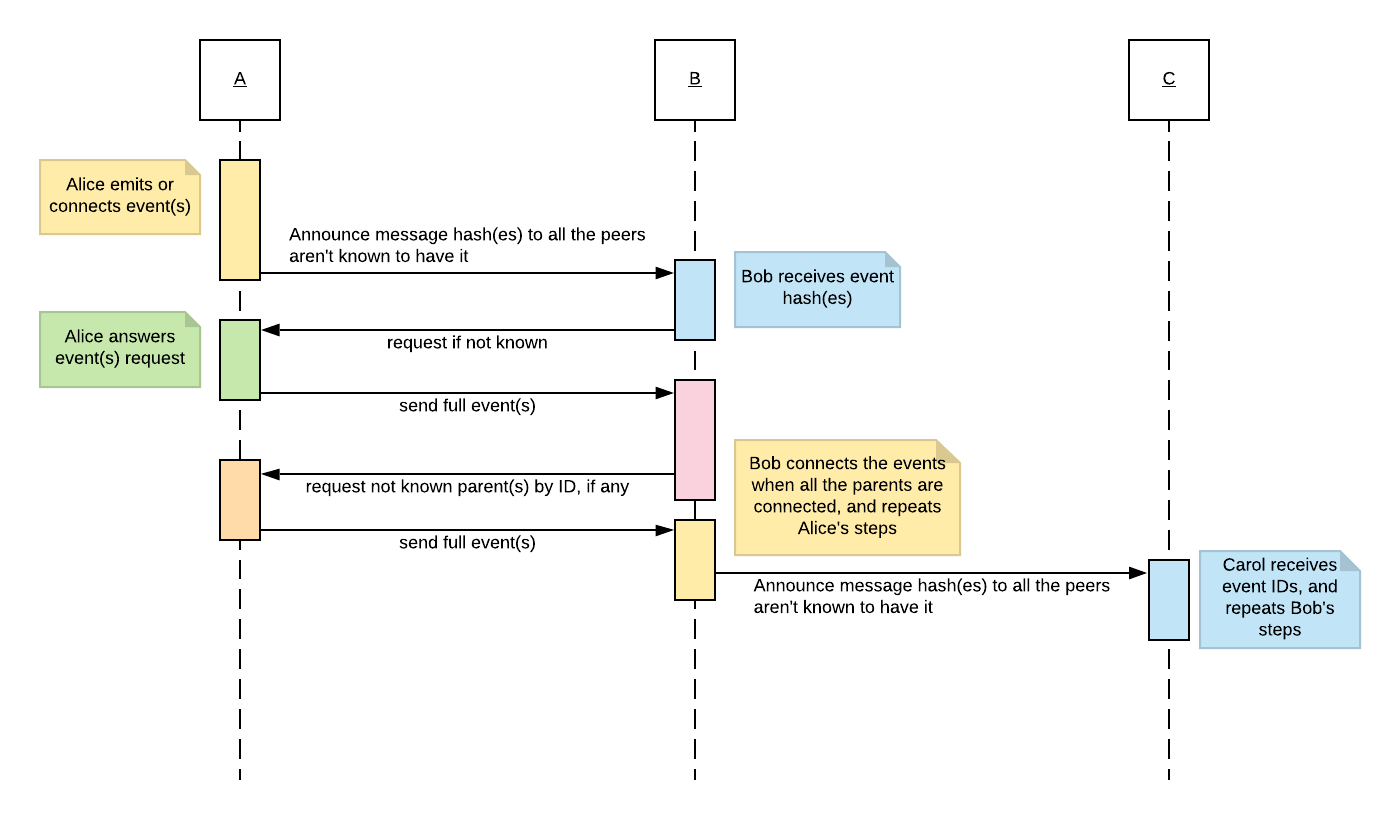

    []common.Hash

Non-aggressive event propagation.

Signals a newly connected batch of events, sending only their IDs.

Code = 5

    []hash.Event

Requests a batch of events by IDs.

Code = 6

    []hash.Event

Contains a batch of events.

May be either a response to GetEventsMsg or sent during aggressive event propagation.

Code = 7

    []inter.EventPayload

Requests a series of chunks (batches) of events or event IDs by a lexicographic selector.

Limit indicates the maximum size of each chunk. The last read event is appended to the chunk even if it exceeds the limit.

The size of a chunk is calculated as {number of events, total size of events}, even if RequestType is RequestIDs.

MaxChunks specifies how many chunks should be sent in response to this request.

May be used both to initiate a new session and to request additional chunks from an existing or newly opened session.

A client shouldn't open more than 2 parallel sessions.

Code = 8

    type dag.Metric struct {
        Num  idx.Event
        Size uint64
    }

    type Session struct {
        ID    uint32
        Start []byte
        Stop  []byte
    }

    type RequestType uint8

    type dagstream.Request struct {
        Session   Session
        Limit     dag.Metric
        Type      RequestType
        MaxChunks uint32
    }

Sends events or event IDs as a response to the RequestEventsStream.

The Done flag indicates that the session is finished (i.e. all known events were read for the session's lexicographic selector).
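
The interplay of Limit, MaxChunks and Done can be sketched as follows. This is a simplified illustration under stated assumptions, not the actual dagstream code: `Metric`, `Event` and `Response` are reduced stand-ins for the types on this page, and `nextChunk` and `serve` are hypothetical helpers. The one rule taken from the text is that the event that crosses the limit is still appended to the chunk.

```go
package main

import "fmt"

// Metric mirrors the {number of events, total size} pair used as a chunk limit.
type Metric struct {
	Num  uint32
	Size uint64
}

// Event is a simplified event: only an ID and a serialized size.
type Event struct {
	ID   string
	Size uint64
}

// Response is a reduced form of a stream response.
type Response struct {
	Done   bool
	Events []Event
}

// nextChunk reads events in order until the limit is reached. The event
// that reaches or crosses the limit is still part of the chunk.
func nextChunk(events []Event, limit Metric) (chunk, rest []Event) {
	var used Metric
	for i, e := range events {
		chunk = append(chunk, e)
		used.Num++
		used.Size += e.Size
		if used.Num >= limit.Num || used.Size >= limit.Size {
			return chunk, events[i+1:]
		}
	}
	return chunk, nil
}

// serve answers one request with at most maxChunks responses and marks the
// last one Done when no events remain for the selector.
func serve(events []Event, limit Metric, maxChunks uint32) []Response {
	var out []Response
	rest := events
	for i := uint32(0); i < maxChunks; i++ {
		var chunk []Event
		chunk, rest = nextChunk(rest, limit)
		done := len(rest) == 0
		out = append(out, Response{Done: done, Events: chunk})
		if done {
			break
		}
	}
	return out
}

func main() {
	events := []Event{{"e1", 60}, {"e2", 60}, {"e3", 60}, {"e4", 10}, {"e5", 10}}
	for _, r := range serve(events, Metric{Num: 10, Size: 100}, 2) {
		fmt.Println(len(r.Events), r.Done)
	}
}
```

If the responses run out before Done is set, the client would send another request for the same session to receive further chunks.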

Code = 9

    type dagstream.Response struct {
        SessionID uint32
        Done      bool
        IDs       []hash.Event
        Events    []inter.EventPayload
    }

Txpool transaction exchange consists of the messages EvmTxsMsg, NewEvmTxHashesMsg, and GetEvmTxsMsg.

New txpool transactions are broadcast with either EvmTxsMsg or NewEvmTxHashesMsg. Unknown transaction hashes are requested with GetEvmTxsMsg.

By default, every peer periodically sends a random subset of its txpool transaction hashes to one random peer, to ensure that every peer eventually receives all the transactions even if it missed the broadcast.

A node sends all its tx hashes (chunk by chunk) to every newly connected peer.

RLP is used for message serialization.

RLPx is used as a transport protocol (the same protocol as in Ethereum).

Opera supports the Ethereum discovery protocols, which are used to find connections in the network.