+

+[](https://paperswithcode.com/sota/2d-human-pose-estimation-on-coco-wholebody-1?p=rtmpose-real-time-multi-person-pose)

+

+

+

+

+

+

+- Discord Group:

+ - 🙌 https://discord.gg/raweFPmdzG 🙌

+

+## ⚡ Pipeline 性能 [🔝](#-table-of-contents)

+

+**说明**

+

+- Pipeline 速度测试时开启了隔帧检测策略,默认检测间隔为 5 帧。

+- 环境配置:

+ - torch >= 1.7.1

+ - onnxruntime 1.12.1

+ - TensorRT 8.4.3.1

+ - cuDNN 8.3.2

+ - CUDA 11.3

+

+| Detection Config | Pose Config | Input Size

(Det/Pose) | Model AP

(COCO) | Pipeline AP

(COCO) | Params (M)

(Det/Pose) | Flops (G)

(Det/Pose) | ORT-Latency(ms)

(i7-11700) | TRT-FP16-Latency(ms)

(GTX 1660Ti) | Download |

+| :------------------------------------------------------------------ | :---------------------------------------------------------------------------- | :---------------------------: | :---------------------: | :------------------------: | :---------------------------: | :--------------------------: | :--------------------------------: | :---------------------------------------: | :----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------: |

+| [RTMDet-nano](./rtmdet/person/rtmdet_nano_320-8xb32_coco-person.py) | [RTMPose-t](./rtmpose/body_2d_keypoint/rtmpose-t_8xb256-420e_coco-256x192.py) | 320x320

256x192 | 40.3

67.1 | 64.4 | 0.99

3.34 | 0.31

0.36 | 12.403 | 2.467 | [det](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmdet_nano_8xb32-100e_coco-obj365-person-05d8511e.pth)

[pose](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-tiny_simcc-aic-coco_pt-aic-coco_420e-256x192-cfc8f33d_20230126.pth) |

+| [RTMDet-nano](./rtmdet/person/rtmdet_nano_320-8xb32_coco-person.py) | [RTMPose-s](./rtmpose/body_2d_keypoint/rtmpose-s_8xb256-420e_coco-256x192.py) | 320x320

256x192 | 40.3

71.1 | 68.5 | 0.99

5.47 | 0.31

0.68 | 16.658 | 2.730 | [det](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmdet_nano_8xb32-100e_coco-obj365-person-05d8511e.pth)

[pose](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-s_simcc-aic-coco_pt-aic-coco_420e-256x192-fcb2599b_20230126.pth) |

+| [RTMDet-nano](./rtmdet/person/rtmdet_nano_320-8xb32_coco-person.py) | [RTMPose-m](./rtmpose/body_2d_keypoint/rtmpose-m_8xb256-420e_coco-256x192.py) | 320x320

256x192 | 40.3

75.3 | 73.2 | 0.99

13.59 | 0.31

1.93 | 26.613 | 4.312 | [det](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmdet_nano_8xb32-100e_coco-obj365-person-05d8511e.pth)

[pose](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-m_simcc-aic-coco_pt-aic-coco_420e-256x192-63eb25f7_20230126.pth) |

+| [RTMDet-nano](./rtmdet/person/rtmdet_nano_320-8xb32_coco-person.py) | [RTMPose-l](./rtmpose/body_2d_keypoint/rtmpose-l_8xb256-420e_coco-256x192.py) | 320x320

256x192 | 40.3

76.3 | 74.2 | 0.99

27.66 | 0.31

4.16 | 36.311 | 4.644 | [det](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmdet_nano_8xb32-100e_coco-obj365-person-05d8511e.pth)

[pose](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-l_simcc-aic-coco_pt-aic-coco_420e-256x192-f016ffe0_20230126.pth) |

+| [RTMDet-m](./rtmdet/person/rtmdet_m_640-8xb32_coco-person.py) | [RTMPose-m](./rtmpose/body_2d_keypoint/rtmpose-m_8xb256-420e_coco-256x192.py) | 640x640

256x192 | 62.5

75.3 | 75.7 | 24.66

13.59 | 38.95

1.93 | - | 6.923 | [det](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmdet_m_8xb32-100e_coco-obj365-person-235e8209.pth)

[pose](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-m_simcc-aic-coco_pt-aic-coco_420e-256x192-63eb25f7_20230126.pth) |

+| [RTMDet-m](./rtmdet/person/rtmdet_m_640-8xb32_coco-person.py) | [RTMPose-l](./rtmpose/body_2d_keypoint/rtmpose-l_8xb256-420e_coco-256x192.py) | 640x640

256x192 | 62.5

76.3 | 76.6 | 24.66

27.66 | 38.95

4.16 | - | 7.204 | [det](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmdet_m_8xb32-100e_coco-obj365-person-235e8209.pth)

[pose](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-l_simcc-aic-coco_pt-aic-coco_420e-256x192-f016ffe0_20230126.pth) |

+

+## 📊 模型库 [🔝](#-table-of-contents)

+

+**说明**

+

+- 此处提供的模型采用了多数据集联合训练以提高性能,模型指标不适用于学术比较。

+- 表格中为开启了 Flip Test 的测试结果。

+- RTMPose 在更多公开数据集上的性能指标可以前往 [Model Zoo](https://mmpose.readthedocs.io/en/1.x/model_zoo_papers/algorithms.html) 查看。

+- RTMPose 在更多硬件平台上的推理速度可以前往 [Benchmark](./benchmark/README_CN.md) 查看。

+- 如果你有希望我们支持的数据集,欢迎[联系我们](https://uua478.fanqier.cn/f/xxmynrki)/[Google Questionnaire](https://docs.google.com/forms/d/e/1FAIpQLSfzwWr3eNlDzhU98qzk2Eph44Zio6hi5r0iSwfO9wSARkHdWg/viewform?usp=sf_link)!

+

+### 人体 2d 关键点 (17 Keypoints)

+

+| Config | Input Size | AP

(COCO) | Params(M) | FLOPS(G) | ORT-Latency(ms)

(i7-11700) | TRT-FP16-Latency(ms)

(GTX 1660Ti) | ncnn-FP16-Latency(ms)

(Snapdragon 865) | Logs | Download |

+| :---------: | :--------: | :---------------: | :-------: | :------: | :--------------------------------: | :---------------------------------------: | :--------------------------------------------: | :--------: | :------------: |

+| [RTMPose-t](./rtmpose/body_2d_keypoint/rtmpose-t_8xb256-420e_coco-256x192.py) | 256x192 | 68.5 | 3.34 | 0.36 | 3.20 | 1.06 | 9.02 | [Log](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-tiny_simcc-aic-coco_pt-aic-coco_420e-256x192-cfc8f33d_20230126.json) | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-tiny_simcc-aic-coco_pt-aic-coco_420e-256x192-cfc8f33d_20230126.pth) |

+| [RTMPose-s](./rtmpose/body_2d_keypoint/rtmpose-s_8xb256-420e_coco-256x192.py) | 256x192 | 72.2 | 5.47 | 0.68 | 4.48 | 1.39 | 13.89 | [Log](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-s_simcc-aic-coco_pt-aic-coco_420e-256x192-fcb2599b_20230126.json) | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-s_simcc-aic-coco_pt-aic-coco_420e-256x192-fcb2599b_20230126.pth) |

+| [RTMPose-m](./rtmpose/body_2d_keypoint/rtmpose-m_8xb256-420e_coco-256x192.py) | 256x192 | 75.8 | 13.59 | 1.93 | 11.06 | 2.29 | 26.44 | [Log](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-m_simcc-aic-coco_pt-aic-coco_420e-256x192-63eb25f7_20230126.json) | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-m_simcc-aic-coco_pt-aic-coco_420e-256x192-63eb25f7_20230126.pth) |

+| [RTMPose-l](./rtmpose/body_2d_keypoint/rtmpose-l_8xb256-420e_coco-256x192.py) | 256x192 | 76.5 | 27.66 | 4.16 | 18.85 | 3.46 | 45.37 | [Log](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-l_simcc-aic-coco_pt-aic-coco_420e-256x192-f016ffe0_20230126.json) | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-l_simcc-aic-coco_pt-aic-coco_420e-256x192-f016ffe0_20230126.pth) |

+| [RTMPose-m](./rtmpose/body_2d_keypoint/rtmpose-m_8xb256-420e_coco-384x288.py) | 384x288 | 77.0 | 13.72 | 4.33 | 24.78 | 3.66 | - | [Log](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-m_simcc-aic-coco_pt-aic-coco_420e-384x288-a62a0b32_20230228.json) | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-m_simcc-aic-coco_pt-aic-coco_420e-384x288-a62a0b32_20230228.pth) |

+| [RTMPose-l](./rtmpose/body_2d_keypoint/rtmpose-l_8xb256-420e_coco-384x288.py) | 384x288 | 77.3 | 27.79 | 9.35 | - | 6.05 | - | [Log](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-l_simcc-aic-coco_pt-aic-coco_420e-384x288-97d6cb0f_20230228.json) | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-l_simcc-aic-coco_pt-aic-coco_420e-384x288-97d6cb0f_20230228.pth) |

+

+#### 模型剪枝

+

+**说明**

+

+- 模型剪枝由 [MMRazor](https://github.com/open-mmlab/mmrazor) 提供

+

+| Config | Input Size | AP

(COCO) | Params(M) | FLOPS(G) | ORT-Latency(ms)

(i7-11700) | TRT-FP16-Latency(ms)

(GTX 1660Ti) | ncnn-FP16-Latency(ms)

(Snapdragon 865) | Logs | Download |

+| :---------: | :--------: | :---------------: | :-------: | :------: | :--------------------------------: | :---------------------------------------: | :--------------------------------------------: | :--------: | :------------: |

+| RTMPose-s-aic-coco-pruned | 256x192 | 69.4 | 3.43 | 0.35 | - | - | - | [log](https://download.openmmlab.com/mmrazor/v1/pruning/group_fisher/rtmpose-s/group_fisher_finetune_rtmpose-s_8xb256-420e_aic-coco-256x192.json) | [model](https://download.openmmlab.com/mmrazor/v1/pruning/group_fisher/rtmpose-s/group_fisher_finetune_rtmpose-s_8xb256-420e_aic-coco-256x192.pth) |

+

+更多信息,请参考 [GroupFisher Pruning for RTMPose](./rtmpose/pruning/README.md).

+

+### 人体全身 2d 关键点 (133 Keypoints)

+

+| Config | Input Size | Whole AP | Whole AR | FLOPS(G) | ORT-Latency(ms)

(i7-11700) | TRT-FP16-Latency(ms)

(GTX 1660Ti) | Logs | Download |

+| :----------------------------- | :--------: | :------: | :------: | :------: | :--------------------------------: | :---------------------------------------: | :--------------------------: | :-------------------------------: |

+| [RTMPose-m](./rtmpose/wholebody_2d_keypoint/rtmpose-m_8xb64-270e_coco-wholebody-256x192.py) | 256x192 | 60.4 | 66.7 | 2.22 | 13.50 | 4.00 | [Log](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-m_simcc-coco-wholebody_pt-aic-coco_270e-256x192-cd5e845c_20230123.json) | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-m_simcc-coco-wholebody_pt-aic-coco_270e-256x192-cd5e845c_20230123.pth) |

+| [RTMPose-l](./rtmpose/wholebody_2d_keypoint/rtmpose-l_8xb64-270e_coco-wholebody-256x192.py) | 256x192 | 63.2 | 69.4 | 4.52 | 23.41 | 5.67 | [Log](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-l_simcc-coco-wholebody_pt-aic-coco_270e-256x192-6f206314_20230124.json) | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-l_simcc-coco-wholebody_pt-aic-coco_270e-256x192-6f206314_20230124.pth) |

+| [RTMPose-l](./rtmpose/wholebody_2d_keypoint/rtmpose-l_8xb32-270e_coco-wholebody-384x288.py) | 384x288 | 67.0 | 72.3 | 10.07 | 44.58 | 7.68 | [Log](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-l_simcc-coco-wholebody_pt-aic-coco_270e-384x288-eaeb96c8_20230125.json) | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-l_simcc-coco-wholebody_pt-aic-coco_270e-384x288-eaeb96c8_20230125.pth) |

+

+### 动物 2d 关键点 (17 Keypoints)

+

+| Config | Input Size | AP

(AP10K) | FLOPS(G) | ORT-Latency(ms)

(i7-11700) | TRT-FP16-Latency(ms)

(GTX 1660Ti) | Logs | Download |

+| :---------------------------: | :--------: | :----------------: | :------: | :--------------------------------: | :---------------------------------------: | :--------------------------: | :------------------------------: |

+| [RTMPose-m](./rtmpose/animal_2d_keypoint/rtmpose-m_8xb64-210e_ap10k-256x256.py) | 256x256 | 72.2 | 2.57 | 14.157 | 2.404 | [Log](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-m_simcc-ap10k_pt-aic-coco_210e-256x256-7a041aa1_20230206.json) | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmpose-m_simcc-ap10k_pt-aic-coco_210e-256x256-7a041aa1_20230206.pth) |

+

+### 脸部 2d 关键点

+

+| Config | Input Size | NME

(COCO-WholeBody-Face) | FLOPS(G) | ORT-Latency(ms)

(i7-11700) | TRT-FP16-Latency(ms)

(GTX 1660Ti) | Logs | Download |

+| :--------------------------------------------------: | :--------: | :-------------------------------: | :------: | :--------------------------------: | :---------------------------------------: | :---------: | :---------: |

+| [RTMPose-m](./rtmpose/face_2d_keypoint/wflw/rtmpose-m_8xb64-60e_coco-wholebody-face-256x256.py) | 256x256 | 4.57 | - | - | - | Coming soon | Coming soon |

+

+### 手部 2d 关键点

+

+| Config | Input Size | PCK

(COCO-WholeBody-Hand) | FLOPS(G) | ORT-Latency(ms)

(i7-11700) | TRT-FP16-Latency(ms)

(GTX 1660Ti) | Logs | Download |

+| :--------------------------------------------------: | :--------: | :-------------------------------: | :------: | :--------------------------------: | :---------------------------------------: | :---------: | :---------: |

+| [RTMPose-m](./rtmpose/hand_2d_keypoint/coco_wholebody_hand/rtmpose-m_8xb32-210e_coco-wholebody-hand-256x256.py) | 256x256 | 81.5 | - | - | - | Coming soon | Coming soon |

+

+### 预训练模型

+

+我们提供了 UDP 预训练的 CSPNeXt 模型参数,训练配置请参考 [pretrain_cspnext_udp folder](./rtmpose/pretrain_cspnext_udp/)。

+

+| Model | Input Size | Params(M) | Flops(G) | AP

(GT) | AR

(GT) | Download |

+| :-------: | :--------: | :-------: | :------: | :-------------: | :-------------: | :-----------------------------------------------------------------------------------------------------------------------------: |

+| CSPNeXt-t | 256x192 | 6.03 | 1.43 | 65.5 | 68.9 | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/cspnext-tiny_udp-aic-coco_210e-256x192-cbed682d_20230130.pth) |

+| CSPNeXt-s | 256x192 | 8.58 | 1.78 | 70.0 | 73.3 | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/cspnext-s_udp-aic-coco_210e-256x192-92f5a029_20230130.pth) |

+| CSPNeXt-m | 256x192 | 13.05 | 3.06 | 74.8 | 77.7 | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/cspnext-m_udp-aic-coco_210e-256x192-f2f7d6f6_20230130.pth) |

+| CSPNeXt-l | 256x192 | 32.44 | 5.33 | 77.2 | 79.9 | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmpose/cspnext-l_udp-aic-coco_210e-256x192-273b7631_20230130.pth) |

+

+我们提供了 ImageNet 分类训练的 CSPNeXt 模型参数,更多细节请参考 [RTMDet](https://github.com/open-mmlab/mmdetection/blob/dev-3.x/configs/rtmdet/README.md#classification)。

+

+| Model | Input Size | Params(M) | Flops(G) | Top-1 (%) | Top-5 (%) | Download |

+| :----------: | :--------: | :-------: | :------: | :-------: | :-------: | :---------------------------------------------------------------------------------------------------------------------------------: |

+| CSPNeXt-tiny | 224x224 | 2.73 | 0.34 | 69.44 | 89.45 | [Model](https://download.openmmlab.com/mmdetection/v3.0/rtmdet/cspnext_rsb_pretrain/cspnext-tiny_imagenet_600e-3a2dd350.pth) |

+| CSPNeXt-s | 224x224 | 4.89 | 0.66 | 74.41 | 92.23 | [Model](https://download.openmmlab.com/mmdetection/v3.0/rtmdet/cspnext_rsb_pretrain/cspnext-s_imagenet_600e-ea671761.pth) |

+| CSPNeXt-m | 224x224 | 13.05 | 1.93 | 79.27 | 94.79 | [Model](https://download.openmmlab.com/mmdetection/v3.0/rtmdet/cspnext_rsb_pretrain/cspnext-m_8xb256-rsb-a1-600e_in1k-ecb3bbd9.pth) |

+| CSPNeXt-l | 224x224 | 27.16 | 4.19 | 81.30 | 95.62 | [Model](https://download.openmmlab.com/mmdetection/v3.0/rtmdet/cspnext_rsb_pretrain/cspnext-l_8xb256-rsb-a1-600e_in1k-6a760974.pth) |

+

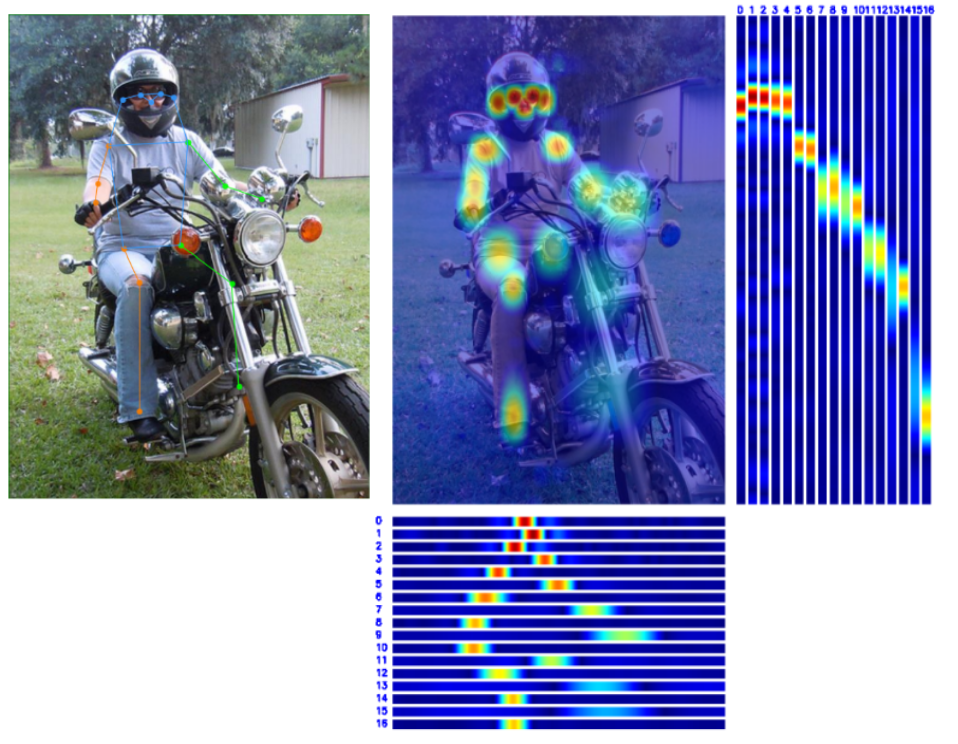

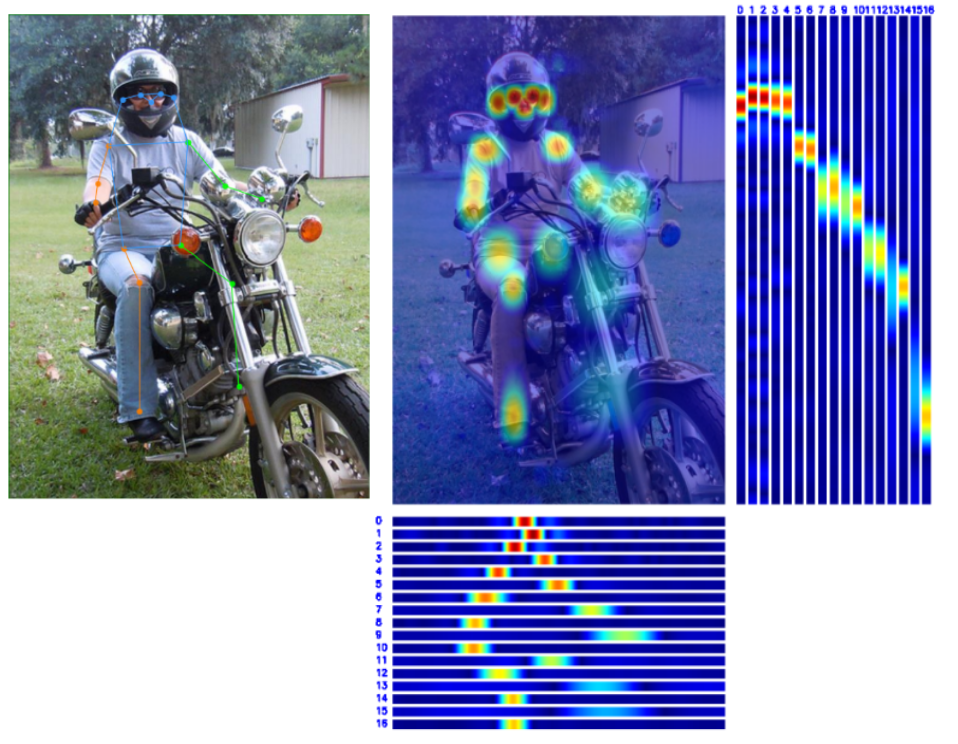

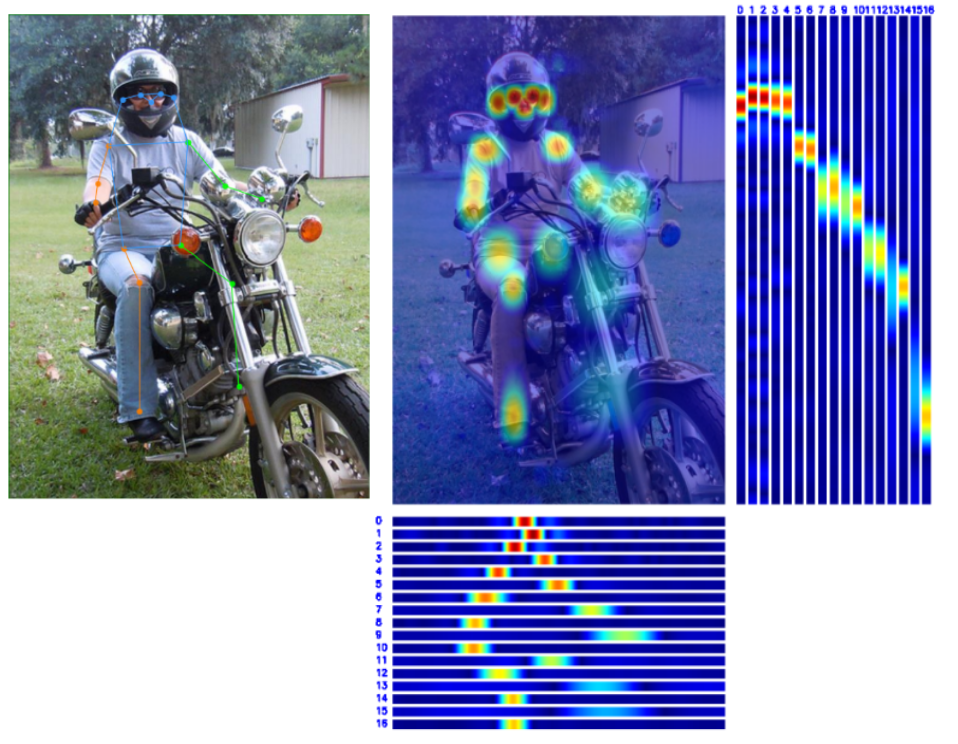

+## 👀 可视化 [🔝](#-table-of-contents)

+

+

+

+

+

(COCO) | Params(M) | FLOPS(G) |

+| :-------------------------------------------------------------------------------: | :--------: | :---------------: | :-------: | :------: |

+| [RTMPose-t](../rtmpose/body_2d_keypoint/rtmpose-tiny_8xb256-420e_coco-256x192.py) | 256x192 | 68.5 | 3.34 | 0.36 |

+| [RTMPose-s](../rtmpose/body_2d_keypoint/rtmpose-s_8xb256-420e_coco-256x192.py) | 256x192 | 72.2 | 5.47 | 0.68 |

+| [RTMPose-m](../rtmpose/body_2d_keypoint/rtmpose-m_8xb256-420e_coco-256x192.py) | 256x192 | 75.8 | 13.59 | 1.93 |

+| [RTMPose-l](../rtmpose/body_2d_keypoint/rtmpose-l_8xb256-420e_coco-256x192.py) | 256x192 | 76.5 | 27.66 | 4.16 |

+| [RTMPose-m](../rtmpose/body_2d_keypoint/rtmpose-m_8xb256-420e_coco-384x288.py) | 384x288 | 77.0 | 13.72 | 4.33 |

+| [RTMPose-l](../rtmpose/body_2d_keypoint/rtmpose-l_8xb256-420e_coco-384x288.py) | 384x288 | 77.3 | 27.79 | 9.35 |

+

+### Speed Benchmark

+

+- Numbers displayed in the table are inference latencies in millisecond(ms).

+

+| Config | Input Size | ORT

(i7-11700) | TRT-FP16

(GTX 1660Ti) | TRT-FP16

(RTX 3090) | ncnn-FP16

(Snapdragon 865) | TRT-FP16

(Jetson AGX Orin) | TRT-FP16

(Jetson Orin NX) |

+| :---------: | :--------: | :--------------------: | :---------------------------: | :-------------------------: | :--------------------------------: | :--------------------------------: | :-------------------------------: |

+| [RTMPose-t](../rtmpose/body_2d_keypoint/rtmpose-tiny_8xb256-420e_coco-256x192.py) | 256x192 | 3.20 | 1.06 | 0.98 | 9.02 | 1.63 | 1.97 |

+| [RTMPose-s](../rtmpose/body_2d_keypoint/rtmpose-s_8xb256-420e_coco-256x192.py) | 256x192 | 4.48 | 1.39 | 1.12 | 13.89 | 1.85 | 2.18 |

+| [RTMPose-m](../rtmpose/body_2d_keypoint/rtmpose-m_8xb256-420e_coco-256x192.py) | 256x192 | 11.06 | 2.29 | 1.18 | 26.44 | 2.72 | 3.35 |

+| [RTMPose-l](../rtmpose/body_2d_keypoint/rtmpose-l_8xb256-420e_coco-256x192.py) | 256x192 | 18.85 | 3.46 | 1.37 | 45.37 | 3.67 | 4.78 |

+| [RTMPose-m](../rtmpose/body_2d_keypoint/rtmpose-m_8xb256-420e_coco-384x288.py) | 384x288 | 24.78 | 3.66 | 1.20 | 26.44 | 3.45 | 5.08 |

+| [RTMPose-l](../rtmpose/body_2d_keypoint/rtmpose-l_8xb256-420e_coco-384x288.py) | 384x288 | - | 6.05 | 1.74 | - | 4.93 | 7.23 |

+

+## WholeBody 2d (133 Keypoints)

+

+### Model Info

+

+| Config | Input Size | Whole AP | Whole AR | FLOPS(G) |

+| :------------------------------------------------------------------------------------------- | :--------: | :------: | :------: | :------: |

+| [RTMPose-m](../rtmpose/wholebody_2d_keypoint/rtmpose-m_8xb64-270e_coco-wholebody-256x192.py) | 256x192 | 60.4 | 66.7 | 2.22 |

+| [RTMPose-l](../rtmpose/wholebody_2d_keypoint/rtmpose-l_8xb64-270e_coco-wholebody-256x192.py) | 256x192 | 63.2 | 69.4 | 4.52 |

+| [RTMPose-l](../rtmpose/wholebody_2d_keypoint/rtmpose-l_8xb32-270e_coco-wholebody-384x288.py) | 384x288 | 67.0 | 72.3 | 10.07 |

+

+### Speed Benchmark

+

+- Numbers displayed in the table are inference latencies in millisecond(ms).

+- Data from different community users are separated by `|`.

+

+| Config | Input Size | ORT

(i7-11700) | TRT-FP16

(GTX 1660Ti) | TRT-FP16

(RTX 3090) | TRT-FP16

(Jetson AGX Orin) | TRT-FP16

(Jetson Orin NX) |

+| :-------------------------------------------- | :--------: | :--------------------: | :---------------------------: | :-------------------------: | :--------------------------------: | :-------------------------------: |

+| [RTMPose-m](../rtmpose/wholebody_2d_keypoint/rtmpose-m_8xb64-270e_coco-wholebody-256x192.py) | 256x192 | 13.50 | 4.00 | 1.17 \| 1.84 | 2.79 | 3.51 |

+| [RTMPose-l](../rtmpose/wholebody_2d_keypoint/rtmpose-l_8xb64-270e_coco-wholebody-256x192.py) | 256x192 | 23.41 | 5.67 | 1.44 \| 2.61 | 3.80 | 4.95 |

+| [RTMPose-l](../rtmpose/wholebody_2d_keypoint/rtmpose-l_8xb32-270e_coco-wholebody-384x288.py) | 384x288 | 44.58 | 7.68 | 1.75 \| 4.24 | 5.08 | 7.20 |

+

+## How To Test Speed

+

+If you need to test the inference speed of the model under the deployment framework, MMDeploy provides a convenient `tools/profiler.py` script.

+

+The user needs to prepare a folder for the test images `./test_images`, the profiler will randomly read images from this directory for the model speed test.

+

+```shell

+python tools/profiler.py \

+ configs/mmpose/pose-detection_simcc_onnxruntime_dynamic.py \

+ {RTMPOSE_PROJECT}/rtmpose/body_2d_keypoint/rtmpose-m_8xb256-420e_coco-256x192.py \

+ ../test_images \

+ --model {WORK_DIR}/end2end.onnx \

+ --shape 256x192 \

+ --device cpu \

+ --warmup 50 \

+ --num-iter 200

+```

+

+The result is as follows:

+

+```shell

+01/30 15:06:35 - mmengine - INFO - [onnxruntime]-70 times per count: 8.73 ms, 114.50 FPS

+01/30 15:06:36 - mmengine - INFO - [onnxruntime]-90 times per count: 9.05 ms, 110.48 FPS

+01/30 15:06:37 - mmengine - INFO - [onnxruntime]-110 times per count: 9.87 ms, 101.32 FPS

+01/30 15:06:37 - mmengine - INFO - [onnxruntime]-130 times per count: 9.99 ms, 100.10 FPS

+01/30 15:06:38 - mmengine - INFO - [onnxruntime]-150 times per count: 10.39 ms, 96.29 FPS

+01/30 15:06:39 - mmengine - INFO - [onnxruntime]-170 times per count: 10.77 ms, 92.86 FPS

+01/30 15:06:40 - mmengine - INFO - [onnxruntime]-190 times per count: 10.98 ms, 91.05 FPS

+01/30 15:06:40 - mmengine - INFO - [onnxruntime]-210 times per count: 11.19 ms, 89.33 FPS

+01/30 15:06:41 - mmengine - INFO - [onnxruntime]-230 times per count: 11.16 ms, 89.58 FPS

+01/30 15:06:42 - mmengine - INFO - [onnxruntime]-250 times per count: 11.06 ms, 90.41 FPS

+----- Settings:

++------------+---------+

+| batch size | 1 |

+| shape | 256x192 |

+| iterations | 200 |

+| warmup | 50 |

++------------+---------+

+----- Results:

++--------+------------+---------+

+| Stats | Latency/ms | FPS |

++--------+------------+---------+

+| Mean | 11.060 | 90.412 |

+| Median | 11.852 | 84.375 |

+| Min | 7.812 | 128.007 |

+| Max | 13.690 | 73.044 |

++--------+------------+---------+

+```

+

+If you want to learn more details of profiler, you can refer to the [Profiler Docs](https://mmdeploy.readthedocs.io/en/1.x/02-how-to-run/useful_tools.html#profiler).

diff --git a/projects/rtmpose/benchmark/README_CN.md b/projects/rtmpose/benchmark/README_CN.md

new file mode 100644

index 0000000000..08578f44f5

--- /dev/null

+++ b/projects/rtmpose/benchmark/README_CN.md

@@ -0,0 +1,116 @@

+# RTMPose Benchmarks

+

+简体中文 | [English](./README.md)

+

+欢迎社区用户在不同硬件设备上进行推理速度测试,贡献到本项目目录下。

+

+当前已测试:

+

+- CPU

+ - Intel i7-11700

+- GPU

+ - NVIDIA GeForce 1660 Ti

+ - NVIDIA GeForce RTX 3090

+- Nvidia Jetson

+ - AGX Orin

+ - Orin NX

+- ARM

+ - Snapdragon 865

+

+### 人体 2d 关键点 (17 Keypoints)

+

+### Model Info

+

+| Config | Input Size | AP

(COCO) | Params(M) | FLOPS(G) |

+| :-------------------------------------------------------------------------------: | :--------: | :---------------: | :-------: | :------: |

+| [RTMPose-t](../rtmpose/body_2d_keypoint/rtmpose-tiny_8xb256-420e_coco-256x192.py) | 256x192 | 68.5 | 3.34 | 0.36 |

+| [RTMPose-s](../rtmpose/body_2d_keypoint/rtmpose-s_8xb256-420e_coco-256x192.py) | 256x192 | 72.2 | 5.47 | 0.68 |

+| [RTMPose-m](../rtmpose/body_2d_keypoint/rtmpose-m_8xb256-420e_coco-256x192.py) | 256x192 | 75.8 | 13.59 | 1.93 |

+| [RTMPose-l](../rtmpose/body_2d_keypoint/rtmpose-l_8xb256-420e_coco-256x192.py) | 256x192 | 76.5 | 27.66 | 4.16 |

+| [RTMPose-m](../rtmpose/body_2d_keypoint/rtmpose-m_8xb256-420e_coco-384x288.py) | 384x288 | 77.0 | 13.72 | 4.33 |

+| [RTMPose-l](../rtmpose/body_2d_keypoint/rtmpose-l_8xb256-420e_coco-384x288.py) | 384x288 | 77.3 | 27.79 | 9.35 |

+

+### Speed Benchmark

+

+图中所示为模型推理时间,单位毫秒。

+

+| Config | Input Size | ORT

(i7-11700) | TRT-FP16

(GTX 1660Ti) | TRT-FP16

(RTX 3090) | ncnn-FP16

(Snapdragon 865) | TRT-FP16

(Jetson AGX Orin) | TRT-FP16

(Jetson Orin NX) |

+| :---------: | :--------: | :--------------------: | :---------------------------: | :-------------------------: | :--------------------------------: | :--------------------------------: | :-------------------------------: |

+| [RTMPose-t](../rtmpose/body_2d_keypoint/rtmpose-tiny_8xb256-420e_coco-256x192.py) | 256x192 | 3.20 | 1.06 | 0.98 | 9.02 | 1.63 | 1.97 |

+| [RTMPose-s](../rtmpose/body_2d_keypoint/rtmpose-s_8xb256-420e_coco-256x192.py) | 256x192 | 4.48 | 1.39 | 1.12 | 13.89 | 1.85 | 2.18 |

+| [RTMPose-m](../rtmpose/body_2d_keypoint/rtmpose-m_8xb256-420e_coco-256x192.py) | 256x192 | 11.06 | 2.29 | 1.18 | 26.44 | 2.72 | 3.35 |

+| [RTMPose-l](../rtmpose/body_2d_keypoint/rtmpose-l_8xb256-420e_coco-256x192.py) | 256x192 | 18.85 | 3.46 | 1.37 | 45.37 | 3.67 | 4.78 |

+| [RTMPose-m](../rtmpose/body_2d_keypoint/rtmpose-m_8xb256-420e_coco-384x288.py) | 384x288 | 24.78 | 3.66 | 1.20 | 26.44 | 3.45 | 5.08 |

+| [RTMPose-l](../rtmpose/body_2d_keypoint/rtmpose-l_8xb256-420e_coco-384x288.py) | 384x288 | - | 6.05 | 1.74 | - | 4.93 | 7.23 |

+

+### 人体全身 2d 关键点 (133 Keypoints)

+

+### Model Info

+

+| Config | Input Size | Whole AP | Whole AR | FLOPS(G) |

+| :------------------------------------------------------------------------------------------- | :--------: | :------: | :------: | :------: |

+| [RTMPose-m](../rtmpose/wholebody_2d_keypoint/rtmpose-m_8xb64-270e_coco-wholebody-256x192.py) | 256x192 | 60.4 | 66.7 | 2.22 |

+| [RTMPose-l](../rtmpose/wholebody_2d_keypoint/rtmpose-l_8xb64-270e_coco-wholebody-256x192.py) | 256x192 | 63.2 | 69.4 | 4.52 |

+| [RTMPose-l](../rtmpose/wholebody_2d_keypoint/rtmpose-l_8xb32-270e_coco-wholebody-384x288.py) | 384x288 | 67.0 | 72.3 | 10.07 |

+

+### Speed Benchmark

+

+- 图中所示为模型推理时间,单位毫秒。

+- 来自不同社区用户的测试数据用 `|` 分隔开。

+

+| Config | Input Size | ORT

(i7-11700) | TRT-FP16

(GTX 1660Ti) | TRT-FP16

(RTX 3090) | TRT-FP16

(Jetson AGX Orin) | TRT-FP16

(Jetson Orin NX) |

+| :-------------------------------------------- | :--------: | :--------------------: | :---------------------------: | :-------------------------: | :--------------------------------: | :-------------------------------: |

+| [RTMPose-m](../rtmpose/wholebody_2d_keypoint/rtmpose-m_8xb64-270e_coco-wholebody-256x192.py) | 256x192 | 13.50 | 4.00 | 1.17 \| 1.84 | 2.79 | 3.51 |

+| [RTMPose-l](../rtmpose/wholebody_2d_keypoint/rtmpose-l_8xb64-270e_coco-wholebody-256x192.py) | 256x192 | 23.41 | 5.67 | 1.44 \| 2.61 | 3.80 | 4.95 |

+| [RTMPose-l](../rtmpose/wholebody_2d_keypoint/rtmpose-l_8xb32-270e_coco-wholebody-384x288.py) | 384x288 | 44.58 | 7.68 | 1.75 \| 4.24 | 5.08 | 7.20 |

+

+## 如何测试推理速度

+

+我们使用 MMDeploy 提供的 `tools/profiler.py` 脚本进行模型测速。

+

+用户需要准备一个存放测试图片的文件夹`./test_images`,profiler 将随机从该目录下抽取图片用于模型测速。

+

+```shell

+python tools/profiler.py \

+ configs/mmpose/pose-detection_simcc_onnxruntime_dynamic.py \

+ {RTMPOSE_PROJECT}/rtmpose/body_2d_keypoint/rtmpose-m_8xb256-420e_coco-256x192.py \

+ ../test_images \

+ --model {WORK_DIR}/end2end.onnx \

+ --shape 256x192 \

+ --device cpu \

+ --warmup 50 \

+ --num-iter 200

+```

+

+The result is as follows:

+

+```shell

+01/30 15:06:35 - mmengine - INFO - [onnxruntime]-70 times per count: 8.73 ms, 114.50 FPS

+01/30 15:06:36 - mmengine - INFO - [onnxruntime]-90 times per count: 9.05 ms, 110.48 FPS

+01/30 15:06:37 - mmengine - INFO - [onnxruntime]-110 times per count: 9.87 ms, 101.32 FPS

+01/30 15:06:37 - mmengine - INFO - [onnxruntime]-130 times per count: 9.99 ms, 100.10 FPS

+01/30 15:06:38 - mmengine - INFO - [onnxruntime]-150 times per count: 10.39 ms, 96.29 FPS

+01/30 15:06:39 - mmengine - INFO - [onnxruntime]-170 times per count: 10.77 ms, 92.86 FPS

+01/30 15:06:40 - mmengine - INFO - [onnxruntime]-190 times per count: 10.98 ms, 91.05 FPS

+01/30 15:06:40 - mmengine - INFO - [onnxruntime]-210 times per count: 11.19 ms, 89.33 FPS

+01/30 15:06:41 - mmengine - INFO - [onnxruntime]-230 times per count: 11.16 ms, 89.58 FPS

+01/30 15:06:42 - mmengine - INFO - [onnxruntime]-250 times per count: 11.06 ms, 90.41 FPS

+----- Settings:

++------------+---------+

+| batch size | 1 |

+| shape | 256x192 |

+| iterations | 200 |

+| warmup | 50 |

++------------+---------+

+----- Results:

++--------+------------+---------+

+| Stats | Latency/ms | FPS |

++--------+------------+---------+

+| Mean | 11.060 | 90.412 |

+| Median | 11.852 | 84.375 |

+| Min | 7.812 | 128.007 |

+| Max | 13.690 | 73.044 |

++--------+------------+---------+

+```

+

+If you want to learn more details of profiler, you can refer to the [Profiler Docs](https://mmdeploy.readthedocs.io/en/1.x/02-how-to-run/useful_tools.html#profiler).

diff --git a/projects/rtmpose/rtmdet/person/rtmdet_m_640-8xb32_coco-person.py b/projects/rtmpose/rtmdet/person/rtmdet_m_640-8xb32_coco-person.py

new file mode 100644

index 0000000000..620de8dc8f

--- /dev/null

+++ b/projects/rtmpose/rtmdet/person/rtmdet_m_640-8xb32_coco-person.py

@@ -0,0 +1,20 @@

+_base_ = 'mmdet::rtmdet/rtmdet_m_8xb32-300e_coco.py'

+

+checkpoint = 'https://download.openmmlab.com/mmdetection/v3.0/rtmdet/cspnext_rsb_pretrain/cspnext-m_8xb256-rsb-a1-600e_in1k-ecb3bbd9.pth' # noqa

+

+model = dict(

+ backbone=dict(

+ init_cfg=dict(

+ type='Pretrained', prefix='backbone.', checkpoint=checkpoint)),

+ bbox_head=dict(num_classes=1),

+ test_cfg=dict(

+ nms_pre=1000,

+ min_bbox_size=0,

+ score_thr=0.05,

+ nms=dict(type='nms', iou_threshold=0.6),

+ max_per_img=100))

+

+train_dataloader = dict(dataset=dict(metainfo=dict(classes=('person', ))))

+

+val_dataloader = dict(dataset=dict(metainfo=dict(classes=('person', ))))

+test_dataloader = val_dataloader

diff --git a/projects/rtmpose/rtmdet/person/rtmdet_nano_320-8xb32_coco-person.py b/projects/rtmpose/rtmdet/person/rtmdet_nano_320-8xb32_coco-person.py

new file mode 100644

index 0000000000..b93d651735

--- /dev/null

+++ b/projects/rtmpose/rtmdet/person/rtmdet_nano_320-8xb32_coco-person.py

@@ -0,0 +1,110 @@

+_base_ = 'mmdet::rtmdet/rtmdet_l_8xb32-300e_coco.py'

+

+input_shape = 320

+

+model = dict(

+ backbone=dict(

+ deepen_factor=0.33,

+ widen_factor=0.25,

+ use_depthwise=True,

+ ),

+ neck=dict(

+ in_channels=[64, 128, 256],

+ out_channels=64,

+ num_csp_blocks=1,

+ use_depthwise=True,

+ ),

+ bbox_head=dict(

+ in_channels=64,

+ feat_channels=64,

+ share_conv=False,

+ exp_on_reg=False,

+ use_depthwise=True,

+ num_classes=1),

+ test_cfg=dict(

+ nms_pre=1000,

+ min_bbox_size=0,

+ score_thr=0.05,

+ nms=dict(type='nms', iou_threshold=0.6),

+ max_per_img=100))

+

+train_pipeline = [

+ dict(

+ type='LoadImageFromFile',

+ file_client_args={{_base_.file_client_args}}),

+ dict(type='LoadAnnotations', with_bbox=True),

+ dict(

+ type='CachedMosaic',

+ img_scale=(input_shape, input_shape),

+ pad_val=114.0,

+ max_cached_images=20,

+ random_pop=False),

+ dict(

+ type='RandomResize',

+ scale=(input_shape * 2, input_shape * 2),

+ ratio_range=(0.5, 1.5),

+ keep_ratio=True),

+ dict(type='RandomCrop', crop_size=(input_shape, input_shape)),

+ dict(type='YOLOXHSVRandomAug'),

+ dict(type='RandomFlip', prob=0.5),

+ dict(

+ type='Pad',

+ size=(input_shape, input_shape),

+ pad_val=dict(img=(114, 114, 114))),

+ dict(type='PackDetInputs')

+]

+

+train_pipeline_stage2 = [

+ dict(

+ type='LoadImageFromFile',

+ file_client_args={{_base_.file_client_args}}),

+ dict(type='LoadAnnotations', with_bbox=True),

+ dict(

+ type='RandomResize',

+ scale=(input_shape, input_shape),

+ ratio_range=(0.5, 1.5),

+ keep_ratio=True),

+ dict(type='RandomCrop', crop_size=(input_shape, input_shape)),

+ dict(type='YOLOXHSVRandomAug'),

+ dict(type='RandomFlip', prob=0.5),

+ dict(

+ type='Pad',

+ size=(input_shape, input_shape),

+ pad_val=dict(img=(114, 114, 114))),

+ dict(type='PackDetInputs')

+]

+

+test_pipeline = [

+ dict(

+ type='LoadImageFromFile',

+ file_client_args={{_base_.file_client_args}}),

+ dict(type='Resize', scale=(input_shape, input_shape), keep_ratio=True),

+ dict(

+ type='Pad',

+ size=(input_shape, input_shape),

+ pad_val=dict(img=(114, 114, 114))),

+ dict(

+ type='PackDetInputs',

+ meta_keys=('img_id', 'img_path', 'ori_shape', 'img_shape',

+ 'scale_factor'))

+]

+

+train_dataloader = dict(

+ dataset=dict(pipeline=train_pipeline, metainfo=dict(classes=('person', ))))

+

+val_dataloader = dict(

+ dataset=dict(pipeline=test_pipeline, metainfo=dict(classes=('person', ))))

+test_dataloader = val_dataloader

+

+custom_hooks = [

+ dict(

+ type='EMAHook',

+ ema_type='ExpMomentumEMA',

+ momentum=0.0002,

+ update_buffers=True,

+ priority=49),

+ dict(

+ type='PipelineSwitchHook',

+ switch_epoch=280,

+ switch_pipeline=train_pipeline_stage2)

+]

diff --git a/projects/rtmpose/rtmpose/animal_2d_keypoint/rtmpose-m_8xb64-210e_ap10k-256x256.py b/projects/rtmpose/rtmpose/animal_2d_keypoint/rtmpose-m_8xb64-210e_ap10k-256x256.py

new file mode 100644

index 0000000000..0fa5c5d30c

--- /dev/null

+++ b/projects/rtmpose/rtmpose/animal_2d_keypoint/rtmpose-m_8xb64-210e_ap10k-256x256.py

@@ -0,0 +1,246 @@

+_base_ = ['mmpose::_base_/default_runtime.py']

+

+# runtime

+max_epochs = 210

+stage2_num_epochs = 30

+base_lr = 4e-3

+

+train_cfg = dict(max_epochs=max_epochs, val_interval=10)

+randomness = dict(seed=21)

+

+# optimizer

+optim_wrapper = dict(

+ type='OptimWrapper',

+ optimizer=dict(type='AdamW', lr=base_lr, weight_decay=0.05),

+ paramwise_cfg=dict(

+ norm_decay_mult=0, bias_decay_mult=0, bypass_duplicate=True))

+

+# learning rate

+param_scheduler = [

+ dict(

+ type='LinearLR',

+ start_factor=1.0e-5,

+ by_epoch=False,

+ begin=0,

+ end=1000),

+ dict(

+ # use cosine lr from 150 to 300 epoch

+ type='CosineAnnealingLR',

+ eta_min=base_lr * 0.05,

+ begin=max_epochs // 2,

+ end=max_epochs,

+ T_max=max_epochs // 2,

+ by_epoch=True,

+ convert_to_iter_based=True),

+]

+

+# automatically scaling LR based on the actual training batch size

+auto_scale_lr = dict(base_batch_size=512)

+

+# codec settings

+codec = dict(

+ type='SimCCLabel',

+ input_size=(256, 256),

+ sigma=(5.66, 5.66),

+ simcc_split_ratio=2.0,

+ normalize=False,

+ use_dark=False)

+

+# model settings

+model = dict(

+ type='TopdownPoseEstimator',

+ data_preprocessor=dict(

+ type='PoseDataPreprocessor',

+ mean=[123.675, 116.28, 103.53],

+ std=[58.395, 57.12, 57.375],

+ bgr_to_rgb=True),

+ backbone=dict(

+ _scope_='mmdet',

+ type='CSPNeXt',

+ arch='P5',

+ expand_ratio=0.5,

+ deepen_factor=0.67,

+ widen_factor=0.75,

+ out_indices=(4, ),

+ channel_attention=True,

+ norm_cfg=dict(type='SyncBN'),

+ act_cfg=dict(type='SiLU'),

+ init_cfg=dict(

+ type='Pretrained',

+ prefix='backbone.',

+ checkpoint='https://download.openmmlab.com/mmpose/v1/projects/'

+ 'rtmpose/cspnext-m_udp-aic-coco_210e-256x192-f2f7d6f6_20230130.pth' # noqa

+ )),

+ head=dict(

+ type='RTMCCHead',

+ in_channels=768,

+ out_channels=17,

+ input_size=codec['input_size'],

+ in_featuremap_size=(8, 8),

+ simcc_split_ratio=codec['simcc_split_ratio'],

+ final_layer_kernel_size=7,

+ gau_cfg=dict(

+ hidden_dims=256,

+ s=128,

+ expansion_factor=2,

+ dropout_rate=0.,

+ drop_path=0.,

+ act_fn='SiLU',

+ use_rel_bias=False,

+ pos_enc=False),

+ loss=dict(

+ type='KLDiscretLoss',

+ use_target_weight=True,

+ beta=10.,

+ label_softmax=True),

+ decoder=codec),

+ test_cfg=dict(flip_test=True, ))

+

+# base dataset settings

+dataset_type = 'AP10KDataset'

+data_mode = 'topdown'

+data_root = 'data/ap10k/'

+

+file_client_args = dict(backend='disk')

+# file_client_args = dict(

+# backend='petrel',

+# path_mapping=dict({

+# f'{data_root}': 's3://openmmlab/datasets/pose/ap10k/',

+# f'{data_root}': 's3://openmmlab/datasets/pose/ap10k/'

+# }))

+

+# pipelines

+train_pipeline = [

+ dict(type='LoadImage', file_client_args=file_client_args),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='RandomFlip', direction='horizontal'),

+ dict(type='RandomHalfBody'),

+ dict(

+ type='RandomBBoxTransform', scale_factor=[0.6, 1.4], rotate_factor=80),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='mmdet.YOLOXHSVRandomAug'),

+ dict(

+ type='Albumentation',

+ transforms=[

+ dict(type='Blur', p=0.1),

+ dict(type='MedianBlur', p=0.1),

+ dict(

+ type='CoarseDropout',

+ max_holes=1,

+ max_height=0.4,

+ max_width=0.4,

+ min_holes=1,

+ min_height=0.2,

+ min_width=0.2,

+ p=1.0),

+ ]),

+ dict(type='GenerateTarget', encoder=codec),

+ dict(type='PackPoseInputs')

+]

+val_pipeline = [

+ dict(type='LoadImage', file_client_args=file_client_args),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='PackPoseInputs')

+]

+

+train_pipeline_stage2 = [

+ dict(type='LoadImage', file_client_args=file_client_args),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='RandomFlip', direction='horizontal'),

+ dict(type='RandomHalfBody'),

+ dict(

+ type='RandomBBoxTransform',

+ shift_factor=0.,

+ scale_factor=[0.75, 1.25],

+ rotate_factor=60),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='mmdet.YOLOXHSVRandomAug'),

+ dict(

+ type='Albumentation',

+ transforms=[

+ dict(type='Blur', p=0.1),

+ dict(type='MedianBlur', p=0.1),

+ dict(

+ type='CoarseDropout',

+ max_holes=1,

+ max_height=0.4,

+ max_width=0.4,

+ min_holes=1,

+ min_height=0.2,

+ min_width=0.2,

+ p=0.5),

+ ]),

+ dict(type='GenerateTarget', encoder=codec),

+ dict(type='PackPoseInputs')

+]

+

+# data loaders

+train_dataloader = dict(

+ batch_size=64,

+ num_workers=10,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/ap10k-train-split1.json',

+ data_prefix=dict(img='data/'),

+ pipeline=train_pipeline,

+ ))

+val_dataloader = dict(

+ batch_size=32,

+ num_workers=10,

+ persistent_workers=True,

+ drop_last=False,

+ sampler=dict(type='DefaultSampler', shuffle=False, round_up=False),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/ap10k-val-split1.json',

+ data_prefix=dict(img='data/'),

+ test_mode=True,

+ pipeline=val_pipeline,

+ ))

+test_dataloader = dict(

+ batch_size=32,

+ num_workers=10,

+ persistent_workers=True,

+ drop_last=False,

+ sampler=dict(type='DefaultSampler', shuffle=False, round_up=False),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/ap10k-test-split1.json',

+ data_prefix=dict(img='data/'),

+ test_mode=True,

+ pipeline=val_pipeline,

+ ))

+

+# hooks

+default_hooks = dict(

+ checkpoint=dict(save_best='coco/AP', rule='greater', max_keep_ckpts=1))

+

+custom_hooks = [

+ dict(

+ type='EMAHook',

+ ema_type='ExpMomentumEMA',

+ momentum=0.0002,

+ update_buffers=True,

+ priority=49),

+ dict(

+ type='mmdet.PipelineSwitchHook',

+ switch_epoch=max_epochs - stage2_num_epochs,

+ switch_pipeline=train_pipeline_stage2)

+]

+

+# evaluators

+val_evaluator = dict(

+ type='CocoMetric',

+ ann_file=data_root + 'annotations/ap10k-val-split1.json')

+test_evaluator = dict(

+ type='CocoMetric',

+ ann_file=data_root + 'annotations/ap10k-test-split1.json')

diff --git a/projects/rtmpose/rtmpose/body_2d_keypoint/rtmpose-l_8xb256-420e_coco-256x192.py b/projects/rtmpose/rtmpose/body_2d_keypoint/rtmpose-l_8xb256-420e_coco-256x192.py

new file mode 100644

index 0000000000..b44df792a1

--- /dev/null

+++ b/projects/rtmpose/rtmpose/body_2d_keypoint/rtmpose-l_8xb256-420e_coco-256x192.py

@@ -0,0 +1,232 @@

+_base_ = ['mmpose::_base_/default_runtime.py']

+

+# runtime

+max_epochs = 420

+stage2_num_epochs = 30

+base_lr = 4e-3

+

+train_cfg = dict(max_epochs=max_epochs, val_interval=10)

+randomness = dict(seed=21)

+

+# optimizer

+optim_wrapper = dict(

+ type='OptimWrapper',

+ optimizer=dict(type='AdamW', lr=base_lr, weight_decay=0.05),

+ paramwise_cfg=dict(

+ norm_decay_mult=0, bias_decay_mult=0, bypass_duplicate=True))

+

+# learning rate

+param_scheduler = [

+ dict(

+ type='LinearLR',

+ start_factor=1.0e-5,

+ by_epoch=False,

+ begin=0,

+ end=1000),

+ dict(

+ # use cosine lr from 210 to 420 epoch

+ type='CosineAnnealingLR',

+ eta_min=base_lr * 0.05,

+ begin=max_epochs // 2,

+ end=max_epochs,

+ T_max=max_epochs // 2,

+ by_epoch=True,

+ convert_to_iter_based=True),

+]

+

+# automatically scaling LR based on the actual training batch size

+auto_scale_lr = dict(base_batch_size=1024)

+

+# codec settings

+codec = dict(

+ type='SimCCLabel',

+ input_size=(192, 256),

+ sigma=(4.9, 5.66),

+ simcc_split_ratio=2.0,

+ normalize=False,

+ use_dark=False)

+

+# model settings

+model = dict(

+ type='TopdownPoseEstimator',

+ data_preprocessor=dict(

+ type='PoseDataPreprocessor',

+ mean=[123.675, 116.28, 103.53],

+ std=[58.395, 57.12, 57.375],

+ bgr_to_rgb=True),

+ backbone=dict(

+ _scope_='mmdet',

+ type='CSPNeXt',

+ arch='P5',

+ expand_ratio=0.5,

+ deepen_factor=1.,

+ widen_factor=1.,

+ out_indices=(4, ),

+ channel_attention=True,

+ norm_cfg=dict(type='SyncBN'),

+ act_cfg=dict(type='SiLU'),

+ init_cfg=dict(

+ type='Pretrained',

+ prefix='backbone.',

+ checkpoint='https://download.openmmlab.com/mmpose/v1/projects/'

+ 'rtmpose/cspnext-l_udp-aic-coco_210e-256x192-273b7631_20230130.pth' # noqa

+ )),

+ head=dict(

+ type='RTMCCHead',

+ in_channels=1024,

+ out_channels=17,

+ input_size=codec['input_size'],

+ in_featuremap_size=(6, 8),

+ simcc_split_ratio=codec['simcc_split_ratio'],

+ final_layer_kernel_size=7,

+ gau_cfg=dict(

+ hidden_dims=256,

+ s=128,

+ expansion_factor=2,

+ dropout_rate=0.,

+ drop_path=0.,

+ act_fn='SiLU',

+ use_rel_bias=False,

+ pos_enc=False),

+ loss=dict(

+ type='KLDiscretLoss',

+ use_target_weight=True,

+ beta=10.,

+ label_softmax=True),

+ decoder=codec),

+ test_cfg=dict(flip_test=True))

+

+# base dataset settings

+dataset_type = 'CocoDataset'

+data_mode = 'topdown'

+data_root = 'data/coco/'

+

+file_client_args = dict(backend='disk')

+# file_client_args = dict(

+# backend='petrel',

+# path_mapping=dict({

+# f'{data_root}': 's3://openmmlab/datasets/detection/coco/',

+# f'{data_root}': 's3://openmmlab/datasets/detection/coco/'

+# }))

+

+# pipelines

+train_pipeline = [

+ dict(type='LoadImage', file_client_args=file_client_args),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='RandomFlip', direction='horizontal'),

+ dict(type='RandomHalfBody'),

+ dict(

+ type='RandomBBoxTransform', scale_factor=[0.6, 1.4], rotate_factor=80),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='mmdet.YOLOXHSVRandomAug'),

+ dict(

+ type='Albumentation',

+ transforms=[

+ dict(type='Blur', p=0.1),

+ dict(type='MedianBlur', p=0.1),

+ dict(

+ type='CoarseDropout',

+ max_holes=1,

+ max_height=0.4,

+ max_width=0.4,

+ min_holes=1,

+ min_height=0.2,

+ min_width=0.2,

+ p=1.),

+ ]),

+ dict(type='GenerateTarget', encoder=codec),

+ dict(type='PackPoseInputs')

+]

+val_pipeline = [

+ dict(type='LoadImage', file_client_args=file_client_args),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='PackPoseInputs')

+]

+

+train_pipeline_stage2 = [

+ dict(type='LoadImage', file_client_args=file_client_args),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='RandomFlip', direction='horizontal'),

+ dict(type='RandomHalfBody'),

+ dict(

+ type='RandomBBoxTransform',

+ shift_factor=0.,

+ scale_factor=[0.75, 1.25],

+ rotate_factor=60),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='mmdet.YOLOXHSVRandomAug'),

+ dict(

+ type='Albumentation',

+ transforms=[

+ dict(type='Blur', p=0.1),

+ dict(type='MedianBlur', p=0.1),

+ dict(

+ type='CoarseDropout',

+ max_holes=1,

+ max_height=0.4,

+ max_width=0.4,

+ min_holes=1,

+ min_height=0.2,

+ min_width=0.2,

+ p=0.5),

+ ]),

+ dict(type='GenerateTarget', encoder=codec),

+ dict(type='PackPoseInputs')

+]

+

+# data loaders

+train_dataloader = dict(

+ batch_size=256,

+ num_workers=10,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/person_keypoints_train2017.json',

+ data_prefix=dict(img='train2017/'),

+ pipeline=train_pipeline,

+ ))

+val_dataloader = dict(

+ batch_size=64,

+ num_workers=10,

+ persistent_workers=True,

+ drop_last=False,

+ sampler=dict(type='DefaultSampler', shuffle=False, round_up=False),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/person_keypoints_val2017.json',

+ # bbox_file=f'{data_root}person_detection_results/'

+ # 'COCO_val2017_detections_AP_H_56_person.json',

+ data_prefix=dict(img='val2017/'),

+ test_mode=True,

+ pipeline=val_pipeline,

+ ))

+test_dataloader = val_dataloader

+

+# hooks

+default_hooks = dict(

+ checkpoint=dict(save_best='coco/AP', rule='greater', max_keep_ckpts=1))

+

+custom_hooks = [

+ dict(

+ type='EMAHook',

+ ema_type='ExpMomentumEMA',

+ momentum=0.0002,

+ update_buffers=True,

+ priority=49),

+ dict(

+ type='mmdet.PipelineSwitchHook',

+ switch_epoch=max_epochs - stage2_num_epochs,

+ switch_pipeline=train_pipeline_stage2)

+]

+

+# evaluators

+val_evaluator = dict(

+ type='CocoMetric',

+ ann_file=data_root + 'annotations/person_keypoints_val2017.json')

+test_evaluator = val_evaluator

diff --git a/projects/rtmpose/rtmpose/body_2d_keypoint/rtmpose-l_8xb256-420e_coco-384x288.py b/projects/rtmpose/rtmpose/body_2d_keypoint/rtmpose-l_8xb256-420e_coco-384x288.py

new file mode 100644

index 0000000000..2468c40d53

--- /dev/null

+++ b/projects/rtmpose/rtmpose/body_2d_keypoint/rtmpose-l_8xb256-420e_coco-384x288.py

@@ -0,0 +1,232 @@

+_base_ = ['mmpose::_base_/default_runtime.py']

+

+# runtime

+max_epochs = 420

+stage2_num_epochs = 30

+base_lr = 4e-3

+

+train_cfg = dict(max_epochs=max_epochs, val_interval=10)

+randomness = dict(seed=21)

+

+# optimizer

+optim_wrapper = dict(

+ type='OptimWrapper',

+ optimizer=dict(type='AdamW', lr=base_lr, weight_decay=0.05),

+ paramwise_cfg=dict(

+ norm_decay_mult=0, bias_decay_mult=0, bypass_duplicate=True))

+

+# learning rate

+param_scheduler = [

+ dict(

+ type='LinearLR',

+ start_factor=1.0e-5,

+ by_epoch=False,

+ begin=0,

+ end=1000),

+ dict(

+ # use cosine lr from 210 to 420 epoch

+ type='CosineAnnealingLR',

+ eta_min=base_lr * 0.05,

+ begin=max_epochs // 2,

+ end=max_epochs,

+ T_max=max_epochs // 2,

+ by_epoch=True,

+ convert_to_iter_based=True),

+]

+

+# automatically scaling LR based on the actual training batch size

+auto_scale_lr = dict(base_batch_size=1024)

+

+# codec settings

+codec = dict(

+ type='SimCCLabel',

+ input_size=(288, 384),

+ sigma=(6., 6.93),

+ simcc_split_ratio=2.0,

+ normalize=False,

+ use_dark=False)

+

+# model settings

+model = dict(

+ type='TopdownPoseEstimator',

+ data_preprocessor=dict(

+ type='PoseDataPreprocessor',

+ mean=[123.675, 116.28, 103.53],

+ std=[58.395, 57.12, 57.375],

+ bgr_to_rgb=True),

+ backbone=dict(

+ _scope_='mmdet',

+ type='CSPNeXt',

+ arch='P5',

+ expand_ratio=0.5,

+ deepen_factor=1.,

+ widen_factor=1.,

+ out_indices=(4, ),

+ channel_attention=True,

+ norm_cfg=dict(type='SyncBN'),

+ act_cfg=dict(type='SiLU'),

+ init_cfg=dict(

+ type='Pretrained',

+ prefix='backbone.',

+ checkpoint='https://download.openmmlab.com/mmpose/v1/projects/'

+ 'rtmpose/cspnext-l_udp-aic-coco_210e-256x192-273b7631_20230130.pth' # noqa

+ )),

+ head=dict(

+ type='RTMCCHead',

+ in_channels=1024,

+ out_channels=17,

+ input_size=codec['input_size'],

+ in_featuremap_size=(9, 12),

+ simcc_split_ratio=codec['simcc_split_ratio'],

+ final_layer_kernel_size=7,

+ gau_cfg=dict(

+ hidden_dims=256,

+ s=128,

+ expansion_factor=2,

+ dropout_rate=0.,

+ drop_path=0.,

+ act_fn='SiLU',

+ use_rel_bias=False,

+ pos_enc=False),

+ loss=dict(

+ type='KLDiscretLoss',

+ use_target_weight=True,

+ beta=10.,

+ label_softmax=True),

+ decoder=codec),

+ test_cfg=dict(flip_test=True))

+

+# base dataset settings

+dataset_type = 'CocoDataset'

+data_mode = 'topdown'

+data_root = 'data/coco/'

+

+file_client_args = dict(backend='disk')

+# file_client_args = dict(

+# backend='petrel',

+# path_mapping=dict({

+# f'{data_root}': 's3://openmmlab/datasets/detection/coco/',

+# f'{data_root}': 's3://openmmlab/datasets/detection/coco/'

+# }))

+

+# pipelines

+train_pipeline = [

+ dict(type='LoadImage', file_client_args=file_client_args),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='RandomFlip', direction='horizontal'),

+ dict(type='RandomHalfBody'),

+ dict(

+ type='RandomBBoxTransform', scale_factor=[0.6, 1.4], rotate_factor=80),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='mmdet.YOLOXHSVRandomAug'),

+ dict(

+ type='Albumentation',

+ transforms=[

+ dict(type='Blur', p=0.1),

+ dict(type='MedianBlur', p=0.1),

+ dict(

+ type='CoarseDropout',

+ max_holes=1,

+ max_height=0.4,

+ max_width=0.4,

+ min_holes=1,

+ min_height=0.2,

+ min_width=0.2,

+ p=1.),

+ ]),

+ dict(type='GenerateTarget', encoder=codec),

+ dict(type='PackPoseInputs')

+]

+val_pipeline = [

+ dict(type='LoadImage', file_client_args=file_client_args),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='PackPoseInputs')

+]

+

+train_pipeline_stage2 = [

+ dict(type='LoadImage', file_client_args=file_client_args),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='RandomFlip', direction='horizontal'),

+ dict(type='RandomHalfBody'),

+ dict(

+ type='RandomBBoxTransform',

+ shift_factor=0.,

+ scale_factor=[0.75, 1.25],

+ rotate_factor=60),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='mmdet.YOLOXHSVRandomAug'),

+ dict(

+ type='Albumentation',

+ transforms=[

+ dict(type='Blur', p=0.1),

+ dict(type='MedianBlur', p=0.1),

+ dict(

+ type='CoarseDropout',

+ max_holes=1,

+ max_height=0.4,

+ max_width=0.4,

+ min_holes=1,

+ min_height=0.2,

+ min_width=0.2,

+ p=0.5),

+ ]),

+ dict(type='GenerateTarget', encoder=codec),

+ dict(type='PackPoseInputs')

+]

+

+# data loaders

+train_dataloader = dict(

+ batch_size=256,

+ num_workers=10,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/person_keypoints_train2017.json',

+ data_prefix=dict(img='train2017/'),

+ pipeline=train_pipeline,

+ ))

+val_dataloader = dict(

+ batch_size=64,

+ num_workers=10,

+ persistent_workers=True,

+ drop_last=False,

+ sampler=dict(type='DefaultSampler', shuffle=False, round_up=False),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/person_keypoints_val2017.json',

+ # bbox_file=f'{data_root}person_detection_results/'

+ # 'COCO_val2017_detections_AP_H_56_person.json',

+ data_prefix=dict(img='val2017/'),

+ test_mode=True,

+ pipeline=val_pipeline,

+ ))

+test_dataloader = val_dataloader

+

+# hooks

+default_hooks = dict(

+ checkpoint=dict(save_best='coco/AP', rule='greater', max_keep_ckpts=1))

+

+custom_hooks = [

+ dict(

+ type='EMAHook',

+ ema_type='ExpMomentumEMA',

+ momentum=0.0002,

+ update_buffers=True,

+ priority=49),

+ dict(

+ type='mmdet.PipelineSwitchHook',

+ switch_epoch=max_epochs - stage2_num_epochs,

+ switch_pipeline=train_pipeline_stage2)

+]

+

+# evaluators

+val_evaluator = dict(

+ type='CocoMetric',

+ ann_file=data_root + 'annotations/person_keypoints_val2017.json')

+test_evaluator = val_evaluator

diff --git a/projects/rtmpose/rtmpose/body_2d_keypoint/rtmpose-m_8xb256-420e_coco-256x192.py b/projects/rtmpose/rtmpose/body_2d_keypoint/rtmpose-m_8xb256-420e_coco-256x192.py

new file mode 100644

index 0000000000..c7e3061c53

--- /dev/null

+++ b/projects/rtmpose/rtmpose/body_2d_keypoint/rtmpose-m_8xb256-420e_coco-256x192.py

@@ -0,0 +1,232 @@

+_base_ = ['mmpose::_base_/default_runtime.py']

+

+# runtime

+max_epochs = 420

+stage2_num_epochs = 30

+base_lr = 4e-3

+

+train_cfg = dict(max_epochs=max_epochs, val_interval=10)

+randomness = dict(seed=21)

+

+# optimizer

+optim_wrapper = dict(

+ type='OptimWrapper',

+ optimizer=dict(type='AdamW', lr=base_lr, weight_decay=0.05),

+ paramwise_cfg=dict(

+ norm_decay_mult=0, bias_decay_mult=0, bypass_duplicate=True))

+

+# learning rate

+param_scheduler = [

+ dict(

+ type='LinearLR',

+ start_factor=1.0e-5,

+ by_epoch=False,

+ begin=0,

+ end=1000),

+ dict(

+ # use cosine lr from 210 to 420 epoch

+ type='CosineAnnealingLR',

+ eta_min=base_lr * 0.05,

+ begin=max_epochs // 2,

+ end=max_epochs,

+ T_max=max_epochs // 2,

+ by_epoch=True,

+ convert_to_iter_based=True),

+]

+

+# automatically scaling LR based on the actual training batch size

+auto_scale_lr = dict(base_batch_size=1024)

+

+# codec settings

+codec = dict(

+ type='SimCCLabel',

+ input_size=(192, 256),

+ sigma=(4.9, 5.66),

+ simcc_split_ratio=2.0,

+ normalize=False,

+ use_dark=False)

+

+# model settings

+model = dict(

+ type='TopdownPoseEstimator',

+ data_preprocessor=dict(

+ type='PoseDataPreprocessor',

+ mean=[123.675, 116.28, 103.53],

+ std=[58.395, 57.12, 57.375],

+ bgr_to_rgb=True),

+ backbone=dict(

+ _scope_='mmdet',

+ type='CSPNeXt',

+ arch='P5',

+ expand_ratio=0.5,

+ deepen_factor=0.67,

+ widen_factor=0.75,

+ out_indices=(4, ),

+ channel_attention=True,

+ norm_cfg=dict(type='SyncBN'),

+ act_cfg=dict(type='SiLU'),

+ init_cfg=dict(

+ type='Pretrained',

+ prefix='backbone.',

+ checkpoint='https://download.openmmlab.com/mmpose/v1/projects/'

+ 'rtmpose/cspnext-m_udp-aic-coco_210e-256x192-f2f7d6f6_20230130.pth' # noqa

+ )),

+ head=dict(

+ type='RTMCCHead',

+ in_channels=768,

+ out_channels=17,

+ input_size=codec['input_size'],

+ in_featuremap_size=(6, 8),

+ simcc_split_ratio=codec['simcc_split_ratio'],

+ final_layer_kernel_size=7,

+ gau_cfg=dict(

+ hidden_dims=256,

+ s=128,

+ expansion_factor=2,

+ dropout_rate=0.,

+ drop_path=0.,

+ act_fn='SiLU',

+ use_rel_bias=False,

+ pos_enc=False),

+ loss=dict(

+ type='KLDiscretLoss',

+ use_target_weight=True,

+ beta=10.,

+ label_softmax=True),

+ decoder=codec),

+ test_cfg=dict(flip_test=True))

+

+# base dataset settings

+dataset_type = 'CocoDataset'

+data_mode = 'topdown'

+data_root = 'data/coco/'

+

+file_client_args = dict(backend='disk')

+# file_client_args = dict(

+# backend='petrel',

+# path_mapping=dict({

+# f'{data_root}': 's3://openmmlab/datasets/detection/coco/',

+# f'{data_root}': 's3://openmmlab/datasets/detection/coco/'

+# }))

+

+# pipelines

+train_pipeline = [

+ dict(type='LoadImage', file_client_args=file_client_args),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='RandomFlip', direction='horizontal'),

+ dict(type='RandomHalfBody'),

+ dict(

+ type='RandomBBoxTransform', scale_factor=[0.6, 1.4], rotate_factor=80),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='mmdet.YOLOXHSVRandomAug'),

+ dict(

+ type='Albumentation',

+ transforms=[

+ dict(type='Blur', p=0.1),

+ dict(type='MedianBlur', p=0.1),

+ dict(

+ type='CoarseDropout',

+ max_holes=1,

+ max_height=0.4,

+ max_width=0.4,

+ min_holes=1,

+ min_height=0.2,

+ min_width=0.2,

+ p=1.),

+ ]),

+ dict(type='GenerateTarget', encoder=codec),

+ dict(type='PackPoseInputs')

+]

+val_pipeline = [

+ dict(type='LoadImage', file_client_args=file_client_args),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='PackPoseInputs')

+]

+

+train_pipeline_stage2 = [

+ dict(type='LoadImage', file_client_args=file_client_args),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='RandomFlip', direction='horizontal'),

+ dict(type='RandomHalfBody'),

+ dict(

+ type='RandomBBoxTransform',

+ shift_factor=0.,

+ scale_factor=[0.75, 1.25],

+ rotate_factor=60),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='mmdet.YOLOXHSVRandomAug'),

+ dict(

+ type='Albumentation',

+ transforms=[

+ dict(type='Blur', p=0.1),

+ dict(type='MedianBlur', p=0.1),

+ dict(

+ type='CoarseDropout',

+ max_holes=1,

+ max_height=0.4,

+ max_width=0.4,

+ min_holes=1,

+ min_height=0.2,

+ min_width=0.2,

+ p=0.5),

+ ]),

+ dict(type='GenerateTarget', encoder=codec),

+ dict(type='PackPoseInputs')

+]

+

+# data loaders

+train_dataloader = dict(

+ batch_size=256,

+ num_workers=10,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/person_keypoints_train2017.json',

+ data_prefix=dict(img='train2017/'),

+ pipeline=train_pipeline,

+ ))

+val_dataloader = dict(

+ batch_size=64,

+ num_workers=10,

+ persistent_workers=True,

+ drop_last=False,

+ sampler=dict(type='DefaultSampler', shuffle=False, round_up=False),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/person_keypoints_val2017.json',

+ # bbox_file=f'{data_root}person_detection_results/'

+ # 'COCO_val2017_detections_AP_H_56_person.json',

+ data_prefix=dict(img='val2017/'),

+ test_mode=True,

+ pipeline=val_pipeline,

+ ))

+test_dataloader = val_dataloader

+

+# hooks

+default_hooks = dict(

+ checkpoint=dict(save_best='coco/AP', rule='greater', max_keep_ckpts=1))

+

+custom_hooks = [

+ dict(

+ type='EMAHook',

+ ema_type='ExpMomentumEMA',

+ momentum=0.0002,

+ update_buffers=True,

+ priority=49),

+ dict(

+ type='mmdet.PipelineSwitchHook',

+ switch_epoch=max_epochs - stage2_num_epochs,

+ switch_pipeline=train_pipeline_stage2)

+]

+

+# evaluators

+val_evaluator = dict(

+ type='CocoMetric',

+ ann_file=data_root + 'annotations/person_keypoints_val2017.json')

+test_evaluator = val_evaluator

diff --git a/projects/rtmpose/rtmpose/body_2d_keypoint/rtmpose-m_8xb256-420e_coco-384x288.py b/projects/rtmpose/rtmpose/body_2d_keypoint/rtmpose-m_8xb256-420e_coco-384x288.py

new file mode 100644

index 0000000000..16a7b0c493

--- /dev/null

+++ b/projects/rtmpose/rtmpose/body_2d_keypoint/rtmpose-m_8xb256-420e_coco-384x288.py

@@ -0,0 +1,232 @@

+_base_ = ['mmpose::_base_/default_runtime.py']

+

+# runtime

+max_epochs = 420

+stage2_num_epochs = 30

+base_lr = 4e-3

+

+train_cfg = dict(max_epochs=max_epochs, val_interval=10)

+randomness = dict(seed=21)

+

+# optimizer

+optim_wrapper = dict(

+ type='OptimWrapper',

+ optimizer=dict(type='AdamW', lr=base_lr, weight_decay=0.05),

+ paramwise_cfg=dict(

+ norm_decay_mult=0, bias_decay_mult=0, bypass_duplicate=True))

+

+# learning rate

+param_scheduler = [

+ dict(

+ type='LinearLR',

+ start_factor=1.0e-5,

+ by_epoch=False,

+ begin=0,

+ end=1000),

+ dict(

+ # use cosine lr from 210 to 420 epoch

+ type='CosineAnnealingLR',

+ eta_min=base_lr * 0.05,

+ begin=max_epochs // 2,

+ end=max_epochs,

+ T_max=max_epochs // 2,

+ by_epoch=True,

+ convert_to_iter_based=True),

+]

+

+# automatically scaling LR based on the actual training batch size

+auto_scale_lr = dict(base_batch_size=1024)

+

+# codec settings

+codec = dict(

+ type='SimCCLabel',

+ input_size=(288, 384),

+ sigma=(6., 6.93),

+ simcc_split_ratio=2.0,

+ normalize=False,

+ use_dark=False)

+

+# model settings

+model = dict(

+ type='TopdownPoseEstimator',

+ data_preprocessor=dict(

+ type='PoseDataPreprocessor',

+ mean=[123.675, 116.28, 103.53],

+ std=[58.395, 57.12, 57.375],

+ bgr_to_rgb=True),

+ backbone=dict(

+ _scope_='mmdet',

+ type='CSPNeXt',

+ arch='P5',

+ expand_ratio=0.5,

+ deepen_factor=0.67,

+ widen_factor=0.75,

+ out_indices=(4, ),

+ channel_attention=True,

+ norm_cfg=dict(type='SyncBN'),

+ act_cfg=dict(type='SiLU'),

+ init_cfg=dict(

+ type='Pretrained',

+ prefix='backbone.',

+ checkpoint='https://download.openmmlab.com/mmpose/v1/projects/'

+ 'rtmpose/cspnext-m_udp-aic-coco_210e-256x192-f2f7d6f6_20230130.pth' # noqa

+ )),

+ head=dict(

+ type='RTMCCHead',

+ in_channels=768,

+ out_channels=17,

+ input_size=codec['input_size'],

+ in_featuremap_size=(9, 12),

+ simcc_split_ratio=codec['simcc_split_ratio'],

+ final_layer_kernel_size=7,

+ gau_cfg=dict(

+ hidden_dims=256,

+ s=128,

+ expansion_factor=2,

+ dropout_rate=0.,

+ drop_path=0.,

+ act_fn='SiLU',

+ use_rel_bias=False,

+ pos_enc=False),

+ loss=dict(

+ type='KLDiscretLoss',

+ use_target_weight=True,

+ beta=10.,

+ label_softmax=True),

+ decoder=codec),

+ test_cfg=dict(flip_test=True))

+

+# base dataset settings

+dataset_type = 'CocoDataset'

+data_mode = 'topdown'

+data_root = 'data/coco/'

+

+file_client_args = dict(backend='disk')

+# file_client_args = dict(

+# backend='petrel',

+# path_mapping=dict({

+# f'{data_root}': 's3://openmmlab/datasets/detection/coco/',

+# f'{data_root}': 's3://openmmlab/datasets/detection/coco/'

+# }))

+

+# pipelines

+train_pipeline = [

+ dict(type='LoadImage', file_client_args=file_client_args),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='RandomFlip', direction='horizontal'),

+ dict(type='RandomHalfBody'),

+ dict(

+ type='RandomBBoxTransform', scale_factor=[0.6, 1.4], rotate_factor=80),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='mmdet.YOLOXHSVRandomAug'),

+ dict(

+ type='Albumentation',

+ transforms=[

+ dict(type='Blur', p=0.1),

+ dict(type='MedianBlur', p=0.1),

+ dict(

+ type='CoarseDropout',

+ max_holes=1,

+ max_height=0.4,

+ max_width=0.4,

+ min_holes=1,

+ min_height=0.2,

+ min_width=0.2,

+ p=1.),

+ ]),

+ dict(type='GenerateTarget', encoder=codec),

+ dict(type='PackPoseInputs')

+]

+val_pipeline = [

+ dict(type='LoadImage', file_client_args=file_client_args),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='PackPoseInputs')

+]

+

+train_pipeline_stage2 = [

+ dict(type='LoadImage', file_client_args=file_client_args),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='RandomFlip', direction='horizontal'),

+ dict(type='RandomHalfBody'),

+ dict(

+ type='RandomBBoxTransform',

+ shift_factor=0.,

+ scale_factor=[0.75, 1.25],

+ rotate_factor=60),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='mmdet.YOLOXHSVRandomAug'),

+ dict(

+ type='Albumentation',

+ transforms=[

+ dict(type='Blur', p=0.1),

+ dict(type='MedianBlur', p=0.1),

+ dict(

+ type='CoarseDropout',

+ max_holes=1,

+ max_height=0.4,

+ max_width=0.4,

+ min_holes=1,

+ min_height=0.2,

+ min_width=0.2,

+ p=0.5),

+ ]),

+ dict(type='GenerateTarget', encoder=codec),

+ dict(type='PackPoseInputs')

+]

+

+# data loaders

+train_dataloader = dict(

+ batch_size=256,

+ num_workers=10,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/person_keypoints_train2017.json',

+ data_prefix=dict(img='train2017/'),

+ pipeline=train_pipeline,

+ ))

+val_dataloader = dict(

+ batch_size=64,

+ num_workers=10,

+ persistent_workers=True,

+ drop_last=False,

+ sampler=dict(type='DefaultSampler', shuffle=False, round_up=False),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/person_keypoints_val2017.json',

+ # bbox_file=f'{data_root}person_detection_results/'

+ # 'COCO_val2017_detections_AP_H_56_person.json',

+ data_prefix=dict(img='val2017/'),

+ test_mode=True,

+ pipeline=val_pipeline,

+ ))

+test_dataloader = val_dataloader

+

+# hooks

+default_hooks = dict(

+ checkpoint=dict(save_best='coco/AP', rule='greater', max_keep_ckpts=1))

+

+custom_hooks = [

+ dict(

+ type='EMAHook',

+ ema_type='ExpMomentumEMA',

+ momentum=0.0002,

+ update_buffers=True,

+ priority=49),

+ dict(

+ type='mmdet.PipelineSwitchHook',

+ switch_epoch=max_epochs - stage2_num_epochs,

+ switch_pipeline=train_pipeline_stage2)

+]

+

+# evaluators

+val_evaluator = dict(

+ type='CocoMetric',

+ ann_file=data_root + 'annotations/person_keypoints_val2017.json')

+test_evaluator = val_evaluator

diff --git a/projects/rtmpose/rtmpose/body_2d_keypoint/rtmpose-s_8xb256-420e_coco-256x192.py b/projects/rtmpose/rtmpose/body_2d_keypoint/rtmpose-s_8xb256-420e_coco-256x192.py

new file mode 100644

index 0000000000..dca589bef9

--- /dev/null

+++ b/projects/rtmpose/rtmpose/body_2d_keypoint/rtmpose-s_8xb256-420e_coco-256x192.py

@@ -0,0 +1,232 @@

+_base_ = ['mmpose::_base_/default_runtime.py']

+

+# runtime

+max_epochs = 420

+stage2_num_epochs = 30

+base_lr = 4e-3

+

+train_cfg = dict(max_epochs=max_epochs, val_interval=10)

+randomness = dict(seed=21)

+

+# optimizer

+optim_wrapper = dict(

+ type='OptimWrapper',

+ optimizer=dict(type='AdamW', lr=base_lr, weight_decay=0.),

+ paramwise_cfg=dict(

+ norm_decay_mult=0, bias_decay_mult=0, bypass_duplicate=True))

+

+# learning rate

+param_scheduler = [

+ dict(

+ type='LinearLR',

+ start_factor=1.0e-5,

+ by_epoch=False,

+ begin=0,

+ end=1000),

+ dict(

+ # use cosine lr from 210 to 420 epoch

+ type='CosineAnnealingLR',

+ eta_min=base_lr * 0.05,

+ begin=max_epochs // 2,

+ end=max_epochs,

+ T_max=max_epochs // 2,

+ by_epoch=True,

+ convert_to_iter_based=True),

+]

+

+# automatically scaling LR based on the actual training batch size

+auto_scale_lr = dict(base_batch_size=1024)

+

+# codec settings

+codec = dict(

+ type='SimCCLabel',

+ input_size=(192, 256),

+ sigma=(4.9, 5.66),

+ simcc_split_ratio=2.0,

+ normalize=False,

+ use_dark=False)

+

+# model settings

+model = dict(

+ type='TopdownPoseEstimator',

+ data_preprocessor=dict(

+ type='PoseDataPreprocessor',

+ mean=[123.675, 116.28, 103.53],

+ std=[58.395, 57.12, 57.375],

+ bgr_to_rgb=True),

+ backbone=dict(

+ _scope_='mmdet',

+ type='CSPNeXt',

+ arch='P5',

+ expand_ratio=0.5,

+ deepen_factor=0.33,

+ widen_factor=0.5,

+ out_indices=(4, ),

+ channel_attention=True,

+ norm_cfg=dict(type='SyncBN'),

+ act_cfg=dict(type='SiLU'),

+ init_cfg=dict(

+ type='Pretrained',

+ prefix='backbone.',

+ checkpoint='https://download.openmmlab.com/mmpose/v1/projects/'

+ 'rtmpose/cspnext-s_udp-aic-coco_210e-256x192-92f5a029_20230130.pth' # noqa

+ )),

+ head=dict(

+ type='RTMCCHead',

+ in_channels=512,

+ out_channels=17,

+ input_size=codec['input_size'],

+ in_featuremap_size=(6, 8),

+ simcc_split_ratio=codec['simcc_split_ratio'],

+ final_layer_kernel_size=7,

+ gau_cfg=dict(

+ hidden_dims=256,

+ s=128,

+ expansion_factor=2,

+ dropout_rate=0.,

+ drop_path=0.,

+ act_fn='SiLU',

+ use_rel_bias=False,

+ pos_enc=False),

+ loss=dict(

+ type='KLDiscretLoss',

+ use_target_weight=True,

+ beta=10.,

+ label_softmax=True),

+ decoder=codec),

+ test_cfg=dict(flip_test=True))

+

+# base dataset settings

+dataset_type = 'CocoDataset'

+data_mode = 'topdown'

+data_root = 'data/coco/'

+

+file_client_args = dict(backend='disk')

+# file_client_args = dict(

+# backend='petrel',

+# path_mapping=dict({

+# f'{data_root}': 's3://openmmlab/datasets/detection/coco/',

+# f'{data_root}': 's3://openmmlab/datasets/detection/coco/'

+# }))

+

+# pipelines

+train_pipeline = [

+ dict(type='LoadImage', file_client_args=file_client_args),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='RandomFlip', direction='horizontal'),

+ dict(type='RandomHalfBody'),

+ dict(

+ type='RandomBBoxTransform', scale_factor=[0.6, 1.4], rotate_factor=80),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='mmdet.YOLOXHSVRandomAug'),

+ dict(

+ type='Albumentation',

+ transforms=[

+ dict(type='Blur', p=0.1),

+ dict(type='MedianBlur', p=0.1),

+ dict(

+ type='CoarseDropout',

+ max_holes=1,

+ max_height=0.4,

+ max_width=0.4,

+ min_holes=1,

+ min_height=0.2,

+ min_width=0.2,

+ p=1.),

+ ]),

+ dict(type='GenerateTarget', encoder=codec),

+ dict(type='PackPoseInputs')

+]

+val_pipeline = [

+ dict(type='LoadImage', file_client_args=file_client_args),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='PackPoseInputs')

+]

+

+train_pipeline_stage2 = [

+ dict(type='LoadImage', file_client_args=file_client_args),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='RandomFlip', direction='horizontal'),

+ dict(type='RandomHalfBody'),

+ dict(

+ type='RandomBBoxTransform',

+ shift_factor=0.,

+ scale_factor=[0.75, 1.25],

+ rotate_factor=60),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='mmdet.YOLOXHSVRandomAug'),

+ dict(

+ type='Albumentation',

+ transforms=[

+ dict(type='Blur', p=0.1),

+ dict(type='MedianBlur', p=0.1),

+ dict(

+ type='CoarseDropout',

+ max_holes=1,

+ max_height=0.4,

+ max_width=0.4,

+ min_holes=1,

+ min_height=0.2,

+ min_width=0.2,

+ p=0.5),

+ ]),

+ dict(type='GenerateTarget', encoder=codec),

+ dict(type='PackPoseInputs')

+]

+

+# data loaders

+train_dataloader = dict(

+ batch_size=256,

+ num_workers=10,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/person_keypoints_train2017.json',

+ data_prefix=dict(img='train2017/'),

+ pipeline=train_pipeline,

+ ))

+val_dataloader = dict(

+ batch_size=64,

+ num_workers=10,

+ persistent_workers=True,

+ drop_last=False,

+ sampler=dict(type='DefaultSampler', shuffle=False, round_up=False),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/person_keypoints_val2017.json',

+ # bbox_file=f'{data_root}person_detection_results/'

+ # 'COCO_val2017_detections_AP_H_56_person.json',

+ data_prefix=dict(img='val2017/'),

+ test_mode=True,

+ pipeline=val_pipeline,

+ ))

+test_dataloader = val_dataloader

+

+# hooks

+default_hooks = dict(

+ checkpoint=dict(save_best='coco/AP', rule='greater', max_keep_ckpts=1))

+

+custom_hooks = [

+ dict(

+ type='EMAHook',

+ ema_type='ExpMomentumEMA',

+ momentum=0.0002,

+ update_buffers=True,

+ priority=49),

+ dict(

+ type='mmdet.PipelineSwitchHook',

+ switch_epoch=max_epochs - stage2_num_epochs,