diff --git a/.pre-commit-config.yaml b/.pre-commit-config.yaml

index c461b497ce1..13e4cc406c4 100644

--- a/.pre-commit-config.yaml

+++ b/.pre-commit-config.yaml

@@ -43,3 +43,9 @@ repos:

hooks:

- id: docformatter

args: ["--in-place", "--wrap-descriptions", "79"]

+ - repo: https://github.com/open-mmlab/pre-commit-hooks

+ rev: master # Use the ref you want to point at

+ hooks:

+ - id: check-algo-readme

+ - id: check-copyright

+ args: ["mmdet"] # replace the dir_to_check with your expected directory to check

diff --git a/README.md b/README.md

index 09231c7603d..8fd8f10234a 100644

--- a/README.md

+++ b/README.md

@@ -13,10 +13,10 @@

-[📘Documentation](https://mmdetection.readthedocs.io/en/v2.19.1/) |

-[🛠️Installation](https://mmdetection.readthedocs.io/en/v2.19.1/get_started.html) |

-[👀Model Zoo](https://mmdetection.readthedocs.io/en/v2.19.1/model_zoo.html) |

-[🆕Update News](https://mmdetection.readthedocs.io/en/v2.19.1/changelog.html) |

+[📘Documentation](https://mmdetection.readthedocs.io/en/v2.20.0/) |

+[🛠️Installation](https://mmdetection.readthedocs.io/en/v2.20.0/get_started.html) |

+[👀Model Zoo](https://mmdetection.readthedocs.io/en/v2.20.0/model_zoo.html) |

+[🆕Update News](https://mmdetection.readthedocs.io/en/v2.20.0/changelog.html) |

[🚀Ongoing Projects](https://github.com/open-mmlab/mmdetection/projects) |

[🤔Reporting Issues](https://github.com/open-mmlab/mmdetection/issues/new/choose)

@@ -60,11 +60,10 @@ This project is released under the [Apache 2.0 license](LICENSE).

## Changelog

-**2.19.1** was released in 14/12/2021:

+**2.20.0** was released in 27/12/2021:

-- Release [YOLOX](configs/yolox/README.md) COCO pretrained models

-- Add abstract and sketch of the papers in readmes

-- Fix some weight initialization bugs

+- Support [TOOD](configs/tood/README.md): Task-aligned One-stage Object Detection (ICCV 2021 Oral)

+- Support resuming from the latest checkpoint automatically

Please refer to [changelog.md](docs/en/changelog.md) for details and release history.

@@ -149,6 +148,7 @@ Results and models are available in the [model zoo](docs/en/model_zoo.md).

- [x] [YOLOX (ArXiv'2021)](configs/yolox/README.md)

- [x] [SOLO (ECCV'2020)](configs/solo/README.md)

- [x] [QueryInst (ICCV'2021)](configs/queryinst/README.md)

+- [x] [TOOD (ICCV'2021)](configs/tood/README.md)

Some other methods are also supported in [projects using MMDetection](./docs/en/projects.md).

@@ -209,3 +209,5 @@ If you use this toolbox or benchmark in your research, please cite this project.

- [MMFlow](https://github.com/open-mmlab/mmflow): OpenMMLab optical flow toolbox and benchmark.

- [MMFewShot](https://github.com/open-mmlab/mmfewshot): OpenMMLab fewshot learning toolbox and benchmark.

- [MMHuman3D](https://github.com/open-mmlab/mmhuman3d): OpenMMLab 3D human parametric model toolbox and benchmark.

+- [MMSelfSup](https://github.com/open-mmlab/mmselfsup): OpenMMLab self-supervised learning toolbox and benchmark.

+- [MMRazor](https://github.com/open-mmlab/mmrazor): OpenMMLab Model Compression Toolbox and Benchmark.
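Reviewer note on the "resuming from the latest checkpoint automatically" changelog entry above: this diff does not show how the latest checkpoint is chosen. The sketch below is one plausible way to do it, not MMDetection's implementation. The function name `latest_checkpoint`, the `latest.pth` marker file, and the `epoch_<N>.pth` naming pattern are all assumptions made for illustration.

```python
import re
from pathlib import Path
from typing import Optional


def latest_checkpoint(work_dir: str, suffix: str = "pth") -> Optional[Path]:
    """Hypothetical helper: return the newest checkpoint in work_dir, or None."""
    root = Path(work_dir)
    if not root.is_dir():
        return None

    # Assumption: a "latest.<suffix>" marker file, when present, wins.
    marker = root / f"latest.{suffix}"
    if marker.exists():
        return marker

    # Otherwise pick the file whose name ends in the largest number,
    # so that epoch_12.pth is preferred over epoch_3.pth.
    best, best_step = None, -1
    for path in root.glob(f"*.{suffix}"):
        match = re.search(r"(\d+)$", path.stem)
        if match and int(match.group(1)) > best_step:
            best, best_step = path, int(match.group(1))
    return best
```

A training script could call such a helper at start-up and fall back to training from scratch when it returns `None`.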

diff --git a/README_zh-CN.md b/README_zh-CN.md

index 846b4f4a93d..bca9a2a4e65 100644

--- a/README_zh-CN.md

+++ b/README_zh-CN.md

@@ -13,10 +13,10 @@

-[📘使用文档](https://mmdetection.readthedocs.io/zh_CN/v2.19.1/) |

-[🛠️安装教程](https://mmdetection.readthedocs.io/zh_CN/v2.19.1/get_started.html) |

-[👀模型库](https://mmdetection.readthedocs.io/zh_CN/v2.19.1/model_zoo.html) |

-[🆕更新日志](https://mmdetection.readthedocs.io/en/v2.19.1/changelog.html) |

+[📘使用文档](https://mmdetection.readthedocs.io/zh_CN/v2.20.0/) |

+[🛠️安装教程](https://mmdetection.readthedocs.io/zh_CN/v2.20.0/get_started.html) |

+[👀模型库](https://mmdetection.readthedocs.io/zh_CN/v2.20.0/model_zoo.html) |

+[🆕更新日志](https://mmdetection.readthedocs.io/en/v2.20.0/changelog.html) |

[🚀进行中的项目](https://github.com/open-mmlab/mmdetection/projects) |

[🤔报告问题](https://github.com/open-mmlab/mmdetection/issues/new/choose)

@@ -59,10 +59,9 @@ MMDetection 是一个基于 PyTorch 的目标检测开源工具箱。它是 [Ope

## 更新日志

-最新的 **2.19.1** 版本已经在 2021.12.14 发布:

-- 发布 [YOLOX](configs/yolox/README.md) COCO 预训练模型

-- 在自述文件中添加论文的摘要和草图

-- 修复一些权重初始化错误

+最新的 **2.20.0** 版本已经在 2021.12.27 发布:

+- 支持了 ICCV 2021 Oral 方法 [TOOD](configs/tood/README.md): Task-aligned One-stage Object Detection

+- 支持了自动从最新的存储参数节点恢复训练

如果想了解更多版本更新细节和历史信息,请阅读[更新日志](docs/changelog.md)。

@@ -146,6 +145,7 @@ MMDetection 是一个基于 PyTorch 的目标检测开源工具箱。它是 [Ope

- [x] [YOLOX (ArXiv'2021)](configs/yolox/README.md)

- [x] [SOLO (ECCV'2020)](configs/solo/README.md)

- [x] [QueryInst (ICCV'2021)](configs/queryinst/README.md)

+- [x] [TOOD (ICCV'2021)](configs/tood/README.md)

我们在[基于 MMDetection 的项目](./docs/zh_cn/projects.md)中列举了一些其他的支持的算法。

@@ -206,6 +206,8 @@ MMDetection 是一款由来自不同高校和企业的研发人员共同参与

- [MMFlow](https://github.com/open-mmlab/mmflow): OpenMMLab 光流估计工具箱与测试基准

- [MMFewShot](https://github.com/open-mmlab/mmfewshot): OpenMMLab 少样本学习工具箱与测试基准

- [MMHuman3D](https://github.com/open-mmlab/mmhuman3d): OpenMMLab 人体参数化模型工具箱与测试基准

+- [MMSelfSup](https://github.com/open-mmlab/mmselfsup): OpenMMLab 自监督学习工具箱与测试基准

+- [MMRazor](https://github.com/open-mmlab/mmrazor): OpenMMLab 模型压缩工具箱与测试基准

## 欢迎加入 OpenMMLab 社区

diff --git a/configs/resnest/metafile.yml b/configs/resnest/metafile.yml

index d7f68e5cd8b..3323fad027a 100644

--- a/configs/resnest/metafile.yml

+++ b/configs/resnest/metafile.yml

@@ -11,7 +11,7 @@ Collections:

Paper:

URL: https://arxiv.org/abs/2004.08955

Title: 'ResNeSt: Split-Attention Networks'

- README: configs/renest/README.md

+ README: configs/resnest/README.md

Code:

URL: https://github.com/open-mmlab/mmdetection/blob/v2.7.0/mmdet/models/backbones/resnest.py#L273

Version: v2.7.0

diff --git a/configs/strong_baselines/README.md b/configs/strong_baselines/README.md

index c1487ef99a3..5ada104bbe2 100644

--- a/configs/strong_baselines/README.md

+++ b/configs/strong_baselines/README.md

@@ -1,6 +1,6 @@

# Strong Baselines

-We train Mask R-CNN with large-scale jittor and longer schedule as strong baselines.

+We train Mask R-CNN with large-scale jitter and longer schedule as strong baselines.

The modifications follow those in [Detectron2](https://github.com/facebookresearch/detectron2/tree/master/configs/new_baselines).

## Results and models

diff --git a/configs/tood/README.md b/configs/tood/README.md

new file mode 100644

index 00000000000..b1522e78565

--- /dev/null

+++ b/configs/tood/README.md

@@ -0,0 +1,44 @@

+# TOOD: Task-aligned One-stage Object Detection

+

+## Abstract

+

+

+

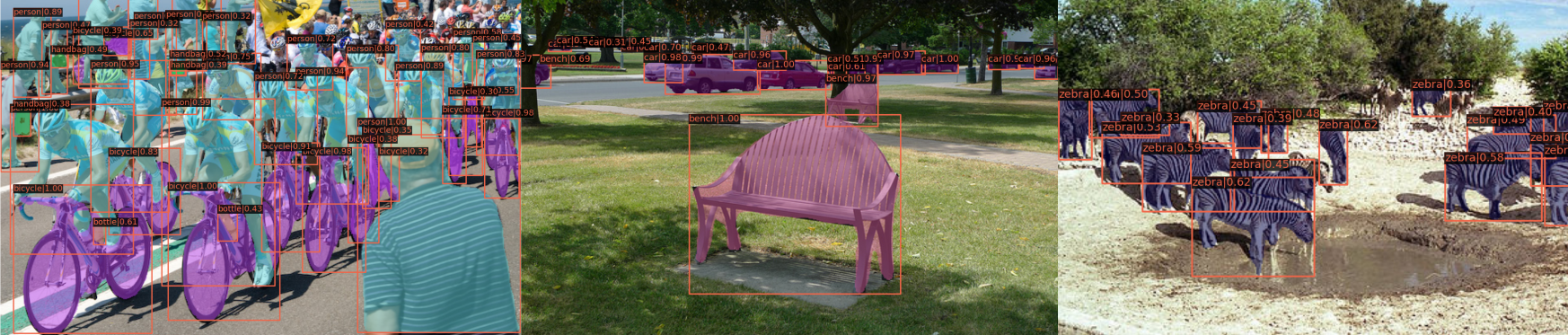

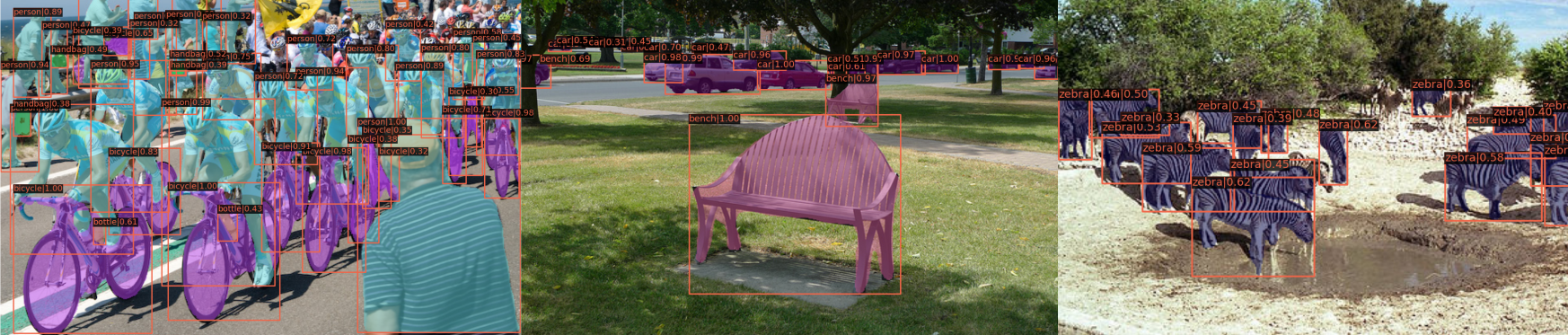

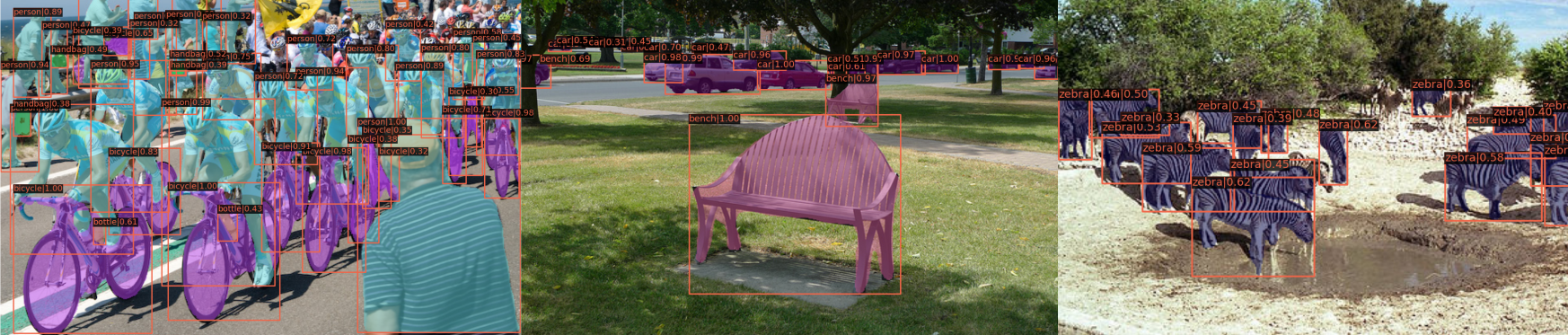

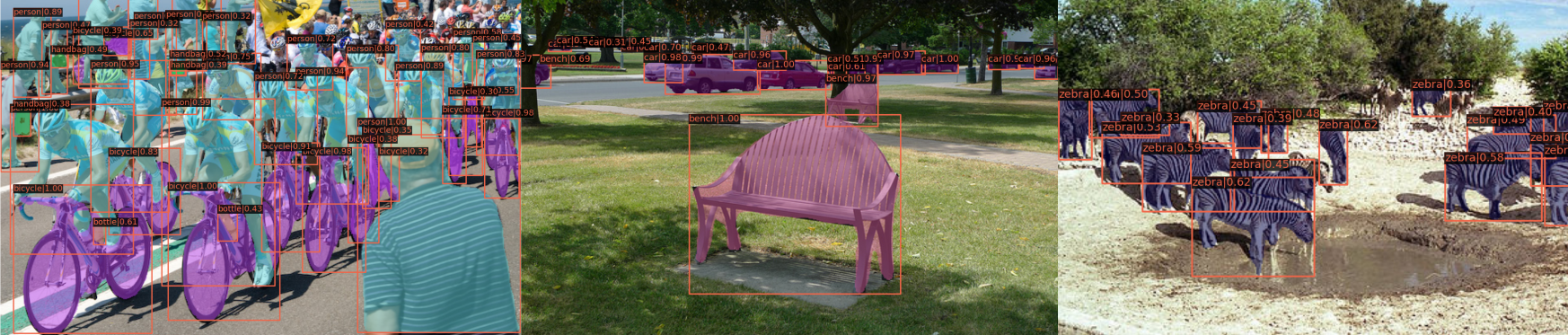

+One-stage object detection is commonly implemented by optimizing two sub-tasks: object classification and localization, using heads with two parallel branches, which might lead to a certain level of spatial misalignment in predictions between the two tasks. In this work, we propose a Task-aligned One-stage Object Detection (TOOD) that explicitly aligns the two tasks in a learning-based manner. First, we design a novel Task-aligned Head (T-Head) which offers a better balance between learning task-interactive and task-specific features, as well as a greater flexibility to learn the alignment via a task-aligned predictor. Second, we propose Task Alignment Learning (TAL) to explicitly pull closer (or even unify) the optimal anchors for the two tasks during training via a designed sample assignment scheme and a task-aligned loss. Extensive experiments are conducted on MS-COCO, where TOOD achieves a 51.1 AP at single-model single-scale testing. This surpasses the recent one-stage detectors by a large margin, such as ATSS (47.7 AP), GFL (48.2 AP), and PAA (49.0 AP), with fewer parameters and FLOPs. Qualitative results also demonstrate the effectiveness of TOOD for better aligning the tasks of object classification and localization.

+

+

+
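Reviewer note on the abstract above: it mentions a "sample assignment scheme" without giving details. The sketch below shows one way task-aligned assignment could work, assuming an alignment score of the form `s**alpha * u**beta`, where `s` is the classification score and `u` is the IoU, as described in the TOOD paper. The function names, the values `alpha=1.0` and `beta=6.0`, and the top-k selection are illustrative assumptions, not code from this patch.

```python
from typing import List


def alignment_scores(cls_scores: List[float], ious: List[float],
                     alpha: float = 1.0, beta: float = 6.0) -> List[float]:
    """Hypothetical helper: per-anchor alignment score s**alpha * u**beta."""
    return [(s ** alpha) * (u ** beta) for s, u in zip(cls_scores, ious)]


def top_k_positives(metrics: List[float], k: int) -> List[int]:
    """Return the indices of the k anchors with the largest alignment score."""
    order = sorted(range(len(metrics)), key=lambda i: metrics[i], reverse=True)
    return sorted(order[:k])


# Three candidate anchors for one ground-truth box (made-up numbers).
scores = alignment_scores([0.9, 0.5, 0.8], [0.5, 0.9, 0.8])
positives = top_k_positives(scores, k=2)
```

With these made-up numbers, the anchor with the highest classification score but the lowest IoU is not selected. This illustrates the abstract's point that both tasks should agree on which anchors are the best.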
