Major features

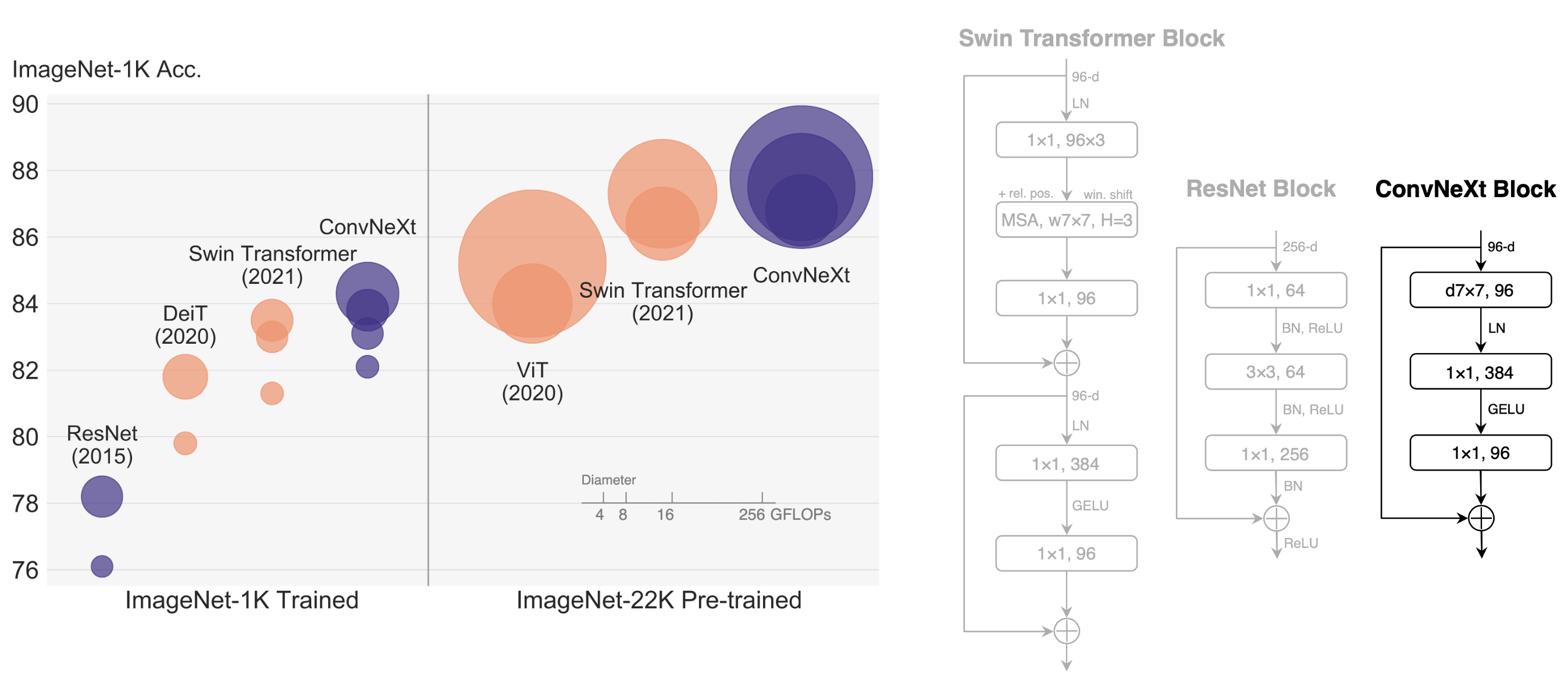

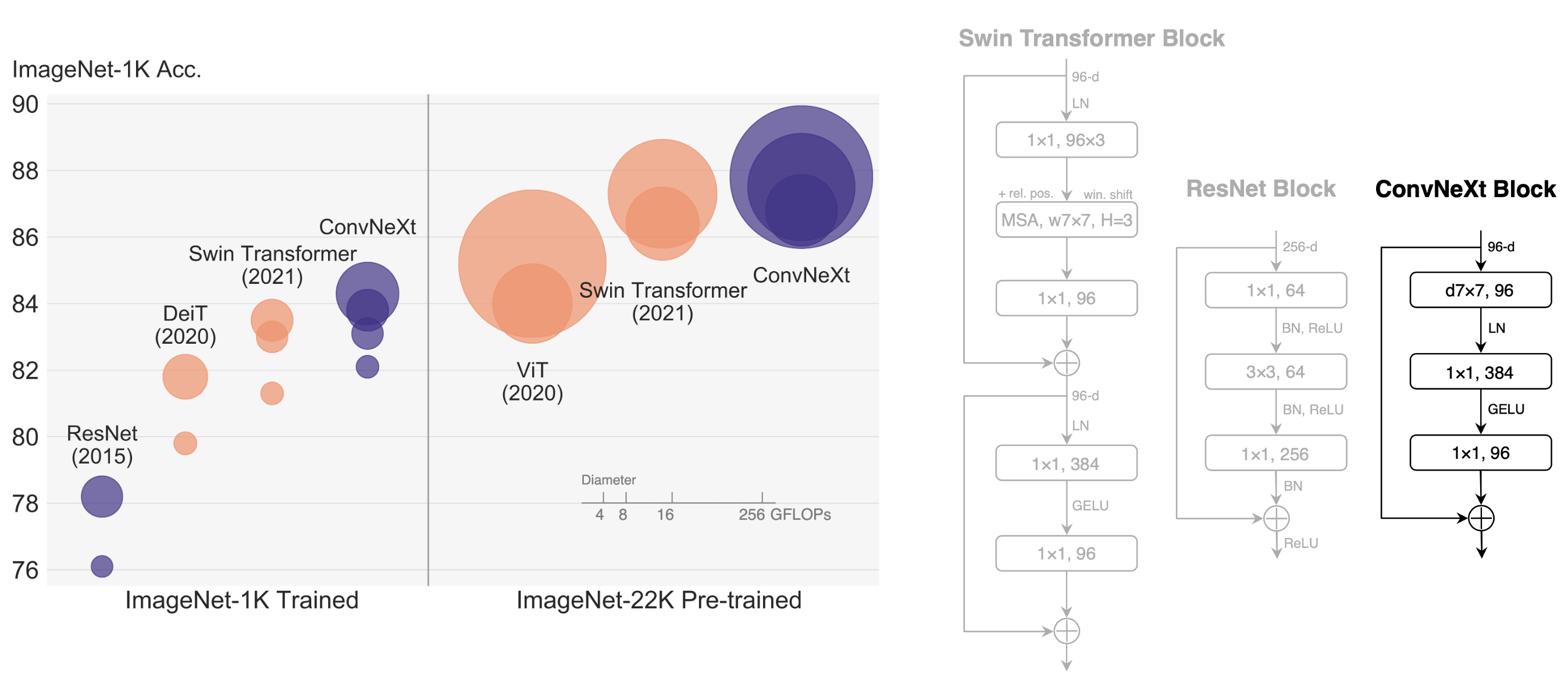

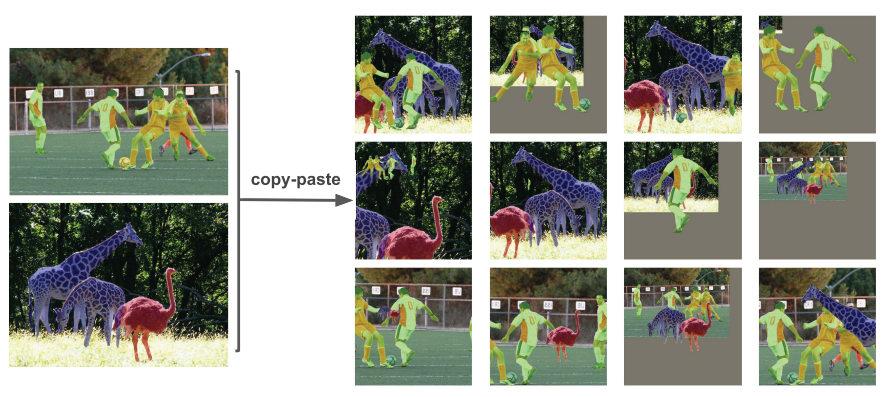

@@ -68,22 +73,42 @@ The master branch works with **PyTorch 1.5+**.
 Apart from MMDetection, we also released a library [mmcv](https://github.com/open-mmlab/mmcv) for computer vision research, which is heavily depended on by this toolbox.
 
-## License
-
-This project is released under the [Apache 2.0 license](LICENSE).
-
-## Changelog
+## What's New
 
-**2.24.1** was released in 30/4/2022:
+**2.25.0** was released on 1/6/2022:
 
-- Support [Simple Copy Paste](configs/simple_copy_paste)
-- Support automatically scaling LR according to GPU number and samples per GPU
-- Support Class Aware Sampler that improves performance on OpenImages Dataset
+- Support the dedicated `MMDetWandbHook`
+- Support [ConvNeXt](configs/convnext), [DDOD](configs/ddod), [SOLOv2](configs/solov2)
+- Support [Mask2Former](configs/mask2former) for instance segmentation
+- Rename [config files of Mask2Former](configs/mask2former)
 
 Please refer to [changelog.md](docs/en/changelog.md) for details and release history.
 
 For compatibility changes between different versions of MMDetection, please refer to [compatibility.md](docs/en/compatibility.md).
 
+## Installation
+
+Please refer to [Installation](docs/en/get_started.md/#Installation) for installation instructions.
+
+## Getting Started
+
+Please see [get_started.md](docs/en/get_started.md) for the basic usage of MMDetection.
+We provide a [colab tutorial](demo/MMDet_Tutorial.ipynb) and an [instance segmentation colab tutorial](demo/MMDet_InstanceSeg_Tutorial.ipynb), as well as other tutorials for:
+
+- [with existing dataset](docs/en/1_exist_data_model.md)
+- [with new dataset](docs/en/2_new_data_model.md)
+- [with existing dataset and new model](docs/en/3_exist_data_new_model.md)
+- [learn about configs](docs/en/tutorials/config.md)
+- [customize datasets](docs/en/tutorials/customize_dataset.md)
+- [customize data pipelines](docs/en/tutorials/data_pipeline.md)
+- [customize models](docs/en/tutorials/customize_models.md)
+- [customize runtime settings](docs/en/tutorials/customize_runtime.md)
+- [customize losses](docs/en/tutorials/customize_losses.md)
+- [finetuning models](docs/en/tutorials/finetune.md)
+- [export a model to ONNX](docs/en/tutorials/pytorch2onnx.md)
+- [export ONNX to TRT](docs/en/tutorials/onnx2tensorrt.md)
+- [weight initialization](docs/en/tutorials/init_cfg.md)
+- [how to xxx](docs/en/tutorials/how_to.md)
+
 ## Overview of Benchmark and Model Zoo
 
 Results and models are available in the [model zoo](docs/en/model_zoo.md).
@@ -132,6 +157,7 @@ Results and models are available in the [model zoo](docs/en/model_zoo.md).
-

-[📘Documentation](https://mmdetection.readthedocs.io/zh_CN/v2.21.0/) |

-[🛠️Installation](https://mmdetection.readthedocs.io/zh_CN/v2.21.0/get_started.html) |

-[👀Model Zoo](https://mmdetection.readthedocs.io/zh_CN/v2.21.0/model_zoo.html) |

-[🆕Update News](https://mmdetection.readthedocs.io/en/v2.21.0/changelog.html) |

+[📘Documentation](https://mmdetection.readthedocs.io/zh_CN/stable/) |

+[🛠️Installation](https://mmdetection.readthedocs.io/zh_CN/stable/get_started.html) |

+[👀Model Zoo](https://mmdetection.readthedocs.io/zh_CN/stable/model_zoo.html) |

+[🆕Update News](https://mmdetection.readthedocs.io/en/stable/changelog.html) |

[🚀Ongoing Projects](https://github.com/open-mmlab/mmdetection/projects) |

[🤔Reporting Issues](https://github.com/open-mmlab/mmdetection/issues/new/choose)

-## Introduction

+


[English](README.md) | Simplified Chinese

+

+

+## Introduction

+

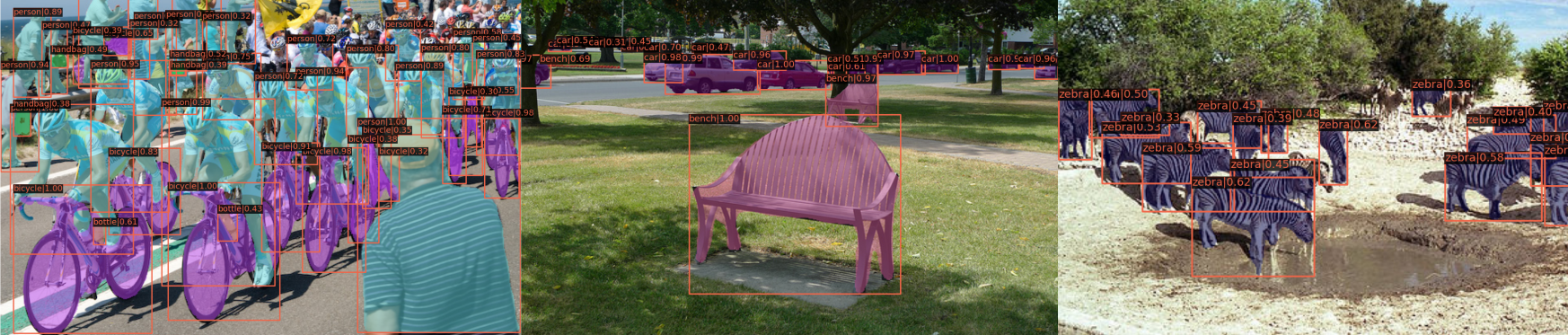

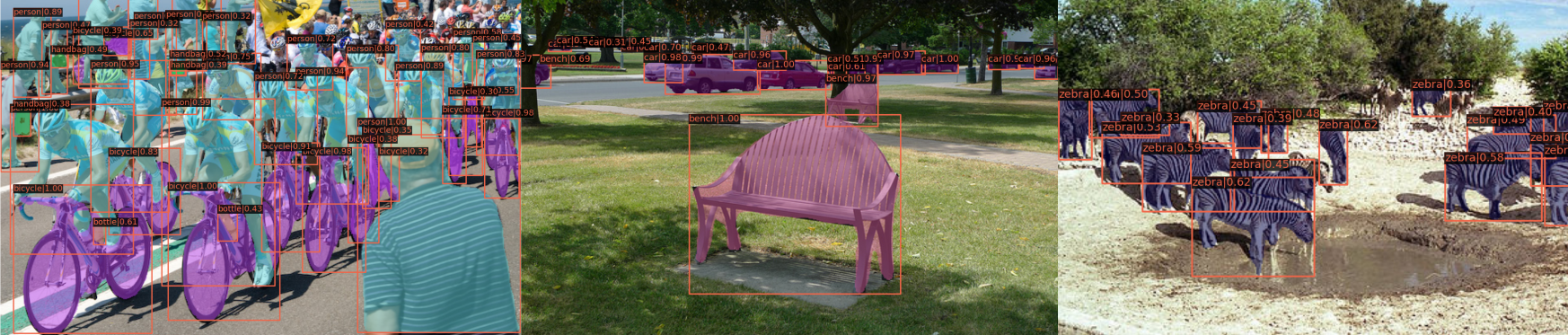

MMDetection is an open source object detection toolbox based on PyTorch. It is a part of the [OpenMMLab](https://openmmlab.com/) project.

The master branch works with PyTorch 1.5+.

+ +

+

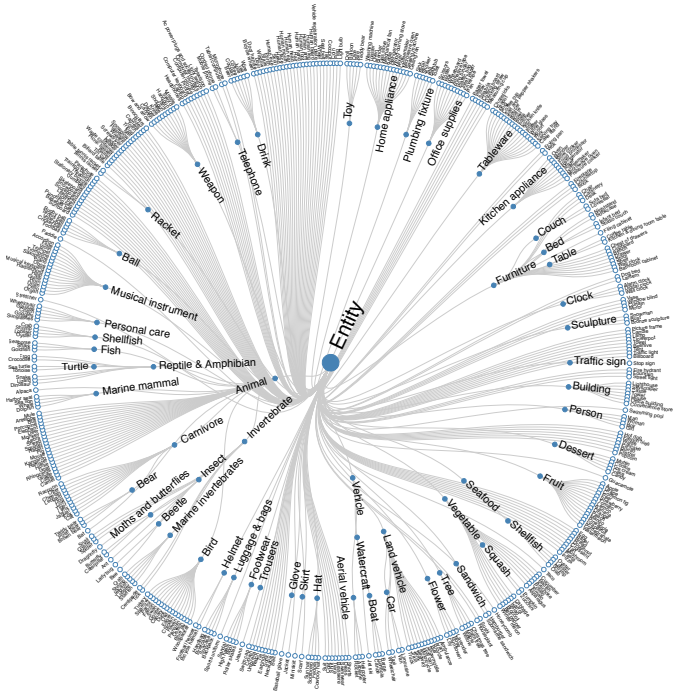

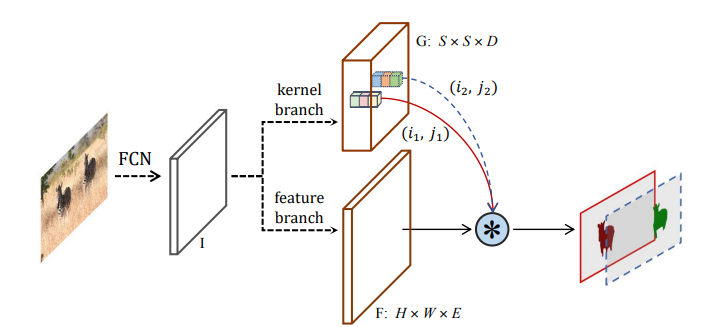

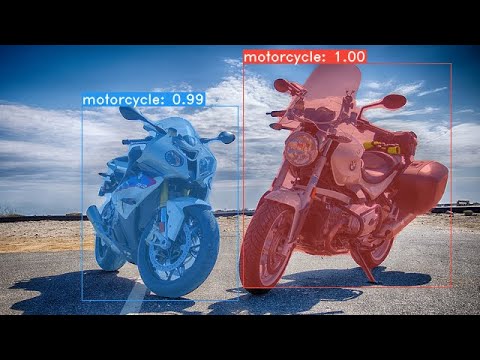

Major features

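@@ -67,22 +72,45 @@ MMDetection is an open source object detection toolbox based on PyTorch. It is a part of [Ope
 Apart from MMDetection, we have also open-sourced [MMCV](https://github.com/open-mmlab/mmcv), a foundational computer vision library that is MMDetection's main dependency.
 
-## License
+## What's New
 
-This project is released under the [Apache 2.0 license](LICENSE).
-
-## Changelog
+The latest version, **2.25.0**, was released on 2022.06.01:
 
-The latest version, **2.24.1**, was released on 2022.04.30:
-
-- Support the [Simple Copy Paste](configs/simple_copy_paste) algorithm
-- Support automatically scaling the learning rate according to the total batch size during training
-- Support a class-aware sampler to improve performance on the OpenImages dataset
+- Support the more feature-rich `MMDetWandbHook`
+- Support the [ConvNeXt](configs/convnext), [DDOD](configs/ddod) and [SOLOv2](configs/solov2) algorithms
+- [Mask2Former](configs/mask2former) now supports instance segmentation
+- The original Mask2Former panoptic segmentation config files have been renamed to make room for the new Mask2Former instance segmentation models
 
 For more details on version updates and release history, please read the [changelog](docs/en/changelog.md).
 
 For compatibility between different versions of MMDetection, please refer to the [compatibility notes](docs/zh_cn/compatibility.md).
 
+## Installation
+
+Please refer to the [installation instructions](docs/zh_cn/get_started.md/#Installation) to install MMDetection.
+
+## Tutorials
+
+Please refer to the [quick start guide](docs/zh_cn/get_started.md) to learn the basic usage of MMDetection.
+We provide a [detection colab tutorial](demo/MMDet_Tutorial.ipynb) and an [instance segmentation colab tutorial](demo/MMDet_InstanceSeg_Tutorial.ipynb), together with complete step-by-step tutorials for beginners. Other tutorials are listed below:
+
+- [Inference with existing models on standard datasets](docs/zh_cn/1_exist_data_model.md)
+- [Training on custom datasets](docs/zh_cn/2_new_data_model.md)
+- [Training custom models on standard datasets](docs/zh_cn/3_exist_data_new_model.md)
+- [Learn about configs](docs/zh_cn/tutorials/config.md)
+- [Customize datasets](docs/zh_cn/tutorials/customize_dataset.md)
+- [Customize data pipelines](docs/zh_cn/tutorials/data_pipeline.md)
+- [Customize models](docs/zh_cn/tutorials/customize_models.md)
+- [Customize runtime settings](docs/zh_cn/tutorials/customize_runtime.md)
+- [Customize losses](docs/zh_cn/tutorials/customize_losses.md)
+- [Finetuning models](docs/zh_cn/tutorials/finetune.md)
+- [PyTorch to ONNX model conversion](docs/zh_cn/tutorials/pytorch2onnx.md)
+- [ONNX to TensorRT model conversion](docs/zh_cn/tutorials/onnx2tensorrt.md)
+- [Weight initialization](docs/zh_cn/tutorials/init_cfg.md)
+- [how to xxx](docs/zh_cn/tutorials/how_to.md)
+
+We also provide a [collection of Chinese-language articles explaining MMDetection](docs/zh_cn/article.md).
+
 ## Benchmark and Model Zoo
 
 Results and models can be found in the [model zoo](docs/zh_cn/model_zoo.md).
@@ -131,6 +159,7 @@ MMDetection is an open source object detection toolbox based on PyTorch. It is a part of [Ope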

+ +

+

+

+## Results and models

+

+| Method | Backbone | Pretrain | Lr schd | Multi-scale crop | FP16 | Mem (GB) | box AP | mask AP | Config | Download |

+| :----------------: | :--------: | :---------: | :-----: | :--------------: | :--: | :------: | :----: | :-----: | :-------------------------------------------------------------------------------------: | :---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------: |

+| Mask R-CNN | ConvNeXt-T | ImageNet-1K | 3x | yes | yes | 7.3 | 46.2 | 41.7 | [config](./mask_rcnn_convnext-t_p4_w7_fpn_fp16_ms-crop_3x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/convnext/mask_rcnn_convnext-t_p4_w7_fpn_fp16_ms-crop_3x_coco/mask_rcnn_convnext-t_p4_w7_fpn_fp16_ms-crop_3x_coco_20220426_154953-050731f4.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/convnext/mask_rcnn_convnext-t_p4_w7_fpn_fp16_ms-crop_3x_coco/mask_rcnn_convnext-t_p4_w7_fpn_fp16_ms-crop_3x_coco_20220426_154953.log.json) |

+| Cascade Mask R-CNN | ConvNeXt-T | ImageNet-1K | 3x | yes | yes | 9.0 | 50.3 | 43.6 | [config](./cascade_mask_rcnn_convnext-t_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/convnext/cascade_mask_rcnn_convnext-t_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco/cascade_mask_rcnn_convnext-t_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco_20220509_204200-8f07c40b.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/convnext/cascade_mask_rcnn_convnext-t_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco/cascade_mask_rcnn_convnext-t_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco_20220509_204200.log.json) |

+| Cascade Mask R-CNN | ConvNeXt-S | ImageNet-1K | 3x | yes | yes | 12.3 | 51.8 | 44.8 | [config](./cascade_mask_rcnn_convnext-s_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/convnext/cascade_mask_rcnn_convnext-s_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco/cascade_mask_rcnn_convnext-s_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco_20220510_201004-3d24f5a4.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/convnext/cascade_mask_rcnn_convnext-s_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco/cascade_mask_rcnn_convnext-s_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco_20220510_201004.log.json) |

+

+**Note**:

+

+- The ConvNeXt backbone requires [MMClassification](https://github.com/open-mmlab/mmclassification), which provides many backbones for downstream tasks, to be installed first.

+

+```shell

+pip install "mmcls>=0.22.0"

+```

+

+- Performance varies between runs: `Cascade Mask R-CNN` results may fluctuate by about 0.2 mAP.

+

+## Citation

+

+```bibtex

+@inproceedings{liu2022convnet,

+ title={A ConvNet for the 2020s},

+ author={Liu, Zhuang and Mao, Hanzi and Wu, Chao-Yuan and Feichtenhofer, Christoph and Darrell, Trevor and Xie, Saining},

+ booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

+ year={2022}

+}

+```
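Every ConvNeXt config in this PR loads the backbone through `custom_imports = dict(imports=['mmcls.models'], allow_failed_imports=False)`, so loading a config fails outright when MMClassification is missing or too old. The following is a minimal pre-flight check, assuming the package is installed under the distribution name `mmcls` (as in the `pip install` line above); `parse_version` and `mmcls_is_new_enough` are hypothetical helpers, not part of MMDetection, and the version parser is deliberately simplistic (it keeps only the leading digits of the first three fields).

```python
from importlib import metadata


def parse_version(version: str) -> tuple:
    """Turn '0.22.1' into (0, 22, 1); non-numeric suffixes such as 'rc1' are dropped."""
    parts = []
    for piece in version.split(".")[:3]:
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        parts.append(int(digits) if digits else 0)
    # Pad so that '0.22' compares equal to '0.22.0'.
    while len(parts) < 3:
        parts.append(0)
    return tuple(parts)


def mmcls_is_new_enough(minimum: str = "0.22.0") -> bool:
    """Return True if an installed mmcls distribution satisfies the minimum version."""
    try:
        installed = metadata.version("mmcls")
    except metadata.PackageNotFoundError:
        return False
    return parse_version(installed) >= parse_version(minimum)


if __name__ == "__main__":
    print("mmcls >= 0.22.0 available:", mmcls_is_new_enough())
```

Running this before training surfaces a missing dependency early, instead of as an import error raised while the config is parsed.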

diff --git a/configs/convnext/cascade_mask_rcnn_convnext-s_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco.py b/configs/convnext/cascade_mask_rcnn_convnext-s_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco.py

new file mode 100644

index 00000000000..0ccc31d2488

--- /dev/null

+++ b/configs/convnext/cascade_mask_rcnn_convnext-s_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco.py

@@ -0,0 +1,32 @@

+_base_ = './cascade_mask_rcnn_convnext-t_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco.py' # noqa

+

+# please install mmcls>=0.22.0

+# import mmcls.models to trigger register_module in mmcls

+custom_imports = dict(imports=['mmcls.models'], allow_failed_imports=False)

+checkpoint_file = 'https://download.openmmlab.com/mmclassification/v0/convnext/downstream/convnext-small_3rdparty_32xb128-noema_in1k_20220301-303e75e3.pth' # noqa

+

+model = dict(

+ backbone=dict(

+ _delete_=True,

+ type='mmcls.ConvNeXt',

+ arch='small',

+ out_indices=[0, 1, 2, 3],

+ drop_path_rate=0.6,

+ layer_scale_init_value=1.0,

+ gap_before_final_norm=False,

+ init_cfg=dict(

+ type='Pretrained', checkpoint=checkpoint_file,

+ prefix='backbone.')))

+

+optimizer = dict(

+ _delete_=True,

+ constructor='LearningRateDecayOptimizerConstructor',

+ type='AdamW',

+ lr=0.0002,

+ betas=(0.9, 0.999),

+ weight_decay=0.05,

+ paramwise_cfg={

+ 'decay_rate': 0.7,

+ 'decay_type': 'layer_wise',

+ 'num_layers': 12

+ })
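The ConvNeXt-S config above only states what differs from its `_base_` (the ConvNeXt-T Cascade config). `_delete_=True` inside `backbone` and `optimizer` tells the config system to discard the inherited dict rather than merge into it; everything else, including the neck's `in_channels=[96, 192, 384, 768]` (ConvNeXt-T and ConvNeXt-S share these stage widths), is inherited unchanged. The hypothetical `merge_cfg` below is a simplified sketch of that merge rule, not mmcv's `Config` implementation, which additionally handles loading `_base_` files and other cases.

```python
import copy


def merge_cfg(base: dict, override: dict) -> dict:
    """Recursively merge ``override`` into a copy of ``base``.

    A child dict containing ``_delete_=True`` replaces the parent's dict
    instead of being merged into it.
    """
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict):
            value = dict(value)  # shallow copy so the caller's dict is not mutated
            delete = value.pop("_delete_", False)
            if not delete and isinstance(merged.get(key), dict):
                merged[key] = merge_cfg(merged[key], value)
                continue
            # Replacement path: merging into {} also strips nested _delete_ keys.
            merged[key] = merge_cfg({}, value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Trimmed-down stand-ins for the tiny (base) and small (child) configs.
tiny = {
    "backbone": {"type": "mmcls.ConvNeXt", "arch": "tiny",
                 "drop_path_rate": 0.4, "out_indices": [0, 1, 2, 3]},
    "neck": {"in_channels": [96, 192, 384, 768]},
}
small = merge_cfg(tiny, {"backbone": {"_delete_": True, "type": "mmcls.ConvNeXt",
                                      "arch": "small", "drop_path_rate": 0.6}})
print(small)
```

Because the backbone dict is replaced wholesale, any key the child omits (here `out_indices`) disappears. This is why the real ConvNeXt-S config restates `out_indices`, `layer_scale_init_value` and the other backbone fields.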

diff --git a/configs/convnext/cascade_mask_rcnn_convnext-t_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco.py b/configs/convnext/cascade_mask_rcnn_convnext-t_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco.py

new file mode 100644

index 00000000000..93304c001da

--- /dev/null

+++ b/configs/convnext/cascade_mask_rcnn_convnext-t_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco.py

@@ -0,0 +1,149 @@

+_base_ = [

+ '../_base_/models/cascade_mask_rcnn_r50_fpn.py',

+ '../_base_/datasets/coco_instance.py',

+ '../_base_/schedules/schedule_1x.py', '../_base_/default_runtime.py'

+]

+

+# please install mmcls>=0.22.0

+# import mmcls.models to trigger register_module in mmcls

+custom_imports = dict(imports=['mmcls.models'], allow_failed_imports=False)

+checkpoint_file = 'https://download.openmmlab.com/mmclassification/v0/convnext/downstream/convnext-tiny_3rdparty_32xb128-noema_in1k_20220301-795e9634.pth' # noqa

+

+model = dict(

+ backbone=dict(

+ _delete_=True,

+ type='mmcls.ConvNeXt',

+ arch='tiny',

+ out_indices=[0, 1, 2, 3],

+ drop_path_rate=0.4,

+ layer_scale_init_value=1.0,

+ gap_before_final_norm=False,

+ init_cfg=dict(

+ type='Pretrained', checkpoint=checkpoint_file,

+ prefix='backbone.')),

+ neck=dict(in_channels=[96, 192, 384, 768]),

+ roi_head=dict(bbox_head=[

+ dict(

+ type='ConvFCBBoxHead',

+ num_shared_convs=4,

+ num_shared_fcs=1,

+ in_channels=256,

+ conv_out_channels=256,

+ fc_out_channels=1024,

+ roi_feat_size=7,

+ num_classes=80,

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[0., 0., 0., 0.],

+ target_stds=[0.1, 0.1, 0.2, 0.2]),

+ reg_class_agnostic=False,

+ reg_decoded_bbox=True,

+ norm_cfg=dict(type='SyncBN', requires_grad=True),

+ loss_cls=dict(

+ type='CrossEntropyLoss', use_sigmoid=False, loss_weight=1.0),

+ loss_bbox=dict(type='GIoULoss', loss_weight=10.0)),

+ dict(

+ type='ConvFCBBoxHead',

+ num_shared_convs=4,

+ num_shared_fcs=1,

+ in_channels=256,

+ conv_out_channels=256,

+ fc_out_channels=1024,

+ roi_feat_size=7,

+ num_classes=80,

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[0., 0., 0., 0.],

+ target_stds=[0.05, 0.05, 0.1, 0.1]),

+ reg_class_agnostic=False,

+ reg_decoded_bbox=True,

+ norm_cfg=dict(type='SyncBN', requires_grad=True),

+ loss_cls=dict(

+ type='CrossEntropyLoss', use_sigmoid=False, loss_weight=1.0),

+ loss_bbox=dict(type='GIoULoss', loss_weight=10.0)),

+ dict(

+ type='ConvFCBBoxHead',

+ num_shared_convs=4,

+ num_shared_fcs=1,

+ in_channels=256,

+ conv_out_channels=256,

+ fc_out_channels=1024,

+ roi_feat_size=7,

+ num_classes=80,

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[0., 0., 0., 0.],

+ target_stds=[0.033, 0.033, 0.067, 0.067]),

+ reg_class_agnostic=False,

+ reg_decoded_bbox=True,

+ norm_cfg=dict(type='SyncBN', requires_grad=True),

+ loss_cls=dict(

+ type='CrossEntropyLoss', use_sigmoid=False, loss_weight=1.0),

+ loss_bbox=dict(type='GIoULoss', loss_weight=10.0))

+ ]))

+

+img_norm_cfg = dict(

+ mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)

+

+# augmentation strategy originates from DETR / Sparse RCNN

+train_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(type='LoadAnnotations', with_bbox=True, with_mask=True),

+ dict(type='RandomFlip', flip_ratio=0.5),

+ dict(

+ type='AutoAugment',

+ policies=[[

+ dict(

+ type='Resize',

+ img_scale=[(480, 1333), (512, 1333), (544, 1333), (576, 1333),

+ (608, 1333), (640, 1333), (672, 1333), (704, 1333),

+ (736, 1333), (768, 1333), (800, 1333)],

+ multiscale_mode='value',

+ keep_ratio=True)

+ ],

+ [

+ dict(

+ type='Resize',

+ img_scale=[(400, 1333), (500, 1333), (600, 1333)],

+ multiscale_mode='value',

+ keep_ratio=True),

+ dict(

+ type='RandomCrop',

+ crop_type='absolute_range',

+ crop_size=(384, 600),

+ allow_negative_crop=True),

+ dict(

+ type='Resize',

+ img_scale=[(480, 1333), (512, 1333), (544, 1333),

+ (576, 1333), (608, 1333), (640, 1333),

+ (672, 1333), (704, 1333), (736, 1333),

+ (768, 1333), (800, 1333)],

+ multiscale_mode='value',

+ override=True,

+ keep_ratio=True)

+ ]]),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(type='Pad', size_divisor=32),

+ dict(type='DefaultFormatBundle'),

+ dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels', 'gt_masks']),

+]

+data = dict(train=dict(pipeline=train_pipeline), persistent_workers=True)

+

+optimizer = dict(

+ _delete_=True,

+ constructor='LearningRateDecayOptimizerConstructor',

+ type='AdamW',

+ lr=0.0002,

+ betas=(0.9, 0.999),

+ weight_decay=0.05,

+ paramwise_cfg={

+ 'decay_rate': 0.7,

+ 'decay_type': 'layer_wise',

+ 'num_layers': 6

+ })

+

+lr_config = dict(warmup_iters=1000, step=[27, 33])

+runner = dict(max_epochs=36)

+

+# you need to set mode='dynamic' if you are using pytorch<=1.5.0

+fp16 = dict(loss_scale=dict(init_scale=512))
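Both AutoAugment policies in the training pipeline end in a multi-scale `Resize` with `multiscale_mode='value'` and `keep_ratio=True`. The second policy resizes, crops and then resizes again, which is why its last `Resize` sets `override=True`. The sketch below shows, to our understanding of mmcv's `rescale_size`, how a keep-ratio target size is derived from an `img_scale` such as `(800, 1333)`, and how 'value' mode picks one listed scale uniformly at random. `keep_ratio_size` and `sample_value_mode` are hypothetical names used only for illustration.

```python
import random


def keep_ratio_size(width: int, height: int, img_scale: tuple) -> tuple:
    """Return the (width, height) produced by a keep-ratio resize.

    The longer image edge is capped at max(img_scale) and the shorter edge at
    min(img_scale); the smaller factor wins, so the aspect ratio is kept and
    the order of the two numbers inside img_scale does not matter.
    """
    long_edge, short_edge = max(img_scale), min(img_scale)
    factor = min(long_edge / max(width, height), short_edge / min(width, height))
    return int(width * factor + 0.5), int(height * factor + 0.5)


def sample_value_mode(scales: list, rng: random.Random) -> tuple:
    """multiscale_mode='value': pick one of the listed scales uniformly."""
    return rng.choice(scales)


scales = [(480, 1333), (512, 1333), (544, 1333), (576, 1333), (608, 1333),
          (640, 1333), (672, 1333), (704, 1333), (736, 1333), (768, 1333),
          (800, 1333)]
rng = random.Random(0)
chosen = sample_value_mode(scales, rng)
print(chosen, keep_ratio_size(640, 480, chosen))
```

For a 640x480 image, the scale `(800, 1333)` yields 1067x800 because the short-edge limit is the binding one. The scale `(480, 1333)` leaves the image unchanged.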

diff --git a/configs/convnext/mask_rcnn_convnext-t_p4_w7_fpn_fp16_ms-crop_3x_coco.py b/configs/convnext/mask_rcnn_convnext-t_p4_w7_fpn_fp16_ms-crop_3x_coco.py

new file mode 100644

index 00000000000..e8a283f5483

--- /dev/null

+++ b/configs/convnext/mask_rcnn_convnext-t_p4_w7_fpn_fp16_ms-crop_3x_coco.py

@@ -0,0 +1,90 @@

+_base_ = [

+ '../_base_/models/mask_rcnn_r50_fpn.py',

+ '../_base_/datasets/coco_instance.py',

+ '../_base_/schedules/schedule_1x.py', '../_base_/default_runtime.py'

+]

+

+# please install mmcls>=0.22.0

+# import mmcls.models to trigger register_module in mmcls

+custom_imports = dict(imports=['mmcls.models'], allow_failed_imports=False)

+checkpoint_file = 'https://download.openmmlab.com/mmclassification/v0/convnext/downstream/convnext-tiny_3rdparty_32xb128-noema_in1k_20220301-795e9634.pth' # noqa

+

+model = dict(

+ backbone=dict(

+ _delete_=True,

+ type='mmcls.ConvNeXt',

+ arch='tiny',

+ out_indices=[0, 1, 2, 3],

+ drop_path_rate=0.4,

+ layer_scale_init_value=1.0,

+ gap_before_final_norm=False,

+ init_cfg=dict(

+ type='Pretrained', checkpoint=checkpoint_file,

+ prefix='backbone.')),

+ neck=dict(in_channels=[96, 192, 384, 768]))

+

+img_norm_cfg = dict(

+ mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)

+

+# augmentation strategy originates from DETR / Sparse RCNN

+train_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(type='LoadAnnotations', with_bbox=True, with_mask=True),

+ dict(type='RandomFlip', flip_ratio=0.5),

+ dict(

+ type='AutoAugment',

+ policies=[[

+ dict(

+ type='Resize',

+ img_scale=[(480, 1333), (512, 1333), (544, 1333), (576, 1333),

+ (608, 1333), (640, 1333), (672, 1333), (704, 1333),

+ (736, 1333), (768, 1333), (800, 1333)],

+ multiscale_mode='value',

+ keep_ratio=True)

+ ],

+ [

+ dict(

+ type='Resize',

+ img_scale=[(400, 1333), (500, 1333), (600, 1333)],

+ multiscale_mode='value',

+ keep_ratio=True),

+ dict(

+ type='RandomCrop',

+ crop_type='absolute_range',

+ crop_size=(384, 600),

+ allow_negative_crop=True),

+ dict(

+ type='Resize',

+ img_scale=[(480, 1333), (512, 1333), (544, 1333),

+ (576, 1333), (608, 1333), (640, 1333),

+ (672, 1333), (704, 1333), (736, 1333),

+ (768, 1333), (800, 1333)],

+ multiscale_mode='value',

+ override=True,

+ keep_ratio=True)

+ ]]),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(type='Pad', size_divisor=32),

+ dict(type='DefaultFormatBundle'),

+ dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels', 'gt_masks']),

+]

+data = dict(train=dict(pipeline=train_pipeline), persistent_workers=True)

+

+optimizer = dict(

+ _delete_=True,

+ constructor='LearningRateDecayOptimizerConstructor',

+ type='AdamW',

+ lr=0.0001,

+ betas=(0.9, 0.999),

+ weight_decay=0.05,

+ paramwise_cfg={

+ 'decay_rate': 0.95,

+ 'decay_type': 'layer_wise',

+ 'num_layers': 6

+ })

+

+lr_config = dict(warmup_iters=1000, step=[27, 33])

+runner = dict(max_epochs=36)

+

+# you need to set mode='dynamic' if you are using pytorch<=1.5.0

+fp16 = dict(loss_scale=dict(init_scale=512))
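Both ConvNeXt optimizers use `LearningRateDecayOptimizerConstructor` with `decay_type='layer_wise'`, so parameters closer to the input train with a smaller learning rate than those near the heads. The Mask R-CNN config uses a mild `decay_rate=0.95`, while the Cascade configs use a much steeper `0.7`. The hypothetical helper below assumes the common scheme `scale = decay_rate ** (top_id - layer_id)`, with layer ids running from 0 (stem) to `num_layers + 1` (everything after the backbone). The exact way mmdet maps parameter names to layer ids, and any offsets it applies, is not shown in this diff, so treat the numbers as illustrative.

```python
def layer_wise_lr_scales(num_layers: int, decay_rate: float) -> list:
    """Assumed layer-wise decay: one LR multiplier per layer id.

    Id 0 is the stem, id num_layers + 1 covers neck and heads. The top id
    gets 1.0 and each step towards the input multiplies by decay_rate.
    """
    top = num_layers + 1
    return [decay_rate ** (top - layer_id) for layer_id in range(top + 1)]


# Base learning rates taken from the Mask R-CNN and Cascade ConvNeXt-T configs.
for rate, base_lr in [(0.95, 0.0001), (0.7, 0.0002)]:
    scales = layer_wise_lr_scales(num_layers=6, decay_rate=rate)
    print(f"decay_rate={rate}: stem lr={base_lr * scales[0]:.2e}, "
          f"head lr={base_lr * scales[-1]:.2e}")
```

Under this assumed scheme with `num_layers=6`, the stem trains at about 70% of the base LR with `0.95` (0.95**7), but only about 8% with `0.7` (0.7**7).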

diff --git a/configs/convnext/metafile.yml b/configs/convnext/metafile.yml

new file mode 100644

index 00000000000..20425bff1f9

--- /dev/null

+++ b/configs/convnext/metafile.yml

@@ -0,0 +1,93 @@

+Models:

+ - Name: mask_rcnn_convnext-t_p4_w7_fpn_fp16_ms-crop_3x_coco

+ In Collection: Mask R-CNN

+ Config: configs/convnext/mask_rcnn_convnext-t_p4_w7_fpn_fp16_ms-crop_3x_coco.py

+ Metadata:

+ Training Memory (GB): 7.3

+ Epochs: 36

+ Training Data: COCO

+ Training Techniques:

+ - AdamW

+ - Mixed Precision Training

+ Training Resources: 8x A100 GPUs

+ Architecture:

+ - ConvNeXt

+ Results:

+ - Task: Object Detection

+ Dataset: COCO

+ Metrics:

+ box AP: 46.2

+ - Task: Instance Segmentation

+ Dataset: COCO

+ Metrics:

+ mask AP: 41.7

+ Weights: https://download.openmmlab.com/mmdetection/v2.0/convnext/mask_rcnn_convnext-t_p4_w7_fpn_fp16_ms-crop_3x_coco/mask_rcnn_convnext-t_p4_w7_fpn_fp16_ms-crop_3x_coco_20220426_154953-050731f4.pth

+ Paper:

+ URL: https://arxiv.org/abs/2201.03545

+ Title: 'A ConvNet for the 2020s'

+ README: configs/convnext/README.md

+ Code:

+ URL: https://github.com/open-mmlab/mmdetection/blob/v2.16.0/mmdet/models/backbones/swin.py#L465

+ Version: v2.25.0

+

+ - Name: cascade_mask_rcnn_convnext-t_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco

+ In Collection: Cascade Mask R-CNN

+ Config: configs/convnext/cascade_mask_rcnn_convnext-t_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco.py

+ Metadata:

+ Training Memory (GB): 9.0

+ Epochs: 36

+ Training Data: COCO

+ Training Techniques:

+ - AdamW

+ - Mixed Precision Training

+ Training Resources: 8x A100 GPUs

+ Architecture:

+ - ConvNeXt

+ Results:

+ - Task: Object Detection

+ Dataset: COCO

+ Metrics:

+ box AP: 50.3

+ - Task: Instance Segmentation

+ Dataset: COCO

+ Metrics:

+ mask AP: 43.6

+ Weights: https://download.openmmlab.com/mmdetection/v2.0/convnext/cascade_mask_rcnn_convnext-t_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco/cascade_mask_rcnn_convnext-t_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco_20220509_204200-8f07c40b.pth

+ Paper:

+ URL: https://arxiv.org/abs/2201.03545

+ Title: 'A ConvNet for the 2020s'

+ README: configs/convnext/README.md

+ Code:

+ URL: https://github.com/open-mmlab/mmdetection/blob/v2.16.0/mmdet/models/backbones/swin.py#L465

+ Version: v2.25.0

+

+ - Name: cascade_mask_rcnn_convnext-s_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco

+ In Collection: Cascade Mask R-CNN

+ Config: configs/convnext/cascade_mask_rcnn_convnext-s_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco.py

+ Metadata:

+ Training Memory (GB): 12.3

+ Epochs: 36

+ Training Data: COCO

+ Training Techniques:

+ - AdamW

+ - Mixed Precision Training

+ Training Resources: 8x A100 GPUs

+ Architecture:

+ - ConvNeXt

+ Results:

+ - Task: Object Detection

+ Dataset: COCO

+ Metrics:

+ box AP: 51.8

+ - Task: Instance Segmentation

+ Dataset: COCO

+ Metrics:

+ mask AP: 44.8

+ Weights: https://download.openmmlab.com/mmdetection/v2.0/convnext/cascade_mask_rcnn_convnext-s_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco/cascade_mask_rcnn_convnext-s_p4_w7_fpn_giou_4conv1f_fp16_ms-crop_3x_coco_20220510_201004-3d24f5a4.pth

+ Paper:

+ URL: https://arxiv.org/abs/2201.03545

+ Title: 'A ConvNet for the 2020s'

+ README: configs/convnext/README.md

+ Code:

+ URL: https://github.com/open-mmlab/mmdetection/blob/v2.16.0/mmdet/models/backbones/swin.py#L465

+ Version: v2.25.0
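Each metafile entry ties a `Name` to a `Config` path and a `Weights` URL. In this PR, the pattern is `configs/convnext/<Name>.py` for the config and `.../<Name>/<Name>_<timestamp>-<hash>.pth` for the weights, which matches the download links in the README table. The following is a minimal consistency check over already-parsed entries. Reading the real file would need a YAML parser such as PyYAML's `yaml.safe_load`, so this sketch takes plain dicts and runs with the standard library alone. `check_metafile_entries` is a hypothetical helper.

```python
def check_metafile_entries(models: list) -> list:
    """Return human-readable problems found in metafile model entries."""
    problems = []
    for entry in models:
        name = entry["Name"]
        config = entry.get("Config", "")
        if not config.endswith(".py"):
            problems.append(f"{name}: Config does not end with '.py'")
        elif not config.endswith(f"/{name}.py"):
            problems.append(f"{name}: Config file name differs from Name")
        weights = entry.get("Weights", "")
        if f"/{name}/{name}_" not in weights:
            problems.append(f"{name}: Weights URL does not follow <Name>/<Name>_<timestamp>-<hash>.pth")
    return problems


BASE = "https://download.openmmlab.com/mmdetection/v2.0/convnext"
NAME = "mask_rcnn_convnext-t_p4_w7_fpn_fp16_ms-crop_3x_coco"
entries = [
    {
        "Name": NAME,
        # Config path as it appeared in the submitted metafile (no extension).
        "Config": f"configs/convnext/{NAME}",
        "Weights": f"{BASE}/{NAME}/{NAME}_20220426_154953-050731f4.pth",
    },
]
for problem in check_metafile_entries(entries):
    print(problem)
```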

diff --git a/configs/cornernet/README.md b/configs/cornernet/README.md

index 55877c4c4bf..d0b9e98645f 100644

--- a/configs/cornernet/README.md

+++ b/configs/cornernet/README.md

@@ -14,11 +14,11 @@ We propose CornerNet, a new approach to object detection where we detect an obje

## Results and Models

-| Backbone | Batch Size | Step/Total Epochs | Mem (GB) | Inf time (fps) | box AP | Config | Download |

-| :-------------: | :--------: |:----------------: | :------: | :------------: | :----: | :------: | :--------: |

-| HourglassNet-104 | [10 x 5](./cornernet_hourglass104_mstest_10x5_210e_coco.py) | 180/210 | 13.9 | 4.2 | 41.2 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/cornernet/cornernet_hourglass104_mstest_10x5_210e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/cornernet/cornernet_hourglass104_mstest_10x5_210e_coco/cornernet_hourglass104_mstest_10x5_210e_coco_20200824_185720-5fefbf1c.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/cornernet/cornernet_hourglass104_mstest_10x5_210e_coco/cornernet_hourglass104_mstest_10x5_210e_coco_20200824_185720.log.json) |

-| HourglassNet-104 | [8 x 6](./cornernet_hourglass104_mstest_8x6_210e_coco.py) | 180/210 | 15.9 | 4.2 | 41.2 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/cornernet/cornernet_hourglass104_mstest_8x6_210e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/cornernet/cornernet_hourglass104_mstest_8x6_210e_coco/cornernet_hourglass104_mstest_8x6_210e_coco_20200825_150618-79b44c30.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/cornernet/cornernet_hourglass104_mstest_8x6_210e_coco/cornernet_hourglass104_mstest_8x6_210e_coco_20200825_150618.log.json) |

-| HourglassNet-104 | [32 x 3](./cornernet_hourglass104_mstest_32x3_210e_coco.py) | 180/210 | 9.5 | 3.9 | 40.4 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/cornernet/cornernet_hourglass104_mstest_32x3_210e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/cornernet/cornernet_hourglass104_mstest_32x3_210e_coco/cornernet_hourglass104_mstest_32x3_210e_coco_20200819_203110-1efaea91.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/cornernet/cornernet_hourglass104_mstest_32x3_210e_coco/cornernet_hourglass104_mstest_32x3_210e_coco_20200819_203110.log.json) |

+| Backbone | Batch Size | Step/Total Epochs | Mem (GB) | Inf time (fps) | box AP | Config | Download |

+| :--------------: | :---------------------------------------------------------: | :---------------: | :------: | :------------: | :----: | :-------------------------------------------------------------------------------------------------------------------------------: | :------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------: |

+| HourglassNet-104 | [10 x 5](./cornernet_hourglass104_mstest_10x5_210e_coco.py) | 180/210 | 13.9 | 4.2 | 41.2 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/cornernet/cornernet_hourglass104_mstest_10x5_210e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/cornernet/cornernet_hourglass104_mstest_10x5_210e_coco/cornernet_hourglass104_mstest_10x5_210e_coco_20200824_185720-5fefbf1c.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/cornernet/cornernet_hourglass104_mstest_10x5_210e_coco/cornernet_hourglass104_mstest_10x5_210e_coco_20200824_185720.log.json) |

+| HourglassNet-104 | [8 x 6](./cornernet_hourglass104_mstest_8x6_210e_coco.py) | 180/210 | 15.9 | 4.2 | 41.2 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/cornernet/cornernet_hourglass104_mstest_8x6_210e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/cornernet/cornernet_hourglass104_mstest_8x6_210e_coco/cornernet_hourglass104_mstest_8x6_210e_coco_20200825_150618-79b44c30.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/cornernet/cornernet_hourglass104_mstest_8x6_210e_coco/cornernet_hourglass104_mstest_8x6_210e_coco_20200825_150618.log.json) |

+| HourglassNet-104 | [32 x 3](./cornernet_hourglass104_mstest_32x3_210e_coco.py) | 180/210 | 9.5 | 3.9 | 40.4 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/cornernet/cornernet_hourglass104_mstest_32x3_210e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/cornernet/cornernet_hourglass104_mstest_32x3_210e_coco/cornernet_hourglass104_mstest_32x3_210e_coco_20200819_203110-1efaea91.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/cornernet/cornernet_hourglass104_mstest_32x3_210e_coco/cornernet_hourglass104_mstest_32x3_210e_coco_20200819_203110.log.json) |
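The Batch Size column reads as GPUs x images per GPU, matching the `10x5`, `8x6` and `32x3` tokens in the config names, so the three runs use total batches of 50, 48 and 96. When a setup is reproduced with a different total batch, the linear scaling rule (the idea behind the automatic LR scaling mentioned in the 2.24.1 changelog) adjusts the learning rate in proportion. The helpers are hypothetical, and the learning-rate numbers in the usage line are illustrative rather than the CornerNet configs' values.

```python
def total_batch_size(batch_spec: str) -> int:
    """Parse a 'GPUs x images-per-GPU' string such as '10 x 5'."""
    gpus, per_gpu = (int(part) for part in batch_spec.lower().split("x"))
    return gpus * per_gpu


def linearly_scaled_lr(base_lr: float, base_batch: int, new_batch: int) -> float:
    """Linear scaling rule: scale the learning rate with the total batch size."""
    return base_lr * new_batch / base_batch


for spec in ["10 x 5", "8 x 6", "32 x 3"]:
    print(spec, "->", total_batch_size(spec))

# Illustrative numbers only: halving the total batch halves the learning rate.
print(linearly_scaled_lr(base_lr=0.001, base_batch=96, new_batch=48))
```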

Note:

diff --git a/configs/dcn/README.md b/configs/dcn/README.md

index 7866078af2e..745b01cde2d 100644

--- a/configs/dcn/README.md

+++ b/configs/dcn/README.md

@@ -14,26 +14,26 @@ Convolutional neural networks (CNNs) are inherently limited to model geometric t

## Results and Models

-| Backbone | Model | Style | Conv | Pool | Lr schd | Mem (GB) | Inf time (fps) | box AP | mask AP | Config | Download |

-|:----------------:|:------------:|:-------:|:-------------:|:------:|:-------:|:--------:|:--------------:|:------:|:-------:|:------:|:--------:|

-| R-50-FPN | Faster | pytorch | dconv(c3-c5) | - | 1x | 4.0 | 17.8 | 41.3 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/faster_rcnn_r50_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_dconv_c3-c5_1x_coco/faster_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200130-d68aed1e.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_dconv_c3-c5_1x_coco/faster_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200130_212941.log.json) |

-| R-50-FPN | Faster | pytorch | - | dpool | 1x | 5.0 | 17.2 | 38.9 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/faster_rcnn_r50_fpn_dpool_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_dpool_1x_coco/faster_rcnn_r50_fpn_dpool_1x_coco_20200307-90d3c01d.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_dpool_1x_coco/faster_rcnn_r50_fpn_dpool_1x_coco_20200307_203250.log.json) |

-| R-101-FPN | Faster | pytorch | dconv(c3-c5) | - | 1x | 6.0 | 12.5 | 42.7 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/faster_rcnn_r101_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r101_fpn_dconv_c3-c5_1x_coco/faster_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200203-1377f13d.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r101_fpn_dconv_c3-c5_1x_coco/faster_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200203_230019.log.json) |

-| X-101-32x4d-FPN | Faster | pytorch | dconv(c3-c5) | - | 1x | 7.3 | 10.0 | 44.5 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/faster_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco/faster_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco_20200203-4f85c69c.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco/faster_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco_20200203_001325.log.json) |

-| R-50-FPN | Mask | pytorch | dconv(c3-c5) | - | 1x | 4.5 | 15.4 | 41.8 | 37.4 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200203-4d9ad43b.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200203_061339.log.json) |

-| R-101-FPN | Mask | pytorch | dconv(c3-c5) | - | 1x | 6.5 | 11.7 | 43.5 | 38.9 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco/mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200216-a71f5bce.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco/mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200216_191601.log.json) |

-| R-50-FPN | Cascade | pytorch | dconv(c3-c5) | - | 1x | 4.5 | 14.6 | 43.8 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/cascade_rcnn_r50_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_rcnn_r50_fpn_dconv_c3-c5_1x_coco/cascade_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200130-2f1fca44.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_rcnn_r50_fpn_dconv_c3-c5_1x_coco/cascade_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200130_220843.log.json) |

-| R-101-FPN | Cascade | pytorch | dconv(c3-c5) | - | 1x | 6.4 | 11.0 | 45.0 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/cascade_rcnn_r101_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_rcnn_r101_fpn_dconv_c3-c5_1x_coco/cascade_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200203-3b2f0594.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_rcnn_r101_fpn_dconv_c3-c5_1x_coco/cascade_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200203_224829.log.json) |

-| R-50-FPN | Cascade Mask | pytorch | dconv(c3-c5) | - | 1x | 6.0 | 10.0 | 44.4 | 38.6 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/cascade_mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco/cascade_mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200202-42e767a2.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco/cascade_mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200202_010309.log.json) |

-| R-101-FPN | Cascade Mask | pytorch | dconv(c3-c5) | - | 1x | 8.0 | 8.6 | 45.8 | 39.7 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/cascade_mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco/cascade_mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200204-df0c5f10.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco/cascade_mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200204_134006.log.json) |

-| X-101-32x4d-FPN | Cascade Mask | pytorch | dconv(c3-c5) | - | 1x | 9.2 | | 47.3 | 41.1 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/cascade_mask_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_mask_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco/cascade_mask_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco-e75f90c8.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_mask_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco/cascade_mask_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco-20200606_183737.log.json) |

-| R-50-FPN (FP16) | Mask | pytorch | dconv(c3-c5) | - | 1x | 3.0 | | 41.9 | 37.5 |[config](https://github.com/open-mmlab/mmdetection/tree/master/configs/fp16/mask_rcnn_r50_fpn_fp16_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/fp16/mask_rcnn_r50_fpn_fp16_dconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_fp16_dconv_c3-c5_1x_coco_20210520_180247-c06429d2.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/fp16/mask_rcnn_r50_fpn_fp16_dconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_fp16_dconv_c3-c5_1x_coco_20210520_180247.log.json) |

+| Backbone | Model | Style | Conv | Pool | Lr schd | Mem (GB) | Inf time (fps) | box AP | mask AP | Config | Download |

+| :-------------: | :----------: | :-----: | :----------: | :---: | :-----: | :------: | :------------: | :----: | :-----: | :---------------------------------------------------------------------------------------------------------------------------------: | :----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------: |

+| R-50-FPN | Faster | pytorch | dconv(c3-c5) | - | 1x | 4.0 | 17.8 | 41.3 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/faster_rcnn_r50_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_dconv_c3-c5_1x_coco/faster_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200130-d68aed1e.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_dconv_c3-c5_1x_coco/faster_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200130_212941.log.json) |

+| R-50-FPN | Faster | pytorch | - | dpool | 1x | 5.0 | 17.2 | 38.9 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/faster_rcnn_r50_fpn_dpool_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_dpool_1x_coco/faster_rcnn_r50_fpn_dpool_1x_coco_20200307-90d3c01d.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_dpool_1x_coco/faster_rcnn_r50_fpn_dpool_1x_coco_20200307_203250.log.json) |

+| R-101-FPN | Faster | pytorch | dconv(c3-c5) | - | 1x | 6.0 | 12.5 | 42.7 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/faster_rcnn_r101_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r101_fpn_dconv_c3-c5_1x_coco/faster_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200203-1377f13d.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r101_fpn_dconv_c3-c5_1x_coco/faster_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200203_230019.log.json) |

+| X-101-32x4d-FPN | Faster | pytorch | dconv(c3-c5) | - | 1x | 7.3 | 10.0 | 44.5 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/faster_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco/faster_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco_20200203-4f85c69c.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco/faster_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco_20200203_001325.log.json) |

+| R-50-FPN | Mask | pytorch | dconv(c3-c5) | - | 1x | 4.5 | 15.4 | 41.8 | 37.4 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200203-4d9ad43b.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200203_061339.log.json) |

+| R-101-FPN | Mask | pytorch | dconv(c3-c5) | - | 1x | 6.5 | 11.7 | 43.5 | 38.9 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco/mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200216-a71f5bce.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco/mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200216_191601.log.json) |

+| R-50-FPN | Cascade | pytorch | dconv(c3-c5) | - | 1x | 4.5 | 14.6 | 43.8 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/cascade_rcnn_r50_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_rcnn_r50_fpn_dconv_c3-c5_1x_coco/cascade_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200130-2f1fca44.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_rcnn_r50_fpn_dconv_c3-c5_1x_coco/cascade_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200130_220843.log.json) |

+| R-101-FPN | Cascade | pytorch | dconv(c3-c5) | - | 1x | 6.4 | 11.0 | 45.0 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/cascade_rcnn_r101_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_rcnn_r101_fpn_dconv_c3-c5_1x_coco/cascade_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200203-3b2f0594.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_rcnn_r101_fpn_dconv_c3-c5_1x_coco/cascade_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200203_224829.log.json) |

+| R-50-FPN | Cascade Mask | pytorch | dconv(c3-c5) | - | 1x | 6.0 | 10.0 | 44.4 | 38.6 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/cascade_mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco/cascade_mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200202-42e767a2.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco/cascade_mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200202_010309.log.json) |

+| R-101-FPN | Cascade Mask | pytorch | dconv(c3-c5) | - | 1x | 8.0 | 8.6 | 45.8 | 39.7 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/cascade_mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco/cascade_mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200204-df0c5f10.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco/cascade_mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200204_134006.log.json) |

+| X-101-32x4d-FPN | Cascade Mask | pytorch | dconv(c3-c5) | - | 1x | 9.2 | | 47.3 | 41.1 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/cascade_mask_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_mask_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco/cascade_mask_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco-e75f90c8.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_mask_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco/cascade_mask_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco-20200606_183737.log.json) |

+| R-50-FPN (FP16) | Mask | pytorch | dconv(c3-c5) | - | 1x | 3.0 | | 41.9 | 37.5 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/fp16/mask_rcnn_r50_fpn_fp16_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/fp16/mask_rcnn_r50_fpn_fp16_dconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_fp16_dconv_c3-c5_1x_coco_20210520_180247-c06429d2.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/fp16/mask_rcnn_r50_fpn_fp16_dconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_fp16_dconv_c3-c5_1x_coco_20210520_180247.log.json) |

**Notes:**

- `dconv` denotes deformable convolution; `c3-c5` means dconv is added to ResNet stages 3 to 5. `dpool` denotes deformable RoI pooling.

- The dcn ops are modified from https://github.com/chengdazhi/Deformable-Convolution-V2-PyTorch, which should be more memory efficient and slightly faster.

-- (*) For R-50-FPN (dg=4), dg is short for deformable_group. This model is trained and tested on Amazon EC2 p3dn.24xlarge instance.

+- (\*) For R-50-FPN (dg=4), dg is short for deformable_group. This model was trained and tested on an Amazon EC2 p3dn.24xlarge instance.

- **The memory and train/inference time figures are outdated.**
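To make the `dconv(c3-c5)` naming concrete, here is a minimal sketch of a backbone override that, to our understanding, mirrors `configs/dcn/faster_rcnn_r50_fpn_dconv_c3-c5_1x_coco.py`. It uses a `dcn` dict plus a per-stage `stage_with_dcn` tuple on the ResNet backbone. Treat these keys as an assumption and check them against the linked config. The helper `dcn_stage_names` is hypothetical and only maps the per-stage flags to feature-level names.

```python
# Sketch of an MMDetection-style config override. The keys are assumed to
# match configs/dcn/faster_rcnn_r50_fpn_dconv_c3-c5_1x_coco.py; verify them.
_base_ = '../faster_rcnn/faster_rcnn_r50_fpn_1x_coco.py'

model = dict(
    backbone=dict(
        # Deformable convolution settings used in the enabled stages.
        dcn=dict(type='DCN', deform_groups=1, fallback_on_stride=False),
        # One flag per ResNet stage (res2, res3, res4, res5); True enables DCN.
        stage_with_dcn=(False, True, True, True)))


def dcn_stage_names(stage_flags):
    """Hypothetical helper: list the feature levels (c2..c5) that use DCN."""
    names = ('c2', 'c3', 'c4', 'c5')
    return [name for name, enabled in zip(names, stage_flags) if enabled]


print(dcn_stage_names(model['backbone']['stage_with_dcn']))  # ['c3', 'c4', 'c5']
```

The first stage keeps plain convolutions. That is what the `c3-c5` suffix in the config and checkpoint names encodes.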

## Citation

diff --git a/configs/dcnv2/README.md b/configs/dcnv2/README.md

index 1e7e3201b56..d230f202c77 100644

--- a/configs/dcnv2/README.md

+++ b/configs/dcnv2/README.md

@@ -10,19 +10,19 @@ The superior performance of Deformable Convolutional Networks arises from its ab

## Results and Models

-| Backbone | Model | Style | Conv | Pool | Lr schd | Mem (GB) | Inf time (fps) | box AP | mask AP | Config | Download |

-|:----------------:|:------------:|:-------:|:-------------:|:------:|:-------:|:--------:|:--------------:|:------:|:-------:|:------:|:--------:|

-| R-50-FPN | Faster | pytorch | mdconv(c3-c5) | - | 1x | 4.1 | 17.6 | 41.4 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcnv2/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco_20200130-d099253b.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco_20200130_222144.log.json) |

-| *R-50-FPN (dg=4) | Faster | pytorch | mdconv(c3-c5) | - | 1x | 4.2 | 17.4 | 41.5 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcnv2/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco_20200130-01262257.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco_20200130_222058.log.json) |

-| R-50-FPN | Faster | pytorch | - | mdpool | 1x | 5.8 | 16.6 | 38.7 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcnv2/faster_rcnn_r50_fpn_mdpool_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdpool_1x_coco/faster_rcnn_r50_fpn_mdpool_1x_coco_20200307-c0df27ff.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdpool_1x_coco/faster_rcnn_r50_fpn_mdpool_1x_coco_20200307_203304.log.json) |

-| R-50-FPN | Mask | pytorch | mdconv(c3-c5) | - | 1x | 4.5 | 15.1 | 41.5 | 37.1 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcnv2/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco_20200203-ad97591f.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco_20200203_063443.log.json) |

-| R-50-FPN (FP16) | Mask | pytorch | mdconv(c3-c5)| - | 1x | 3.1 | | 42.0 | 37.6 |[config](https://github.com/open-mmlab/mmdetection/tree/master/configs/fp16/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/fp16/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco_20210520_180434-cf8fefa5.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/fp16/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco_20210520_180434.log.json) |

+| Backbone | Model | Style | Conv | Pool | Lr schd | Mem (GB) | Inf time (fps) | box AP | mask AP | Config | Download |

+| :---------------: | :----: | :-----: | :-----------: | :----: | :-----: | :------: | :------------: | :----: | :-----: | :------------------------------------------------------------------------------------------------------------------------------: | :-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------: |

+| R-50-FPN | Faster | pytorch | mdconv(c3-c5) | - | 1x | 4.1 | 17.6 | 41.4 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcnv2/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco_20200130-d099253b.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco_20200130_222144.log.json) |

+| \*R-50-FPN (dg=4) | Faster | pytorch | mdconv(c3-c5) | - | 1x | 4.2 | 17.4 | 41.5 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcnv2/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco_20200130-01262257.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco_20200130_222058.log.json) |

+| R-50-FPN | Faster | pytorch | - | mdpool | 1x | 5.8 | 16.6 | 38.7 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcnv2/faster_rcnn_r50_fpn_mdpool_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdpool_1x_coco/faster_rcnn_r50_fpn_mdpool_1x_coco_20200307-c0df27ff.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdpool_1x_coco/faster_rcnn_r50_fpn_mdpool_1x_coco_20200307_203304.log.json) |

+| R-50-FPN | Mask | pytorch | mdconv(c3-c5) | - | 1x | 4.5 | 15.1 | 41.5 | 37.1 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcnv2/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco_20200203-ad97591f.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco_20200203_063443.log.json) |

+| R-50-FPN (FP16) | Mask | pytorch | mdconv(c3-c5) | - | 1x | 3.1 | | 42.0 | 37.6 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/fp16/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/fp16/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco_20210520_180434-cf8fefa5.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/fp16/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco_20210520_180434.log.json) |

**Notes:**

- `mdconv` denotes modulated deformable convolution; `c3-c5` means dconv is added to ResNet stages 3 to 5. `mdpool` denotes modulated deformable RoI pooling.

- The dcn ops are modified from https://github.com/chengdazhi/Deformable-Convolution-V2-PyTorch, which should be more memory efficient and slightly faster.

-- (*) For R-50-FPN (dg=4), dg is short for deformable_group. This model is trained and tested on Amazon EC2 p3dn.24xlarge instance.

+- (\*) For R-50-FPN (dg=4), dg is short for deformable_group. This model was trained and tested on an Amazon EC2 p3dn.24xlarge instance.

- **The memory and train/inference time figures are outdated.**
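As a hedged illustration of how the `mdconv` rows differ from the `dg=4` row, the sketch below builds two alternative backbone overrides. It assumes the modulated variant is selected with `type='DCNv2'` and the group count with `deform_groups`, modeled on `configs/dcnv2/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco.py`. Check the linked configs before relying on it. `mdconv_backbone` is a hypothetical helper, and a real config file would define only one `model`.

```python
def mdconv_backbone(deform_groups=1):
    """Hypothetical helper: backbone override with modulated DCN in c3-c5.

    Keys are assumptions modeled on the dcnv2 configs linked in the table.
    """
    return dict(
        dcn=dict(
            type='DCNv2',  # modulated deformable convolution (mdconv)
            deform_groups=deform_groups,
            fallback_on_stride=False),
        # res2 stays a plain conv; res3-res5 (c3-c5) use mdconv.
        stage_with_dcn=(False, True, True, True))


# R-50-FPN, mdconv(c3-c5): a single offset/mask group.
model = dict(backbone=mdconv_backbone())

# *R-50-FPN (dg=4): same layout, but the input channels are split into 4
# groups, and each group learns its own offsets and modulation masks.
model_dg4 = dict(backbone=mdconv_backbone(deform_groups=4))
```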

## Citation

diff --git a/configs/ddod/README.md b/configs/ddod/README.md

new file mode 100644

index 00000000000..9ab1f4869a3

--- /dev/null

+++ b/configs/ddod/README.md

@@ -0,0 +1,31 @@

+# DDOD

+

+> [Disentangle Your Dense Object Detector](https://arxiv.org/pdf/2107.02963.pdf)

+

+

+

+## Abstract

+

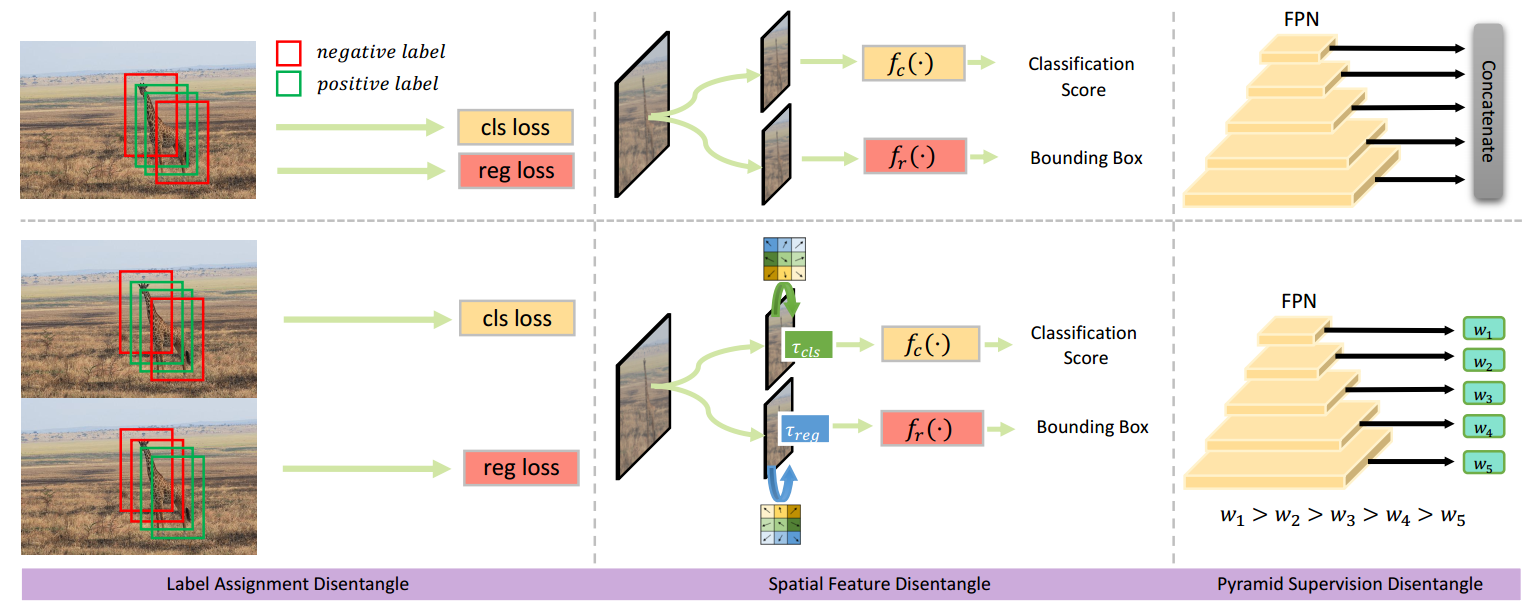

+Deep learning-based dense object detectors have achieved great success in the past few years and have been applied to numerous multimedia applications such as video understanding. However, the current training pipeline for dense detectors is compromised to lots of conjunctions that may not hold. In this paper, we investigate three such important conjunctions: 1) only samples assigned as positive in classification head are used to train the regression head; 2) classification and regression share the same input feature and computational fields defined by the parallel head architecture; and 3) samples distributed in different feature pyramid layers are treated equally when computing the loss. We first carry out a series of pilot experiments to show disentangling such conjunctions can lead to persistent performance improvement. Then, based on these findings, we propose Disentangled Dense Object Detector (DDOD), in which simple and effective disentanglement mechanisms are designed and integrated into the current state-of-the-art dense object detectors. Extensive experiments on MS COCO benchmark show that our approach can lead to 2.0 mAP, 2.4 mAP and 2.2 mAP absolute improvements on RetinaNet, FCOS, and ATSS baselines with negligible extra overhead. Notably, our best model reaches 55.0 mAP on the COCO test-dev set and 93.5 AP on the hard subset of WIDER FACE, achieving new state-of-the-art performance on these two competitive benchmarks. Code is available at https://github.com/zehuichen123/DDOD.
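The first conjunction concerns shared label assignment. The `ddod_r50_fpn_1x_coco.py` config in this PR addresses it with two `ATSSAssigner` instances: `assigner` with `alpha=0.8` and `reg_assigner` with `alpha=0.5`. The toy sketch below shows why two alphas can yield two different positive sets. It assumes `alpha` acts as an exponent blending the predicted class score and the IoU, as `score ** (1 - alpha) * iou ** alpha`. That is our reading of the assigner's dynamic-cost mode, so verify it against the source. It also omits ATSS's center-distance candidate selection and mean+std IoU threshold. `dynamic_cost`, `top_candidates` and the candidate data are hypothetical.

```python
def dynamic_cost(cls_score, iou, alpha):
    """Hypothetical matching quality: geometric blend of score and IoU.

    alpha=1.0 ranks candidates by IoU alone; a smaller alpha gives the
    predicted classification score more weight.
    """
    return cls_score ** (1.0 - alpha) * iou ** alpha


def top_candidates(candidates, alpha, k):
    """Return the ids of the k candidates with the highest blended quality."""
    ranked = sorted(
        candidates,
        key=lambda c: dynamic_cost(c['score'], c['iou'], alpha),
        reverse=True)
    return [c['id'] for c in ranked[:k]]


# Toy anchors for one ground-truth box (made-up numbers).
candidates = [
    dict(id='a', score=0.9, iou=0.5),
    dict(id='b', score=0.2, iou=0.7),
    dict(id='c', score=0.6, iou=0.6),
]

print(top_candidates(candidates, alpha=0.8, k=1))  # ['c'], IoU-heavy blend
print(top_candidates(candidates, alpha=0.5, k=1))  # ['a'], score matters more
```

Because the two rankings disagree, the classification branch and the regression branch end up training on different positives. This is the decoupling that the first conjunction argues for.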

+

+## Results and Models

+

+| Model | Backbone | Style | Lr schd | Mem (GB) | box AP | Config | Download |

+| :-------: | :------: | :-----: | :-----: | :------: | :----: | :--------------------------------------------------------------------------------------------------: | :--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------: |

+| DDOD-ATSS | R-50 | pytorch | 1x | 3.4 | 41.7 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/ddod/ddod_r50_fpn_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/ddod/ddod_r50_fpn_1x_coco/ddod_r50_fpn_1x_coco_20220523_223737-29b2fc67.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/ddod/ddod_r50_fpn_1x_coco/ddod_r50_fpn_1x_coco_20220523_223737.log.json) |

+

+## Citation

+

+```latex

+@inproceedings{chen2021disentangle,

+title={Disentangle Your Dense Object Detector},

+author={Chen, Zehui and Yang, Chenhongyi and Li, Qiaofei and Zhao, Feng and Zha, Zheng-Jun and Wu, Feng},

+booktitle={Proceedings of the 29th ACM International Conference on Multimedia},

+pages={4939--4948},

+year={2021}

+}

+```

diff --git a/configs/ddod/ddod_r50_fpn_1x_coco.py b/configs/ddod/ddod_r50_fpn_1x_coco.py

new file mode 100644

index 00000000000..02dd2fe8911

--- /dev/null

+++ b/configs/ddod/ddod_r50_fpn_1x_coco.py

@@ -0,0 +1,67 @@

+_base_ = [

+ '../_base_/datasets/coco_detection.py',

+ '../_base_/schedules/schedule_1x.py', '../_base_/default_runtime.py'

+]

+

+model = dict(

+ type='DDOD',

+ backbone=dict(

+ type='ResNet',

+ depth=50,

+ num_stages=4,

+ out_indices=(0, 1, 2, 3),

+ frozen_stages=1,

+ norm_cfg=dict(type='BN', requires_grad=True),

+ norm_eval=True,

+ style='pytorch',

+ init_cfg=dict(type='Pretrained', checkpoint='torchvision://resnet50')),

+ neck=dict(

+ type='FPN',

+ in_channels=[256, 512, 1024, 2048],

+ out_channels=256,

+ start_level=1,

+ add_extra_convs='on_output',

+ num_outs=5),

+ bbox_head=dict(

+ type='DDODHead',

+ num_classes=80,

+ in_channels=256,

+ stacked_convs=4,

+ feat_channels=256,

+ anchor_generator=dict(

+ type='AnchorGenerator',

+ ratios=[1.0],

+ octave_base_scale=8,

+ scales_per_octave=1,

+ strides=[8, 16, 32, 64, 128]),

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[.0, .0, .0, .0],

+ target_stds=[0.1, 0.1, 0.2, 0.2]),

+ loss_cls=dict(

+ type='FocalLoss',

+ use_sigmoid=True,

+ gamma=2.0,

+ alpha=0.25,

+ loss_weight=1.0),

+ loss_bbox=dict(type='GIoULoss', loss_weight=2.0),

+ loss_iou=dict(

+ type='CrossEntropyLoss', use_sigmoid=True, loss_weight=1.0)),

+ train_cfg=dict(

+ # here `assigner` means the cls_assigner

+ assigner=dict(type='ATSSAssigner', topk=9, alpha=0.8),

+ reg_assigner=dict(type='ATSSAssigner', topk=9, alpha=0.5),

+ allowed_border=-1,

+ pos_weight=-1,

+ debug=False),

+ test_cfg=dict(

+ nms_pre=1000,

+ min_bbox_size=0,

+ score_thr=0.05,

+ nms=dict(type='nms', iou_threshold=0.6),

+ max_per_img=100))

+

+# This `persistent_workers` is only valid when PyTorch>=1.7.0

+data = dict(persistent_workers=True)

+# optimizer

+optimizer = dict(type='SGD', lr=0.01, momentum=0.9, weight_decay=0.0001)
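Here is a worked example of the anchors produced by the `anchor_generator` in the config above. It assumes MMDetection's usual convention: each level's base size equals its stride, the scales are `octave_base_scale * 2 ** (i / scales_per_octave)`, and a ratio `r = h / w` keeps the anchor area fixed. With `ratios=[1.0]` and `scales_per_octave=1`, each location gets one square anchor whose side is 8 × stride. `anchor_sides` is a hypothetical helper and not part of the library.

```python
def anchor_sides(strides, octave_base_scale, scales_per_octave, ratios):
    """Hypothetical helper: (width, height) of every anchor shape per level."""
    octave_scales = [2 ** (i / scales_per_octave) for i in range(scales_per_octave)]
    sides = {}
    for stride in strides:
        shapes = []
        for ratio in ratios:
            # ratio = h / w; split it so that the anchor area stays fixed.
            h_ratio = ratio ** 0.5
            w_ratio = 1.0 / h_ratio
            for octave_scale in octave_scales:
                scale = octave_base_scale * octave_scale
                shapes.append((stride * scale * w_ratio, stride * scale * h_ratio))
        sides[stride] = shapes
    return sides


# Values taken from the DDOD anchor_generator above.
sides = anchor_sides([8, 16, 32, 64, 128], octave_base_scale=8,
                     scales_per_octave=1, ratios=[1.0])
print(sides[8])    # [(64.0, 64.0)]
print(sides[128])  # [(1024.0, 1024.0)]
```

So the five FPN levels carry single square anchors of 64, 128, 256, 512 and 1024 pixels. This one-anchor-per-location layout is the one ATSS-style heads typically use.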

diff --git a/configs/ddod/metafile.yml b/configs/ddod/metafile.yml

new file mode 100644

index 00000000000..c22395002bd

--- /dev/null

+++ b/configs/ddod/metafile.yml

@@ -0,0 +1,33 @@

+Collections:

+ - Name: DDOD

+ Metadata:

+ Training Data: COCO

+ Training Techniques:

+ - SGD with Momentum

+ - Weight Decay

+ Training Resources: 8x V100 GPUs

+ Architecture:

+ - DDOD

+ - FPN

+ - ResNet

+ Paper:

+ URL: https://arxiv.org/pdf/2107.02963.pdf

+ Title: 'Disentangle Your Dense Object Detector'

+ README: configs/ddod/README.md

+ Code:

+ URL: https://github.com/open-mmlab/mmdetection/blob/v2.25.0/mmdet/models/detectors/ddod.py#L6

+ Version: v2.25.0

+

+Models:

+ - Name: ddod_r50_fpn_1x_coco

+ In Collection: DDOD

+ Config: configs/ddod/ddod_r50_fpn_1x_coco.py

+ Metadata:

+ Training Memory (GB): 3.4

+ Epochs: 12

+ Results:

+ - Task: Object Detection

+ Dataset: COCO

+ Metrics:

+ box AP: 41.7

+ Weights: https://download.openmmlab.com/mmdetection/v2.0/ddod/ddod_r50_fpn_1x_coco/ddod_r50_fpn_1x_coco_20220523_223737-29b2fc67.pth

diff --git a/configs/deepfashion/README.md b/configs/deepfashion/README.md

index dd4f012bfa3..45daec0badf 100644

--- a/configs/deepfashion/README.md

+++ b/configs/deepfashion/README.md

@@ -53,9 +53,9 @@ or creating your own config file.

## Results and Models

-| Backbone | Model type | Dataset | bbox detection Average Precision | segmentation Average Precision | Config | Download (Google) |

-| :---------: | :----------: | :-----------------: | :--------------------------------: | :----------------------------: | :---------:| :-------------------------: |

-| ResNet50 | Mask RCNN | DeepFashion-In-shop | 0.599 | 0.584 |[config](https://github.com/open-mmlab/mmdetection/blob/master/configs/deepfashion/mask_rcnn_r50_fpn_15e_deepfashion.py)| [model](https://download.openmmlab.com/mmdetection/v2.0/deepfashion/mask_rcnn_r50_fpn_15e_deepfashion/mask_rcnn_r50_fpn_15e_deepfashion_20200329_192752.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/deepfashion/mask_rcnn_r50_fpn_15e_deepfashion/20200329_192752.log.json) |

+| Backbone | Model type | Dataset | bbox detection Average Precision | segmentation Average Precision | Config | Download (Google) |

+| :------: | :--------: | :-----------------: | :------------------------------: | :----------------------------: | :----------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------: |

+| ResNet50 | Mask RCNN | DeepFashion-In-shop | 0.599 | 0.584 | [config](https://github.com/open-mmlab/mmdetection/blob/master/configs/deepfashion/mask_rcnn_r50_fpn_15e_deepfashion.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/deepfashion/mask_rcnn_r50_fpn_15e_deepfashion/mask_rcnn_r50_fpn_15e_deepfashion_20200329_192752.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/deepfashion/mask_rcnn_r50_fpn_15e_deepfashion/20200329_192752.log.json) |

## Citation

diff --git a/configs/deformable_detr/README.md b/configs/deformable_detr/README.md

index f415be350b9..378e1f26a2d 100644

--- a/configs/deformable_detr/README.md

+++ b/configs/deformable_detr/README.md

@@ -14,11 +14,11 @@ DETR has been recently proposed to eliminate the need for many hand-designed com

## Results and Models

-| Backbone | Model | Lr schd | box AP | Config | Download |

-|:------:|:--------:|:--------------:|:------:|:------:|:--------:|

-| R-50 | Deformable DETR |50e | 44.5 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/deformable_detr/deformable_detr_r50_16x2_50e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_r50_16x2_50e_coco/deformable_detr_r50_16x2_50e_coco_20210419_220030-a12b9512.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_r50_16x2_50e_coco/deformable_detr_r50_16x2_50e_coco_20210419_220030-a12b9512.log.json) |

-| R-50 | + iterative bounding box refinement |50e | 46.1 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/deformable_detr/deformable_detr_refine_r50_16x2_50e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_refine_r50_16x2_50e_coco/deformable_detr_refine_r50_16x2_50e_coco_20210419_220503-5f5dff21.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_refine_r50_16x2_50e_coco/deformable_detr_refine_r50_16x2_50e_coco_20210419_220503-5f5dff21.log.json) |

-| R-50 | ++ two-stage Deformable DETR |50e | 46.8 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/deformable_detr/deformable_detr_twostage_refine_r50_16x2_50e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_twostage_refine_r50_16x2_50e_coco/deformable_detr_twostage_refine_r50_16x2_50e_coco_20210419_220613-9d28ab72.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_twostage_refine_r50_16x2_50e_coco/deformable_detr_twostage_refine_r50_16x2_50e_coco_20210419_220613-9d28ab72.log.json) |

+| Backbone | Model | Lr schd | box AP | Config | Download |

+| :------: | :---------------------------------: | :-----: | :----: | :------------------------------------------------------------------------------------------------------------------------------------------: | :-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------: |

+| R-50 | Deformable DETR | 50e | 44.5 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/deformable_detr/deformable_detr_r50_16x2_50e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_r50_16x2_50e_coco/deformable_detr_r50_16x2_50e_coco_20210419_220030-a12b9512.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_r50_16x2_50e_coco/deformable_detr_r50_16x2_50e_coco_20210419_220030-a12b9512.log.json) |

+| R-50 | + iterative bounding box refinement | 50e | 46.1 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/deformable_detr/deformable_detr_refine_r50_16x2_50e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_refine_r50_16x2_50e_coco/deformable_detr_refine_r50_16x2_50e_coco_20210419_220503-5f5dff21.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_refine_r50_16x2_50e_coco/deformable_detr_refine_r50_16x2_50e_coco_20210419_220503-5f5dff21.log.json) |

+| R-50 | ++ two-stage Deformable DETR | 50e | 46.8 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/deformable_detr/deformable_detr_twostage_refine_r50_16x2_50e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_twostage_refine_r50_16x2_50e_coco/deformable_detr_twostage_refine_r50_16x2_50e_coco_20210419_220613-9d28ab72.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_twostage_refine_r50_16x2_50e_coco/deformable_detr_twostage_refine_r50_16x2_50e_coco_20210419_220613-9d28ab72.log.json) |

# NOTE

diff --git a/configs/detectors/README.md b/configs/detectors/README.md

index 3504ee2731a..baa245fe986 100644

--- a/configs/detectors/README.md

+++ b/configs/detectors/README.md

@@ -42,15 +42,15 @@ They can be used independently.

Combining them results in DetectoRS.

The results on COCO 2017 val are shown in the table below.

-| Method | Detector | Lr schd | Mem (GB) | Inf time (fps) | box AP | mask AP | Config | Download |

-|:------:|:--------:|:-------:|:--------:|:--------------:|:------:|:-------:|:------:|:--------:|

-| RFP | Cascade + ResNet-50 | 1x | 7.5 | - | 44.8 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/detectors/cascade_rcnn_r50_rfp_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/detectors/cascade_rcnn_r50_rfp_1x_coco/cascade_rcnn_r50_rfp_1x_coco-8cf51bfd.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/detectors/cascade_rcnn_r50_rfp_1x_coco/cascade_rcnn_r50_rfp_1x_coco_20200624_104126.log.json) |

-| SAC | Cascade + ResNet-50 | 1x | 5.6 | - | 45.0| | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/detectors/cascade_rcnn_r50_sac_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/detectors/cascade_rcnn_r50_sac_1x_coco/cascade_rcnn_r50_sac_1x_coco-24bfda62.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/detectors/cascade_rcnn_r50_sac_1x_coco/cascade_rcnn_r50_sac_1x_coco_20200624_104402.log.json) |

-| DetectoRS | Cascade + ResNet-50 | 1x | 9.9 | - | 47.4 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/detectors/detectors_cascade_rcnn_r50_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/detectors/detectors_cascade_rcnn_r50_1x_coco/detectors_cascade_rcnn_r50_1x_coco-32a10ba0.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/detectors/detectors_cascade_rcnn_r50_1x_coco/detectors_cascade_rcnn_r50_1x_coco_20200706_001203.log.json) |

-| RFP | HTC + ResNet-50 | 1x | 11.2 | - | 46.6 | 40.9 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/detectors/htc_r50_rfp_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/detectors/htc_r50_rfp_1x_coco/htc_r50_rfp_1x_coco-8ff87c51.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/detectors/htc_r50_rfp_1x_coco/htc_r50_rfp_1x_coco_20200624_103053.log.json) |

-| SAC | HTC + ResNet-50 | 1x | 9.3 | - | 46.4 | 40.9 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/detectors/htc_r50_sac_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/detectors/htc_r50_sac_1x_coco/htc_r50_sac_1x_coco-bfa60c54.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/detectors/htc_r50_sac_1x_coco/htc_r50_sac_1x_coco_20200624_103111.log.json) |

-| DetectoRS | HTC + ResNet-50 | 1x | 13.6 | - | 49.1 | 42.6 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/detectors/detectors_htc_r50_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/detectors/detectors_htc_r50_1x_coco/detectors_htc_r50_1x_coco-329b1453.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/detectors/detectors_htc_r50_1x_coco/detectors_htc_r50_1x_coco_20200624_103659.log.json) |

-| DetectoRS | HTC + ResNet-101 | 20e | 19.6 | | 50.5 | 43.9 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/detectors/detectors_htc_r101_20e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/detectors/detectors_htc_r101_20e_coco/detectors_htc_r101_20e_coco_20210419_203638-348d533b.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/detectors/detectors_htc_r101_20e_coco/detectors_htc_r101_20e_coco_20210419_203638.log.json) |

+| Method | Detector | Lr schd | Mem (GB) | Inf time (fps) | box AP | mask AP | Config | Download |

+| :-------: | :-----------------: | :-----: | :------: | :------------: | :----: | :-----: | :---------------------------------------------------------------------------------------------------------------------: | :----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------: |

+| RFP | Cascade + ResNet-50 | 1x | 7.5 | - | 44.8 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/detectors/cascade_rcnn_r50_rfp_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/detectors/cascade_rcnn_r50_rfp_1x_coco/cascade_rcnn_r50_rfp_1x_coco-8cf51bfd.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/detectors/cascade_rcnn_r50_rfp_1x_coco/cascade_rcnn_r50_rfp_1x_coco_20200624_104126.log.json) |

+| SAC | Cascade + ResNet-50 | 1x | 5.6 | - | 45.0 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/detectors/cascade_rcnn_r50_sac_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/detectors/cascade_rcnn_r50_sac_1x_coco/cascade_rcnn_r50_sac_1x_coco-24bfda62.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/detectors/cascade_rcnn_r50_sac_1x_coco/cascade_rcnn_r50_sac_1x_coco_20200624_104402.log.json) |

+| DetectoRS | Cascade + ResNet-50 | 1x | 9.9 | - | 47.4 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/detectors/detectors_cascade_rcnn_r50_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/detectors/detectors_cascade_rcnn_r50_1x_coco/detectors_cascade_rcnn_r50_1x_coco-32a10ba0.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/detectors/detectors_cascade_rcnn_r50_1x_coco/detectors_cascade_rcnn_r50_1x_coco_20200706_001203.log.json) |

+| RFP | HTC + ResNet-50 | 1x | 11.2 | - | 46.6 | 40.9 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/detectors/htc_r50_rfp_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/detectors/htc_r50_rfp_1x_coco/htc_r50_rfp_1x_coco-8ff87c51.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/detectors/htc_r50_rfp_1x_coco/htc_r50_rfp_1x_coco_20200624_103053.log.json) |

+| SAC | HTC + ResNet-50 | 1x | 9.3 | - | 46.4 | 40.9 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/detectors/htc_r50_sac_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/detectors/htc_r50_sac_1x_coco/htc_r50_sac_1x_coco-bfa60c54.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/detectors/htc_r50_sac_1x_coco/htc_r50_sac_1x_coco_20200624_103111.log.json) |

+| DetectoRS | HTC + ResNet-50 | 1x | 13.6 | - | 49.1 | 42.6 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/detectors/detectors_htc_r50_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/detectors/detectors_htc_r50_1x_coco/detectors_htc_r50_1x_coco-329b1453.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/detectors/detectors_htc_r50_1x_coco/detectors_htc_r50_1x_coco_20200624_103659.log.json) |

+| DetectoRS | HTC + ResNet-101 | 20e | 19.6 | | 50.5 | 43.9 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/detectors/detectors_htc_r101_20e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/detectors/detectors_htc_r101_20e_coco/detectors_htc_r101_20e_coco_20210419_203638-348d533b.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/detectors/detectors_htc_r101_20e_coco/detectors_htc_r101_20e_coco_20210419_203638.log.json) |

*Note*: This is a re-implementation based on MMDetection-V2.

The original implementation is based on MMDetection-V1.
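In the removed rows, each Download cell held `[model](...) | [log](...)` with a bare pipe. GitHub-Flavored Markdown therefore read the log link as an extra cell beyond the header's column count and ignored it. The added rows escape that separator as `\|`, which GFM treats as literal cell content. The sketch below uses a hypothetical `split_row` helper, which is not part of MMDetection, to count cells under the same rule and flag rows whose width differs from the header. It assumes no pipes inside code spans and no cell that ends in a literal backslash. The URLs are shortened placeholders.

```python
import re
from typing import List


def split_row(row: str) -> List[str]:
    """Split one Markdown table row into stripped cell strings."""
    body = row.strip()
    # Drop the optional leading and trailing pipes around the row.
    if body.startswith("|"):
        body = body[1:]
    if body.endswith("|") and not body.endswith("\\|"):
        body = body[:-1]
    # Split only on pipes that are not preceded by a backslash,
    # so an escaped pipe stays inside its cell.
    return [cell.strip() for cell in re.split(r"(?<!\\)\|", body)]


def column_mismatches(lines: List[str]) -> List[int]:
    """Return indices of body rows whose cell count differs from the header."""
    expected = len(split_row(lines[0]))
    # lines[1] is the delimiter row; body rows start at index 2.
    return [
        i for i, row in enumerate(lines[2:], start=2)
        if len(split_row(row)) != expected
    ]


header = "| Backbone | Model | Download |"
delimiter = "| :------: | :---: | :------: |"
old_row = "| R-50 | DETR | [model](m.pth) | [log](l.log.json) |"
new_row = r"| R-50 | DETR | [model](m.pth) \| [log](l.log.json) |"

print(len(split_row(old_row)), len(split_row(new_row)))  # 4 3
print(column_mismatches([header, delimiter, old_row, new_row]))  # [2]
```

Only the unescaped old-style row is flagged. The escaped row keeps both links in a single Download cell.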

diff --git a/configs/detr/README.md b/configs/detr/README.md

index 5f25357a18d..9f2485d0f42 100644

--- a/configs/detr/README.md

+++ b/configs/detr/README.md

@@ -14,9 +14,9 @@ We present a new method that views object detection as a direct set prediction p

## Results and Models

-| Backbone | Model | Lr schd | Mem (GB) | Inf time (fps) | box AP | Config | Download |

-|:------:|:--------:|:-------:|:--------:|:--------------:|:------:|:------:|:--------:|

-| R-50 | DETR |150e |7.9| | 40.1 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/detr/detr_r50_8x2_150e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/detr/detr_r50_8x2_150e_coco/detr_r50_8x2_150e_coco_20201130_194835-2c4b8974.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/detr/detr_r50_8x2_150e_coco/detr_r50_8x2_150e_coco_20201130_194835.log.json) |

+| Backbone | Model | Lr schd | Mem (GB) | Inf time (fps) | box AP | Config | Download |

+| :------: | :---: | :-----: | :------: | :------------: | :----: | :----------------------------------------------------------------------------------------------------: | :----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------: |

+| R-50 | DETR | 150e | 7.9 | | 40.1 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/detr/detr_r50_8x2_150e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/detr/detr_r50_8x2_150e_coco/detr_r50_8x2_150e_coco_20201130_194835-2c4b8974.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/detr/detr_r50_8x2_150e_coco/detr_r50_8x2_150e_coco_20201130_194835.log.json) |
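The new delimiter rows pad each centered `:---:` cell to the width of the column's widest entry. For example, the Method column uses `:-------:` because its longest value, `DetectoRS`, is nine characters. GFM requires only one or more dashes per delimiter cell, so this padding is cosmetic and mainly makes the raw Markdown easier to read. The sketch below reproduces that padding rule for center-aligned columns. It uses a hypothetical `separator_row` helper and is not necessarily how the formatter used for this change computes it.

```python
from typing import List


def separator_row(rows: List[List[str]]) -> str:
    """Build a center-aligned delimiter row sized to each column's widest cell."""
    n_cols = len(rows[0])
    widths = [max(len(row[i]) for row in rows) for i in range(n_cols)]
    # A centered GFM delimiter cell is ':' + dashes + ':'; keep at least one dash.
    cells = [":" + "-" * max(w - 2, 1) + ":" for w in widths]
    return "| " + " | ".join(cells) + " |"


rows = [
    ["Method", "Lr schd"],  # header row
    ["DetectoRS", "1x"],    # widest Method value has 9 characters
]
print(separator_row(rows))  # | :-------: | :-----: |
```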

## Citation

diff --git a/configs/double_heads/README.md b/configs/double_heads/README.md

index c7507e86916..4a149b5fc33 100644

--- a/configs/double_heads/README.md

+++ b/configs/double_heads/README.md

@@ -14,9 +14,9 @@ Two head structures (i.e. fully connected head and convolution head) have been w

## Results and Models

-| Backbone | Style | Lr schd | Mem (GB) | Inf time (fps) | box AP | Config | Download |

-| :-------------: | :-----: | :-----: | :------: | :------------: | :----: | :------: | :--------: |

-| R-50-FPN | pytorch | 1x | 6.8 | 9.5 | 40.0 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/double_heads/dh_faster_rcnn_r50_fpn_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/double_heads/dh_faster_rcnn_r50_fpn_1x_coco/dh_faster_rcnn_r50_fpn_1x_coco_20200130-586b67df.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/double_heads/dh_faster_rcnn_r50_fpn_1x_coco/dh_faster_rcnn_r50_fpn_1x_coco_20200130_220238.log.json) |

+| Backbone | Style | Lr schd | Mem (GB) | Inf time (fps) | box AP | Config | Download |

+| :------: | :-----: | :-----: | :------: | :------------: | :----: | :--------------------------------------------------------------------------------------------------------------------: | :---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------: |

+| R-50-FPN | pytorch | 1x | 6.8 | 9.5 | 40.0 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/double_heads/dh_faster_rcnn_r50_fpn_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/double_heads/dh_faster_rcnn_r50_fpn_1x_coco/dh_faster_rcnn_r50_fpn_1x_coco_20200130-586b67df.pth) \| [log](https://download.openmmlab.com/mmdetection/v2.0/double_heads/dh_faster_rcnn_r50_fpn_1x_coco/dh_faster_rcnn_r50_fpn_1x_coco_20200130_220238.log.json) |

## Citation

diff --git a/configs/dyhead/README.md b/configs/dyhead/README.md

index 068a35b1189..8e6aed3619b 100644

--- a/configs/dyhead/README.md

+++ b/configs/dyhead/README.md

@@ -14,10 +14,10 @@ The complex nature of combining localization and classification in object detect

## Results and Models

-| Method | Backbone | Style | Setting | Lr schd | Mem (GB) | Inf time (fps) | box AP | Config | Download |

-|:------:|:--------:|:-------:|:------------:|:-------:|:--------:|:--------------:|:------:|:------:|:--------:|

-| ATSS | R-50 | caffe | reproduction | 1x | 5.4 | 13.2 | 42.5 | [config](./atss_r50_caffe_fpn_dyhead_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dyhead/atss_r50_fpn_dyhead_for_reproduction_1x_coco/atss_r50_fpn_dyhead_for_reproduction_4x4_1x_coco_20220107_213939-162888e6.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dyhead/atss_r50_fpn_dyhead_for_reproduction_1x_coco/atss_r50_fpn_dyhead_for_reproduction_4x4_1x_coco_20220107_213939.log.json) |

-| ATSS | R-50 | pytorch | simple | 1x | 4.9 | 13.7 | 43.3 | [config](./atss_r50_fpn_dyhead_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dyhead/atss_r50_fpn_dyhead_4x4_1x_coco/atss_r50_fpn_dyhead_4x4_1x_coco_20211219_023314-eaa620c6.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dyhead/atss_r50_fpn_dyhead_4x4_1x_coco/atss_r50_fpn_dyhead_4x4_1x_coco_20211219_023314.log.json) |

+| Method | Backbone | Style | Setting | Lr schd | Mem (GB) | Inf time (fps) | box AP | Config | Download |