
Memory leak + seat reservation expired error #32

Comments

|

+1 Am also out of things to try |

|

The memory leak is unfortunately a known issue (OptimalBits/redbird#237), although it is not clear under which circumstances it happens. I suspect it's related to TLS termination at the Node/proxy level. In Arena, I believe this problem doesn't exist because TLS termination happens at another level (haproxy or nginx). The upcoming version ( If your cluster is at an inconsistent state, I'd recommend checking for the |

|

Well, we terminate HTTPS at the Ingress Controller, which is pretty close to how it's deployed in Arena (termination is done by a load balancer). So I doubt it has to do with TLS termination :/ |

|

Apparently a user of EDIT: not sure it's the same leak we have, sounds reasonable though |

|

So |

|

Yes, it is! |

|

Sounds like we cannot do anything atm to mitigate this? |

I am not sure I understand this. What do you mean by "inconsistent state"? @endel |

|

We have also run into this issue several times. So is the only solution to use the 0.15 Preview that @endel provides? Is there any other solution? |

|

ANY UPDATES? |

|

@nzmax It seems to me that the proxy will no longer be fixed. Endel has not commented on this. Looks like we'll have to work with the new architecture in version 0.15. We don't know how this is supposed to work in a Kubernetes environment and are still waiting for news... |

|

We are definitely interested in fixing this issue. We are still trying to reproduce the memory leak in a controlled environment. There are 2 things you can do to help:

|

|

We were seeing consistent memory leaks, gradually growing over time. So far, it seems promising. I will update in a week or so if it resolves the issue. It tends to take about a week before our proxies crash. |

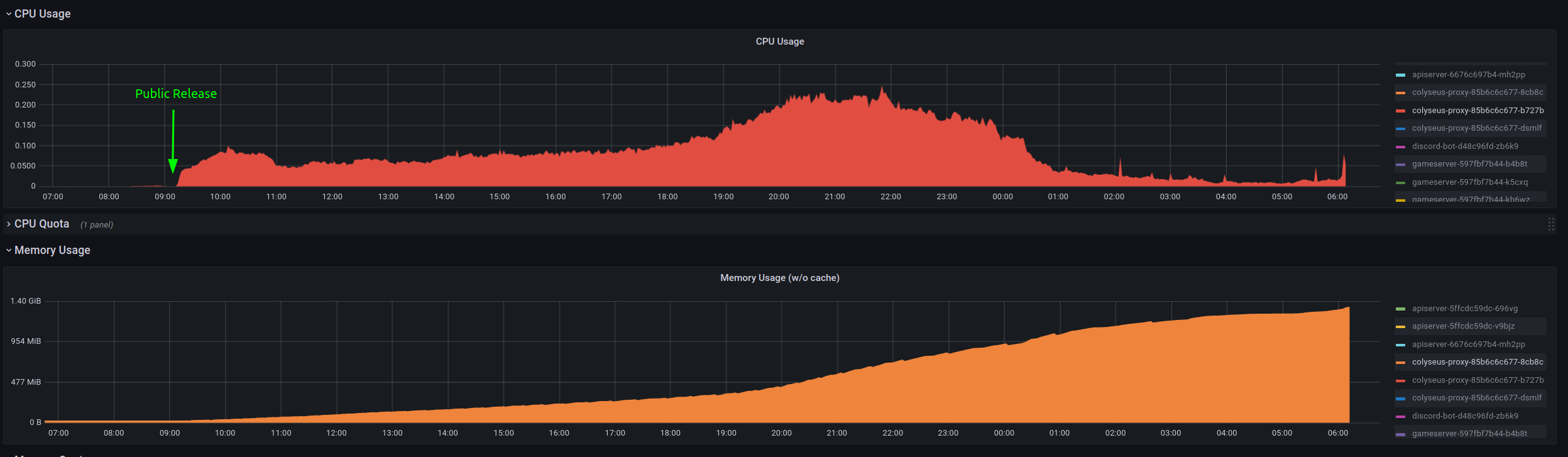

Yesterday, we switched our live system to our new Kubernetes setup, utilizing the Colyseus Proxy together with MongoDB and Redis for load balancing. We had a public beta over the last month with about 800 players a day and everything worked fine. But after about 20k players played for a day, we were seeing `seat reservation expired` more and more often, up to a point where nobody was able to join or create any lobby.

What we found:

Examining the resource consumption of the Colyseus Proxy over the last 24 hours suggests a memory leak:

Our logs repeatedly show these errors:

Restarting the proxies fixes the problem temporarily.
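Since restarting helps for a while, one possible stopgap is to recycle a proxy automatically once its memory stays above a threshold. The following is only a minimal sketch of that idea, not part of the Colyseus Proxy itself: `shouldRestart`, `startWatchdog`, `WatchdogOptions`, and the threshold values are hypothetical names and numbers chosen for illustration. It assumes the process runs under something that restarts it after exit (for example a Kubernetes Deployment).

```typescript
// Hypothetical watchdog sketch: none of these names come from Colyseus.

interface WatchdogOptions {
  limitBytes: number;   // RSS threshold in bytes (assumed value, tune per deployment)
  consecutive: number;  // how many samples in a row must exceed the limit
}

// Returns true only if the last `consecutive` samples are all above the limit,
// so that a single short spike does not trigger a restart.
function shouldRestart(samples: readonly number[], opts: WatchdogOptions): boolean {
  if (opts.consecutive <= 0 || samples.length < opts.consecutive) {
    return false;
  }
  const recent = samples.slice(-opts.consecutive);
  return recent.every((rss) => rss > opts.limitBytes);
}

// Polls `readRss` every `intervalMs` and calls `onExceed` once the limit is
// exceeded for long enough. Both callbacks are injected so the logic stays testable.
// In Node.js, `readRss` could be `() => process.memoryUsage().rss` and
// `onExceed` could be `() => process.exit(1)`.
function startWatchdog(
  readRss: () => number,
  onExceed: () => void,
  opts: WatchdogOptions,
  intervalMs: number
): ReturnType<typeof setInterval> {
  const samples: number[] = [];
  const timer = setInterval(() => {
    samples.push(readRss());
    // Keep only as many samples as the decision needs.
    if (samples.length > opts.consecutive) {
      samples.shift();
    }
    if (shouldRestart(samples, opts)) {
      clearInterval(timer);
      onExceed();
    }
  }, intervalMs);
  return timer;
}
```

This only hides the symptom. Connections that are open on a proxy at the moment it exits are still dropped, so it does not replace finding the actual leak.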

Setup:

Edit: We were running 2 proxies behind a load balancer and 5 gameserver instances. This might be related to #30.

We really need help with this issue, as I am at my wit's end.

Thank you in advance! 🙏
