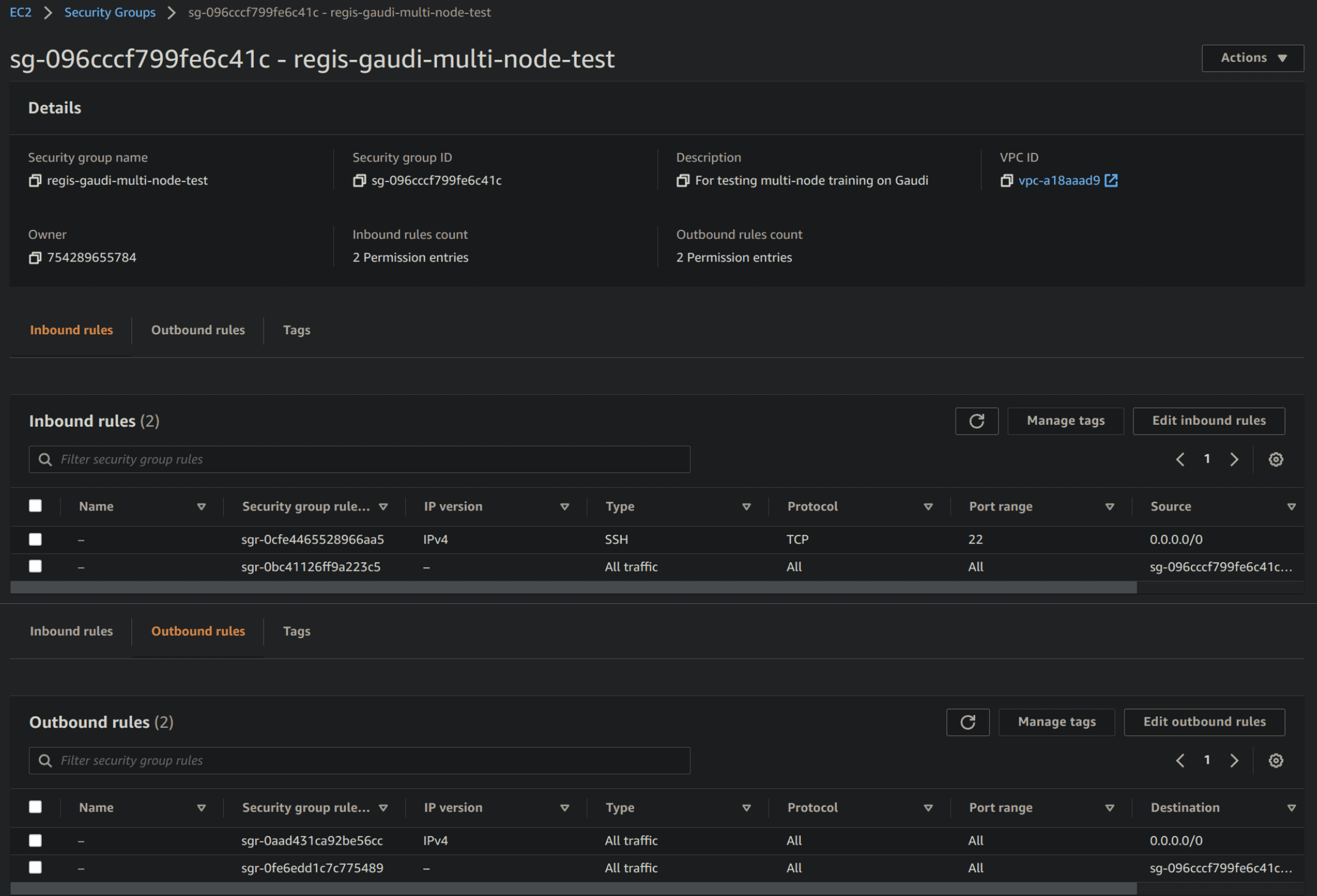

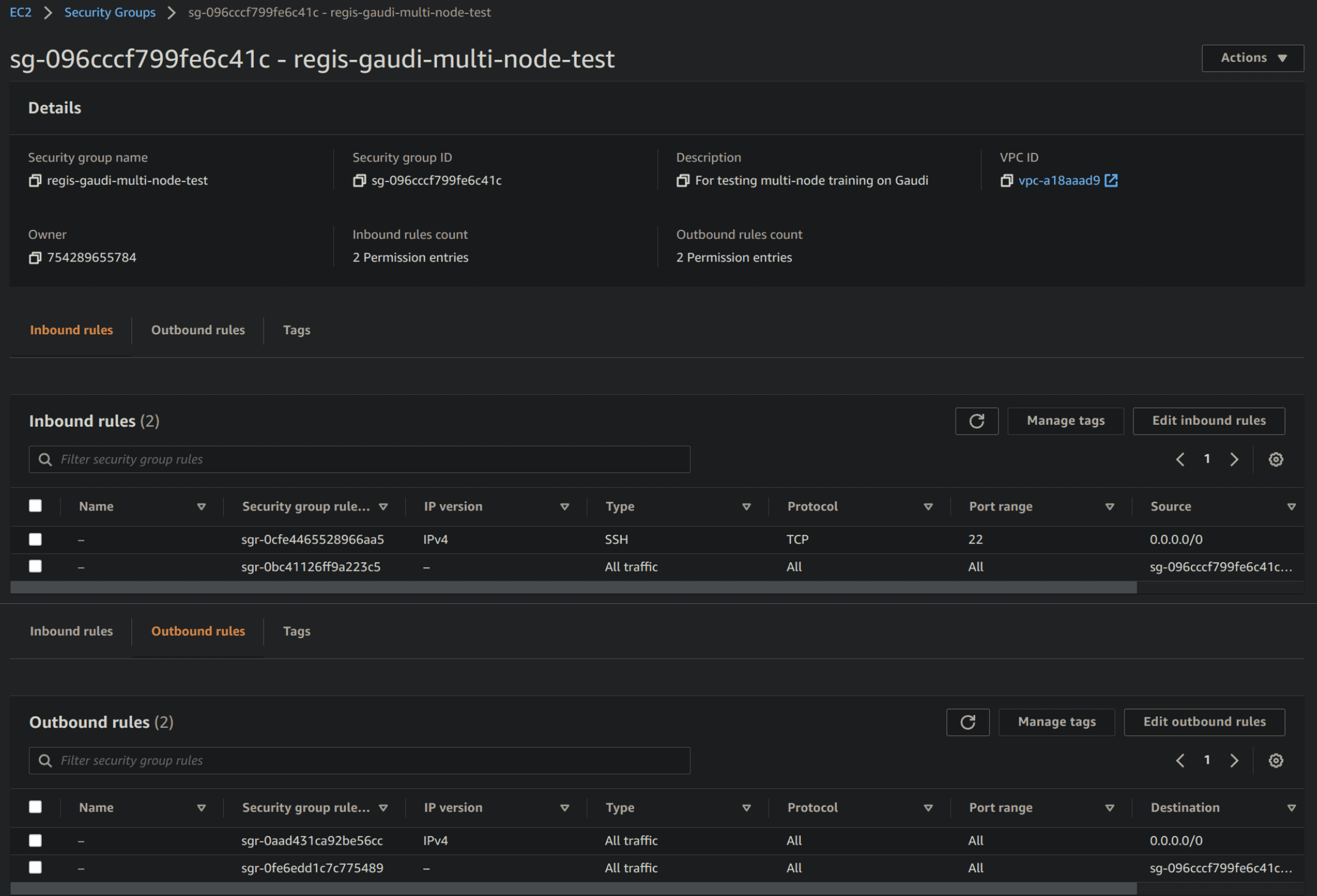

- Security group for multi-node training on AWS DL1 instances

@@ -22,7 +26,6 @@ limitations under the License.

-

# Optimum for Intel® Gaudi® Accelerators

Optimum for Intel Gaudi - a.k.a. `optimum-habana` - is the interface between the Transformers and Diffusers libraries and [Intel Gaudi AI Accelerators (HPU)](https://docs.habana.ai/en/latest/index.html).

@@ -249,4 +252,4 @@ After training your model, feel free to submit it to the Intel [leaderboard](htt

## Development

-Check the [contributor guide](https://github.com/huggingface/optimum/blob/main/CONTRIBUTING.md) for instructions.

\ No newline at end of file

+Check the [contributor guide](https://github.com/huggingface/optimum/blob/main/CONTRIBUTING.md) for instructions.

diff --git a/conftest.py b/conftest.py

deleted file mode 100644

index 71cb6bb7ca..0000000000

--- a/conftest.py

+++ /dev/null

@@ -1,25 +0,0 @@

-class Secret:

-    """

-    Taken from: https://stackoverflow.com/a/67393351

-    """

-

-    def __init__(self, value):

-        self.value = value

-

-    def __repr__(self):

-        return "Secret(********)"

-

-    def __str___(self):

-        return "*******"

-

-

-def pytest_addoption(parser):

-    parser.addoption("--token", action="store", default=None)

-

-

-def pytest_generate_tests(metafunc):

-    # This is called for every test. Only get/set command line arguments

-    # if the argument is specified in the list of test "fixturenames".

-    option_value = Secret(metafunc.config.option.token)

-    if "token" in metafunc.fixturenames:

-        metafunc.parametrize("token", [option_value])

diff --git a/docs/Dockerfile b/docs/Dockerfile

deleted file mode 100644

index a31904c957..0000000000

--- a/docs/Dockerfile

+++ /dev/null

@@ -1,15 +0,0 @@

-FROM vault.habana.ai/gaudi-docker/1.16.0/ubuntu22.04/habanalabs/pytorch-installer-2.2.2:latest

-

-ARG commit_sha

-ARG clone_url

-

-# Need node to build doc HTML. Taken from https://stackoverflow.com/a/67491580

-RUN apt-get update && apt-get install -y \

-    software-properties-common \

-    npm

-RUN npm install n -g && \

-    n latest

-

-RUN git clone $clone_url optimum-habana && cd optimum-habana && git checkout $commit_sha

-RUN python3 -m pip install --no-cache-dir --upgrade pip

-RUN python3 -m pip install --no-cache-dir ./optimum-habana[quality]

diff --git a/docs/source/_toctree.yml b/docs/source/_toctree.yml

deleted file mode 100644

index aa79f0df2e..0000000000

--- a/docs/source/_toctree.yml

+++ /dev/null

@@ -1,51 +0,0 @@

-- sections:

-  - local: index

-    title: 🤗 Optimum Habana

-  - local: installation

-    title: Installation

-  - local: quickstart

-    title: Quickstart

-  - sections:

-    - local: tutorials/overview

-      title: Overview

-    - local: tutorials/single_hpu

-      title: Single-HPU Training

-    - local: tutorials/distributed

-      title: Distributed Training

-    - local: tutorials/inference

-      title: Run Inference

-    - local: tutorials/stable_diffusion

-      title: Stable Diffusion

-    - local: tutorials/stable_diffusion_ldm3d

-      title: LDM3D

-    title: Tutorials

-  - sections:

-    - local: usage_guides/overview

-      title: Overview

-    - local: usage_guides/pretraining

-      title: Pretraining Transformers

-    - local: usage_guides/accelerate_training

-      title: Accelerating Training

-    - local: usage_guides/accelerate_inference

-      title: Accelerating Inference

-    - local: usage_guides/deepspeed

-      title: How to use DeepSpeed

-    - local: usage_guides/multi_node_training

-      title: Multi-node Training

-    title: How-To Guides

-  - sections:

-    - local: concept_guides/hpu

-      title: What are Habana's Gaudi and HPUs?

-    title: Conceptual Guides

-  - sections:

-    - local: package_reference/trainer

-      title: Gaudi Trainer

-    - local: package_reference/gaudi_config

-      title: Gaudi Configuration

-    - local: package_reference/stable_diffusion_pipeline

-      title: Gaudi Stable Diffusion Pipeline

-    - local: package_reference/distributed_runner

-      title: Distributed Runner

-    title: Reference

-  title: Optimum Habana

-  isExpanded: false

diff --git a/docs/source/concept_guides/hpu.mdx b/docs/source/concept_guides/hpu.mdx

deleted file mode 100644

index 111f8be903..0000000000

--- a/docs/source/concept_guides/hpu.mdx

+++ /dev/null

@@ -1,49 +0,0 @@

-

-

-# What are Intel® Gaudi® 1, Intel® Gaudi® 2 and HPUs?

-

-[Intel Gaudi 1](https://habana.ai/training/gaudi/) and [Intel Gaudi 2](https://habana.ai/training/gaudi2/) are the first- and second-generation AI hardware accelerators designed by Habana Labs and Intel.

-A single server contains 8 devices called Habana Processing Units (HPUs) with 96GB of memory each on Gaudi2 and 32GB on first-gen Gaudi.

-Check out [here](https://docs.habana.ai/en/latest/Gaudi_Overview/Gaudi_Architecture.html) for more information about the underlying hardware architecture.

-

-The Habana SDK is called [SynapseAI](https://docs.habana.ai/en/latest/index.html) and is common to both first-gen Gaudi and Gaudi2.

-As a consequence, 🤗 Optimum Habana is fully compatible with both generations of accelerators.

-

-

-## Execution modes

-

-Two execution modes are supported on HPUs for PyTorch, which is the main deep learning framework the 🤗 Transformers and 🤗 Diffusers libraries rely on:

-

-- *Eager mode* execution, where the framework executes one operation at a time as defined in [standard PyTorch eager mode](https://pytorch.org/tutorials/beginner/hybrid_frontend/learning_hybrid_frontend_through_example_tutorial.html).

-- *Lazy mode* execution, where operations are internally accumulated in a graph. The execution of the operations in the accumulated graph is triggered in a lazy manner, only when a tensor value is required by the user or when it is explicitly required in the script. The [SynapseAI graph compiler](https://docs.habana.ai/en/latest/Gaudi_Overview/SynapseAI_Software_Suite.html#graph-compiler-and-runtime) will optimize the execution of the operations accumulated in the graph (e.g. operator fusion, data layout management, parallelization, pipelining and memory management, graph-level optimizations).

-

-See [here](../usage_guides/accelerate_training#lazy-mode) how to use these execution modes in Optimum for Intel Gaudi.

-

-

-## Distributed training

-

-First-gen Gaudi and Gaudi2 are well-equipped for distributed training:

-

-- *Scale-up* to 8 devices on one server. See [here](../tutorials/distributed) how to perform distributed training on a single node.

-- *Scale-out* to 1000s of devices on several servers. See [here](../usage_guides/multi_node_training) how to do multi-node training.

-

-

-## Inference

-

-HPUs can also be used to perform inference:

-- Through HPU graphs that are well-suited for latency-sensitive applications. Check out [here](../usage_guides/accelerate_inference) how to use them.

-- In lazy mode, which can be used the same way as for training.

diff --git a/docs/source/index.mdx b/docs/source/index.mdx

deleted file mode 100644

index b33cfd062e..0000000000

--- a/docs/source/index.mdx

+++ /dev/null

@@ -1,119 +0,0 @@

-

-

-

-# Optimum for Intel Gaudi

-

-Optimum for Intel Gaudi is the interface between the Transformers and Diffusers libraries and [Intel® Gaudi® AI Accelerators (HPUs)](https://docs.habana.ai/en/latest/index.html).

-It provides a set of tools that enable easy model loading, training and inference on single- and multi-HPU settings for various downstream tasks as shown in the table below.

-

-HPUs offer fast model training and inference as well as a great price-performance ratio.

-Check out [this blog post about BERT pre-training](https://huggingface.co/blog/pretraining-bert) and [this post benchmarking Intel Gaudi 2 with NVIDIA A100 GPUs](https://huggingface.co/blog/habana-gaudi-2-benchmark) for concrete examples.

-If you are not familiar with HPUs, we recommend you take a look at [our conceptual guide](./concept_guides/hpu).

-

-

-The following model architectures, tasks and device distributions have been validated for Optimum for Intel Gaudi:

-

-

-

-

-Learn the basics and become familiar with training transformers on HPUs with 🤗 Optimum. Start here if you are using 🤗 Optimum Habana for the first time!

- -Practical guides to help you achieve a specific goal. Take a look at these guides to learn how to use 🤗 Optimum Habana to solve real-world problems.

- -High-level explanations for building a better understanding of important topics such as HPUs.

- -Technical descriptions of how the Habana classes and methods of 🤗 Optimum Habana work.

- -

-  - Security group for multi-node training on AWS DL1 instances

-

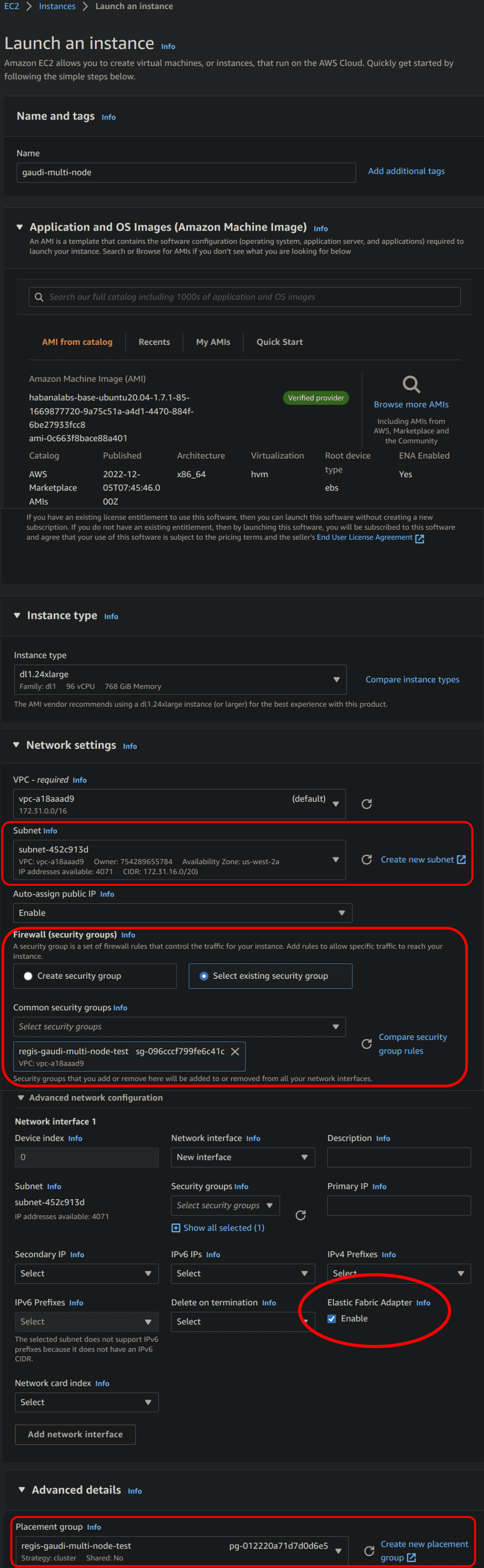

-  - Parameters for launching EFA-enabled AWS instances. The important parameters to set are circled in red. For the sake of clarity, not all parameters are represented.

-